The Convergence of Sound and Synthesis

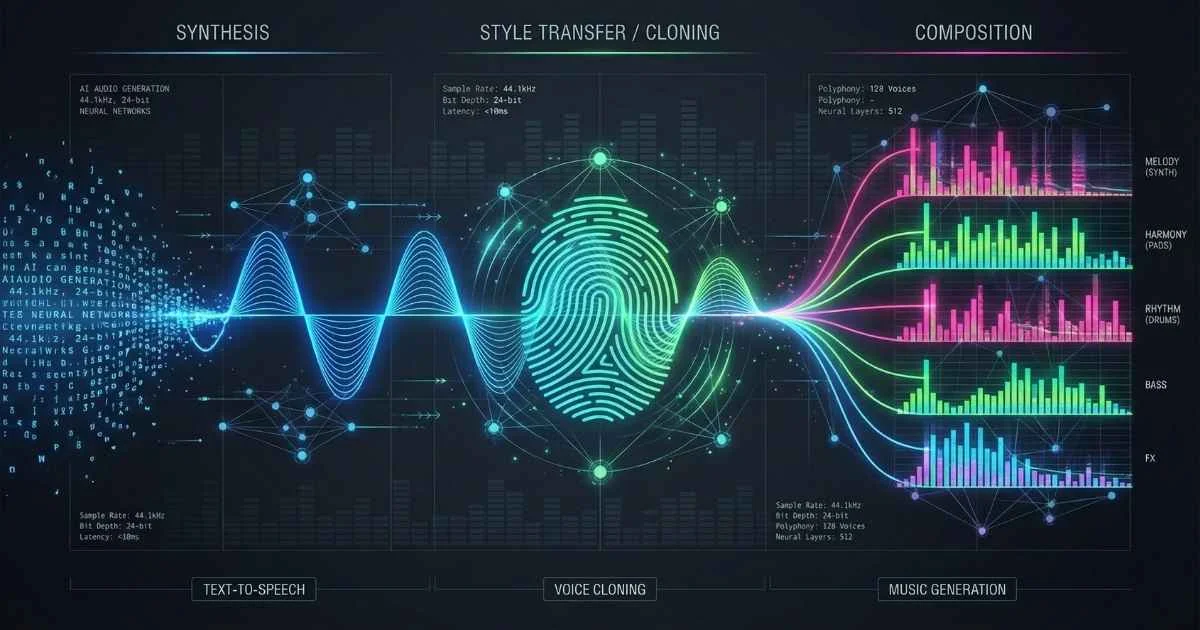

Audio technology has reached an inflection point where synthetic speech is often difficult to distinguish from a human recording. The “robotic voice” of the past decade has been replaced by systems capable of capturing the nuance, emotion, and prosody of natural human speech.

AI audio is reshaping how we create and consume sound.

From instant voice cloning that requires only seconds of reference audio to generative music models that compose full orchestral scores, the tools of 2025 offer unprecedented creative power. However, they also raise significant ethical questions regarding consent and authenticity.

This guide provides a comprehensive technical and ethical overview of the AI audio landscape, covering:

- How text-to-speech evolved from robotic squawks to emotional intelligence

- Which platforms to use for voice generation, cloning, and music creation

- The very real risks of deepfakes and how to protect yourself

- Step-by-step tutorials to create your first AI voice and music

Let’s explore the sound of the future.

| Metric | Value | Note |
|---|---|---|
| TTS market (2025) | $4.9B | 18.4% CAGR |
| Voice cloning market | $2.64B | 28% CAGR |
| AI music generation market | $2.92B | 2025 valuation |
| ElevenLabs valuation | $6.6B | Doubled in 9 months |

Sources: Business Research Company • MarketsandMarkets • Analytics India Magazine
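CAGR is compound growth: each year's rate applies to the previous year's total. Below is a minimal sketch for sanity-checking figures like these. `cagr` and `project` are illustrative names. The projection assumes the quoted rate holds constant, which no forecast guarantees.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.184 means 18.4%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(value: float, rate: float, years: int) -> float:
    """Value after `years` of compounding at a constant annual `rate`."""
    return value * (1 + rate) ** years


# TTS market: $4.9B in 2025, assuming the 18.4% CAGR holds every year.
for year in range(2025, 2031):
    print(year, f"${project(4.9, 0.184, year - 2025):.2f}B")

# Deepfake files: 500K (2023) to 8M (2025) is a 16x rise over two years.
print(f"{cagr(500_000, 8_000_000, 2):.0%} per year")  # 300% per year
```

Run in reverse, `cagr` also checks growth claims. The deepfake-file counts cited later (500K in 2023, 8 million in 2025) work out to 300% growth per year.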

From Robot Voice to Emotional Intelligence: The TTS Evolution

The Long Road to Natural Speech

Text-to-speech technology has been around since the 1960s—but for most of that history, it sounded like a malfunctioning robot reading a dictionary. Remember Microsoft Sam? Stephen Hawking’s synthesized voice? Those were state-of-the-art in their time.

Here’s how TTS evolved:

| Era | Technology | Sound Quality | Example |
|---|---|---|---|
| 1960s-1980s | Formant Synthesis | Robotic, mechanical | Early speech synthesizers |
| 1990s-2000s | Concatenative | Choppy, unnatural pauses | Microsoft Sam, AT&T Natural Voices |
| 2010s | Statistical Parametric | Better flow, still artificial | Google Translate voice |
| 2016-2020 | Neural TTS (WaveNet/Tacotron) | Near-human, occasional glitches | Google Assistant, Alexa |
| 2021-2024 | Diffusion/Transformer TTS | Often indistinguishable from human | ElevenLabs, OpenAI TTS |
| 2025 | Emotional Intelligence TTS | Infers emotion from context | Hume AI Octave, ElevenLabs v2 |

The breakthrough came in 2016, when DeepMind (now Google DeepMind) published WaveNet. This neural network generates raw audio one sample at a time, with each sample conditioned on the ones before it. Suddenly, synthesized speech had the subtle variations (the breaths, the micro-pauses, the warmth) that make human speech feel alive.
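WaveNet treats predicting the next sample as a classification problem. The original paper applies μ-law companding to the waveform and quantizes it to 256 values, which gives quiet samples finer resolution than loud ones. Below is a minimal sketch of that transform alone, using only the standard library. It illustrates the 8-bit quantization step, not the neural network that predicts the classes.

```python
import math

MU = 255  # 8-bit mu-law: 256 classes, as described in the WaveNet paper


def mu_law_encode(x: float, mu: int = MU) -> int:
    """Map a sample in [-1, 1] to one of mu + 1 integer classes."""
    x = max(-1.0, min(1.0, x))  # clip out-of-range samples
    compressed = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    # Shift [-1, 1] to [0, mu] and round to the nearest class index.
    return int(round((compressed + 1) / 2 * mu))


def mu_law_decode(q: int, mu: int = MU) -> float:
    """Map a class index back to an approximate sample in [-1, 1]."""
    compressed = 2 * q / mu - 1
    # Inverse companding: sign(y) * ((1 + mu)^|y| - 1) / mu
    return math.copysign(math.expm1(abs(compressed) * math.log1p(mu)) / mu, compressed)


for x in (-0.5, -0.01, 0.0, 0.01, 0.5):
    q = mu_law_encode(x)
    print(f"{x:+.2f} -> class {q:3d} -> {mu_law_decode(q):+.4f}")
```

The round-trip error is tiny near zero and grows toward ±1. That is the intended trade-off, because speech spends most of its time at low amplitudes.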

How Modern TTS Works

Today’s TTS systems don’t just “read” text—they understand it. Here’s the simplified pipeline:

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%
flowchart LR
A["Text Input"] --> B["Text Analysis"]
B --> C["Prosody Engine"]
C --> D["Neural Synthesis"]
D --> E["Audio Output"]
```

How Text-to-Speech Works: your text is parsed and understood (Text Analysis), given rhythm and emotion (Prosody Engine), turned into a waveform (Neural Synthesis), and played back as human-like speech (Audio Output).

💡 Key Concept: Modern neural TTS can generate speech with emotional intelligence—inferring appropriate tone from context without explicit markers.
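The Text Analysis stage typically starts with text normalization. This step turns written forms such as abbreviations and digits into the words a voice should actually say. Below is a deliberately tiny sketch. It assumes hypothetical lookup tables and handles only whole numbers from 0 to 999. Production front ends use much larger rule sets plus context, since "Dr." can mean "Doctor" or "Drive".

```python
import re

# Hypothetical, tiny lookup tables for illustration only.
ABBREVIATIONS = {"Dr.": "Doctor", "St.": "Street", "approx.": "approximately"}
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer from 0 to 999."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("" if ones == 0 else "-" + ONES[ones])
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        words = ONES[hundreds] + " hundred"
        return words if rest == 0 else words + " " + number_to_words(rest)
    raise ValueError("this sketch only handles 0-999")


def normalize(text: str) -> str:
    """Expand known abbreviations, then spell out standalone 1-3 digit numbers.

    Longer digit runs are left unchanged.
    """
    for abbr, full in ABBREVIATIONS.items():
        text = text.replace(abbr, full)
    return re.sub(r"\b\d{1,3}\b", lambda m: number_to_words(int(m.group())), text)


print(normalize("Meet Dr. Lee at 221 Baker St. at 9"))
# Meet Doctor Lee at two hundred twenty-one Baker Street at nine
```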

Key Concepts You Should Know

Prosody - The rhythm, stress, and intonation of speech. It’s why “I didn’t say he stole the money” can mean seven different things depending on which word you emphasize.
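Many engines let you pin down stress explicitly with SSML, the W3C Speech Synthesis Markup Language. The sketch below renders all seven readings of the example sentence by wrapping one word at a time in an `<emphasis>` element. How strongly each engine honors `<emphasis>`, or whether it does at all, varies. Treat the output as input to test, not a guarantee.

```python
from xml.sax.saxutils import escape

SENTENCE = "I didn't say he stole the money"


def emphasize(sentence: str, index: int, level: str = "strong") -> str:
    """Return an SSML document with the word at `index` wrapped in <emphasis>."""
    words = [escape(w) for w in sentence.split()]  # escape &, <, > in the text
    words[index] = f'<emphasis level="{level}">{words[index]}</emphasis>'
    return "<speak>" + " ".join(words) + "</speak>"


# One SSML document per stressed word: seven readings of the same text.
variants = [emphasize(SENTENCE, i) for i in range(len(SENTENCE.split()))]
for ssml in variants:
    print(ssml)
```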

Emotional Intelligence - The newest frontier. Systems like Hume AI can now infer the appropriate emotion from context. Type “I’m so sorry for your loss” and it will sound genuinely sympathetic—without you specifying the tone.

Zero-Shot Voice Cloning - Creating a synthetic voice from just a few seconds of audio, without training or fine-tuning a model on that speaker. ElevenLabs can clone a voice from 30 seconds of recording.

Latency - How quickly speech is generated after you submit text. Critical for real-time applications like voice agents. Cartesia achieves sub-100ms latency.
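For voice agents, the number that matters most is usually time to first audio: how long before the first chunk can start playing, rather than how long the whole clip takes to render. The sketch below measures both for any iterator of audio chunks. `fake_tts_stream` is a hypothetical stand-in with made-up delays. Replace it with a real streaming client to measure an actual service.

```python
import time
from typing import Iterator, Tuple


def fake_tts_stream(chunks: int = 5, first_delay: float = 0.09,
                    chunk_delay: float = 0.02) -> Iterator[bytes]:
    """Hypothetical streaming TTS response with made-up timings."""
    time.sleep(first_delay)            # model latency before any audio
    for _ in range(chunks):
        yield b"\x00" * 1024           # placeholder audio bytes
        time.sleep(chunk_delay)        # time to produce the next chunk


def measure(stream: Iterator[bytes]) -> Tuple[float, float]:
    """Return (time to first chunk, total time), both in milliseconds."""
    start = time.perf_counter()
    first = None
    for _ in stream:
        if first is None:
            first = time.perf_counter() - start
    total = time.perf_counter() - start
    if first is None:
        raise ValueError("stream produced no audio")
    return first * 1000, total * 1000


ttfb_ms, total_ms = measure(fake_tts_stream())
print(f"time to first audio: {ttfb_ms:.0f} ms, full clip: {total_ms:.0f} ms")
```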

🎯 Why This Matters: Understanding these concepts helps you choose the right platform. Need emotional expressiveness? Hume AI. Need speed? Cartesia. Need the best all-around quality? ElevenLabs.

The Major Players: TTS Platforms in December 2025

Let me introduce you to the tools that are defining the AI voice landscape.

ElevenLabs - The Industry Leader

ElevenLabs has become synonymous with AI voice. Their December 2025 numbers are staggering:

- Valuation: $6.6 billion (doubled in just 9 months)

- Meta Partnership: Announced December 11, 2025—dubbing Instagram Reels into local languages, creating expressive character voices for Meta Horizon VR

- Scribe v2: Human-quality live transcription in under 150 milliseconds, supporting 90+ languages

What makes ElevenLabs special:

Think of ElevenLabs as the “Photoshop of voice.” Just as Photoshop revolutionized image editing, ElevenLabs has made professional voice production accessible to anyone with a browser.

- Industry-leading voice quality (98% realism score in blind tests)

- Voice cloning from 30-second samples—simply upload audio, and it creates a “voice fingerprint”

- Full emotion control: Inline audio tags let you specify `[whisper]`, `[excited]`, or `[sad]` anywhere in your text

- Multi-speaker dialogue: Generate conversations between multiple voices in one generation

- 73 languages and 420+ dialects supported

- C2PA content watermarking: Every generated audio file is signed for authenticity—helping combat deepfakes

- Eleven Music: AI music generation launched August 2025—studio-grade music with vocals or instrumentals from text prompts, cleared for commercial use

- Stems Separation: Split songs into 2, 4, or 6 components (vocals, drums, bass, other)

- FL Studio Integration: December 2025 integration with FL Cloud Pro for AI-powered sample creation

- Iconic Voices: Partnerships with Michael Caine and Matthew McConaughey—their voices available on ElevenReader and the Iconic Marketplace

- ElevenLabs Agents: Real-time conversation monitoring, GPT-5.1 support, Hinglish language mode, agent coaching and evaluation
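The sketch below shows how inline audio tags travel in an API call. The request is built with the standard library but not sent. It assumes ElevenLabs' v1 text-to-speech endpoint (`POST /v1/text-to-speech/{voice_id}` with an `xi-api-key` header) and that `eleven_v3` is the model ID that interprets audio tags. The voice ID and key are placeholders. Given the deprecations noted below, check current model IDs against ElevenLabs' API reference.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(voice_id: str, text: str, api_key: str,
                      model_id: str = "eleven_v3") -> urllib.request.Request:
    """Prepare (but do not send) a text-to-speech POST request.

    `model_id` defaults to an assumed tag-aware model; verify before use.
    """
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key,
                 "Content-Type": "application/json",
                 "Accept": "audio/mpeg"},
        method="POST",
    )


req = build_tts_request(
    voice_id="YOUR_VOICE_ID",                       # placeholder
    text="[whisper] I have a secret. [excited] We shipped it!",
    api_key="YOUR_API_KEY",                         # placeholder
)
# To synthesize for real: audio_bytes = urllib.request.urlopen(req).read()
```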

⚠️ Model Changes (December 2025): ElevenLabs discontinued V1 models (Monolingual V1, English V1, Multilingual V1) on December 15, 2025. English V2 deprecated January 15, 2026.

📦 Try This Now: Visit elevenlabs.io, sign up for free (10,000 characters/month), and type “Hello, I’m testing AI voice generation. Can you believe how natural this sounds?” Click generate—you’ll hear the result in under a second.

Pricing: Free tier (10,000 characters/month), Starter $5/mo, Creator $22/mo, Pro $99/mo

Best for: Audiobooks, professional voiceovers, content creators, multilingual content

Source: Analytics India Magazine (Dec 2025), Economic Times (Dec 2025)

Hume AI - The Empathic Voice 🔥

Hume AI focuses on something few other providers do: building AI that detects and responds to how you feel.

The Simple Explanation:

Most TTS systems are like reading a script—they say the words, but they don’t feel them. Hume AI is like talking to someone who actually listens. If you sound stressed, it responds with calm. If you’re excited, it matches your energy.

October 2025 Update - Octave 2:

Hume AI launched Octave 2 in October 2025—their next-generation multilingual voice AI model:

- 40% faster than the original, generating audio in under 200ms

- 11 languages supported with natural emotional expression

- Voice conversion: Transform any voice while preserving emotion

- Direct phoneme editing: Fine-tune pronunciation at the sound level

- Half the price of Octave 1

Empathic Voice Interface (EVI 4 mini):

The EVI 4 mini integrates Octave 2 into a speech-to-speech API. It can:

- Detect emotional tones from your voice in real-time

- Express nuances like surprise, sarcasm, and genuine empathy

- Adapt its responses based on your emotional state

- Handle complex conversations with emotional memory

EVI 3 (May 2025):

Hume AI launched EVI 3 in May 2025, describing it as the world’s most realistic and instructible speech-to-speech foundation model:

- Instant voice generation: Create new voices and personalities on the fly

- Enhanced realism: More natural conversational dynamics and speech patterns

- Improved context understanding: Better interpretation of emotional context in conversations

November 2025 API Updates:

- New `SESSION_SETTINGS` chat event for EVI API

- Voice conversion endpoints for TTS API

- Control plane API for EVI

- Voice changes within active sessions

Real-World Example:

Imagine calling a customer service line when you’re frustrated. Traditional systems respond with robotic cheer: “I’m sorry to hear that! How can I help?” Hume AI detects your frustration and responds with genuine acknowledgment: “I can hear this has been really frustrating for you. Let me help sort this out.” The difference is subtle but profound.

Best for: AI characters, customer service bots, mental health applications, gaming NPCs

Source: Hume AI Blog (Oct 2025)

🔥 Emotion AI Capabilities (Hot in 2025)

Emotional intelligence in voice AI

| Capability | Hume AI | Cartesia | ElevenLabs |
|---|---|---|---|
| Detect Stress | ✓ | ✓ | ✗ |
| Express Sarcasm | ✓ | ✗ | ✗ |
| Adapt to User Mood | ✓ | ✓ | ✓ |
| Empathic Responses | ✓ | ✗ | ✗ |
| Emotional Memory | ✓ | ✗ | ✗ |
| Real-Time Analysis | ✓ | ✓ | ✓ |

🎭 Why It Matters: Hume AI’s EVI, which builds on the Octave voice model, can detect emotions like stress, sarcasm, and joy, then respond with appropriate empathy. This enables conversational AI that adapts to how you’re feeling.

Cartesia - Ultra-Low Latency

Cartesia’s Sonic-3 model, launched October-November 2025, sets new standards for real-time voice synthesis using State Space Model (SSM) architecture.

Sonic-3 Specifications:

- 90ms model latency, 190ms end-to-end (Sonic Turbo: 40ms)

- 42 languages with native pronunciation

- Instant voice cloning from 10-15 seconds of audio

- Advanced voice control: Volume, speed, emotion via API and SSML

- Intelligent text handling: Contextual pronunciation of acronyms, dates, addresses

- Lifelike speech quality: Nuanced emotional expression including excitement, empathy, and natural laughter

State-Space Architecture (SSM):

Transformer attention looks back over a context that grows with every generated step. Sonic-3 instead uses an SSM architecture that compresses past context into a fixed-size state, updated at each step. That constant per-step cost is what enables its efficiency and low latency.
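The core recurrence behind state space models fits in a few lines. Below is the generic discrete-time linear SSM, x_t = A x_{t-1} + B u_t and y_t = C x_t, with made-up toy matrices. It is not Cartesia's architecture. Modern SSMs (S4, Mamba and their relatives) add structured or input-dependent parameters on top of this basic form.

```python
from typing import List, Sequence

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix `m` by vector `v`."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def ssm_scan(A: Matrix, B: Matrix, C: Matrix,
             inputs: Sequence[Sequence[float]]) -> List[Vector]:
    """Run x_t = A x_{t-1} + B u_t, y_t = C x_t over an input sequence.

    The state x has a fixed size, so every step costs the same amount of
    work and memory regardless of how many steps came before.
    """
    x = [0.0] * len(A)
    outputs = []
    for u in inputs:
        x = [ax + bu for ax, bu in zip(matvec(A, x), matvec(B, u))]
        outputs.append(matvec(C, x))
    return outputs


# Toy 2-dimensional state: decays old context and mixes in new input.
A = [[0.9, 0.0], [0.1, 0.8]]
B = [[1.0], [0.0]]
C = [[0.0, 1.0]]
print(ssm_scan(A, B, C, [[1.0], [0.0], [0.0]]))
```

The single input impulse at step one keeps echoing through the state in later steps. This is the "contextual memory": the past is carried forward without being stored token by token.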

Trade-off: Smaller voice library than ElevenLabs

Best for: Real-time voice agents, conversational AI, interactive apps

The Enterprise Giants

OpenAI TTS - Major December 2025 updates:

- New model: `gpt-4o-mini-tts-2025-12-15` with substantially improved accuracy and lower word error rates

- 8 voices: alloy, ash, ballad, coral, echo, sage, shimmer, verse (expanded from original 6)

- Enhanced control: Natural language prompts control accent, emotion, intonation, and tone

- Custom Voices: Now available for production applications

- Integrated with ChatGPT and GPT-5.1. No voice cloning. $0.015 per 1,000 characters.

Additional December 2025 Audio Models:

- `gpt-4o-mini-transcribe-2025-12-15` (speech-to-text)

- `gpt-realtime-mini-2025-12-15` (real-time speech-to-speech)

- `gpt-audio-mini-2025-12-15` (Chat Completions API)
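The sketch below illustrates the natural-language style control. It assumes OpenAI's `POST /v1/audio/speech` endpoint and its `instructions` field, which applies to the `gpt-4o-mini-tts` family. The request is built with the standard library but not sent. The dated snapshot listed above could be passed as `model` instead of the alias.

```python
import json
import urllib.request


def build_speech_request(text: str, instructions: str, api_key: str,
                         model: str = "gpt-4o-mini-tts",
                         voice: str = "coral") -> urllib.request.Request:
    """Prepare (but do not send) a request to OpenAI's speech endpoint."""
    payload = {
        "model": model,
        "input": text,
        "voice": voice,
        "instructions": instructions,   # natural-language accent/tone control
        "response_format": "mp3",
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_speech_request(
    text="Your order has shipped and should arrive on Friday.",
    instructions="Warm and upbeat, with a light Irish accent.",
    api_key="YOUR_API_KEY",  # placeholder
)
# To synthesize for real: mp3_bytes = urllib.request.urlopen(req).read()
```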

Google Cloud TTS - 220+ voices across 40+ languages. WaveNet, Standard, and Neural2 voice types. Custom Voice training available. ~$16 per million characters.

Microsoft Azure Speech - The largest library: 400+ neural voices in 140+ languages. Custom Neural Voice for enterprise. Speaking style customization (newscast, customer service, chat). ~$16 per 1M characters.

Amazon Polly - 60+ languages and variants. Neural TTS and Standard voices. SSML and Speech Marks support. Best for AWS users and scalable applications.
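Per-character prices are easier to judge as a per-project figure. The sketch below converts them for a hypothetical 90,000-word book. It assumes list prices of about $15 per million characters for OpenAI (the $0.015 per 1,000 quoted above) and about $16 per million for Google Cloud and Azure neural voices. It also assumes a rough six characters per word, including spaces.

```python
# Assumed list prices in USD per million characters; verify before budgeting.
PRICE_PER_MILLION_CHARS = {
    "OpenAI TTS": 15.0,
    "Google Cloud (WaveNet/Neural2)": 16.0,
    "Azure Neural": 16.0,
}


def estimate_cost(characters: int, price_per_million: float) -> float:
    """Dollar cost of synthesizing `characters` at a per-million price."""
    return characters / 1_000_000 * price_per_million


# Hypothetical workload: 90,000 words at ~6 characters per word.
book_chars = 90_000 * 6
for name, price in PRICE_PER_MILLION_CHARS.items():
    print(f"{name}: ${estimate_cost(book_chars, price):.2f}")
```

At these rates, raw synthesis is a small line item. Editing, review, and mastering dominate the cost of an AI-narrated book.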

Enterprise Platform Comparison Matrix

| Feature | OpenAI TTS | Google Cloud | Azure Speech | Amazon Polly | ElevenLabs |
|---|---|---|---|---|---|
| Voices | 8 | 220+ | 400+ | 60+ | 1000+ |
| Languages | 50+ | 40+ | 140+ | 33 | 73 |
| Voice Cloning | Custom voices | Custom Voice | Custom Neural | ❌ | ✅ Instant |
| SSML Support | ❌ | ✅ Full | ✅ Full | ✅ Full | Partial |
| Streaming | ✅ | ✅ | ✅ | ✅ | ✅ |
| Latency | ~200ms | ~150ms | ~100ms | ~150ms | <100ms |
| Max Characters per Request | 4096 | 5000 | 10000 | 3000 | 5000 |

Compliance & Security Certifications

| Platform | SOC 2 | HIPAA | GDPR | FedRAMP | ISO 27001 |
|---|---|---|---|---|---|
| OpenAI | ✅ | ✅ (Enterprise) | ✅ | ❌ | ✅ |
| Google Cloud | ✅ | ✅ | ✅ | ✅ | ✅ |
| Azure | ✅ | ✅ | ✅ | ✅ | ✅ |
| Amazon | ✅ | ✅ | ✅ | ✅ | ✅ |
| ElevenLabs | ✅ | Contact | ✅ | ❌ | ✅ |

Integration Complexity

| Platform | SDK Languages | Setup Time | Documentation | Support |
|---|---|---|---|---|
| OpenAI TTS | Python, Node, many | <1 hour | Excellent | Chat + Forum |
| Google Cloud | All major | 2-4 hours | Excellent | Tiered |
| Azure Speech | All major | 2-4 hours | Excellent | Tiered |
| Amazon Polly | All major | 1-2 hours | Good | AWS Support |
| ElevenLabs | Python, REST | <30 min | Good | Email + Discord |

The Specialized Players

| Platform | Focus | Best For | Starting Price |
|---|---|---|---|
| Descript | Edit audio by editing text | Podcasters, video creators | Free tier |
| Podcastle | Podcast creation suite | End-to-end podcast production | $11.99/mo |
| Speechify | Reading assistance | Accessibility, document consumption | Free tier |
| Murf.ai | Video voiceovers | Marketing content, explainers | Free tier |
| Play.ht | Voice cloning focus | 900+ voices, 142 languages | Free tier |

TTS Platform Comparison: performance scores (December 2025)

💡 Key Insight: ElevenLabs leads in overall quality, while Hume AI excels at emotional intelligence. Cartesia offers the lowest latency for real-time applications.

Sources: ElevenLabs • Hume AI • Cartesia

Voice Cloning: Replicating Human Voices

This is where things get both exciting and ethically complex.

What Is Voice Cloning?

Voice cloning creates a synthetic voice that replicates a specific person’s voice characteristics—capturing tone, rhythm, accent, and emotional patterns.

December 2025 capabilities:

- 30-90 second samples for high-quality clones

- Emotion-aware multilingual cloning

- Real-time synthesis with preserved personality

- Near-100% accuracy in blind tests

How Voice Cloning Works

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%
flowchart TD
A["Voice Sample (30-90 sec)"] --> B["Feature Extraction"]
B --> C["Voice Embedding"]
C --> D["Neural Voice Model"]
E["New Text Input"] --> D
D --> F["Synthesized Speech"]
F --> G["Cloned Voice Output"]
```

| Stage | What Happens | Technology |
|---|---|---|
| Audio Input | High-quality voice sample uploaded | Preprocessing, noise reduction |
| Feature Extraction | Voice characteristics analyzed | Mel spectrograms, pitch analysis |
| Embedding Creation | Unique “voice fingerprint” created | Encoder networks |
| Model Adaptation | Neural network learns voice patterns | Fine-tuning or zero-shot |
| Synthesis | New text converted to cloned voice | Decoder + vocoder |
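The “voice fingerprint” in the table is typically a fixed-length speaker embedding. Embeddings are commonly compared with cosine similarity, the same measure speaker-verification systems use. The sketch below is a toy. Mean pooling over made-up 3-dimensional features stands in for a trained encoder network, so only the comparison logic carries over to real systems.

```python
import math
from typing import List, Sequence


def embed(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average per-frame feature vectors into one fixed-size embedding.

    A trained encoder network does this in real systems; mean pooling stands in.
    """
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / norm


# Hypothetical per-frame features for short clips from two speakers.
speaker_a_clip1 = [[0.9, 0.1, 0.3], [1.1, 0.2, 0.4]]
speaker_a_clip2 = [[1.0, 0.1, 0.35], [0.95, 0.15, 0.3]]
speaker_b_clip = [[0.1, 0.9, 0.2], [0.2, 1.0, 0.1]]

ref = embed(speaker_a_clip1)
print(cosine_similarity(ref, embed(speaker_a_clip2)))  # close to 1.0
print(cosine_similarity(ref, embed(speaker_b_clip)))   # noticeably lower
```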

Legitimate Use Cases

Entertainment & Media:

- Dubbing films in new languages with original actor’s voice

- Creating audiobooks with author’s voice

- Voice restoration for actors who have lost their voice

- AI NPCs in games with consistent character voices

Accessibility:

- Helping ALS/MND patients preserve their voice before loss

- Creating synthetic voices for those who cannot speak

Business:

- Consistent brand voice across thousands of videos

- Personalized customer service at scale

- Rapid content localization

Personal:

- Preserving the voices of loved ones

- Creating voice messages in your voice while traveling

Voice Cloning Platforms

Sample required vs quality trade-off

| Platform | Sample Needed | Quality | Languages | Real-Time | Price |
|---|---|---|---|---|---|
| ElevenLabs | 30 sec | ★★★★★ | 32+ | ✅ | $5-330/mo |
| Cartesia | 3-15 sec | ★★★★★ | 15 | ✅ | Contact |
| Resemble AI | 25 min | ★★★★★ | Multi | ✅ | Custom |
| Descript | 10 min | ★★★★★ | English | ❌ | $12-24/mo |
| Respeecher | 1-2 hours | ★★★★★ | Multi | ❌ | Enterprise |

Sources: ElevenLabs • Resemble AI

The Dark Side: Deepfakes and Fraud

Here’s the uncomfortable truth. The same technology enabling beautiful applications is also enabling fraud at an unprecedented scale.

December 2025 Statistics (The Numbers Are Staggering):

| Statistic | Source |
|---|---|
| Deepfake files projected: 8 million in 2025 (up from 500K in 2023, a 16-fold increase) | UK Government/Keepnet Labs |
| Global losses from deepfake-enabled fraud: $200+ million in Q1 2025 alone | Keepnet Labs |
| U.S. AI-assisted fraud losses: $12.5 billion in 2025 | Cyble Research |
| Deepfake fraud attempts: up 1,300% in 2024 (from 1/month to 7/day) | Pindrop 2025 Report |
| Synthetic voice attacks in insurance: +475% (2024) | Pindrop |
| Synthetic voice attacks in banking: +149% (2024) | Pindrop |
| Contact center fraud exposure: $44.5 billion potential in 2025 | Pindrop |
| Deepfaked calls projected to increase: 155% in 2025 | Pindrop |
| North America deepfake fraud increase: 1,740% (2022-2023) | DeepStrike Research |
| Average bank loss per voice deepfake incident: ~$600,000 | Group-IB |
| 77% of victims targeted by voice clones reported financial losses | Keepnet Labs |

Why Voice Deepfakes Are So Dangerous:

Think about it: you can verify a suspicious email by checking the sender address. You can reverse-image-search a photo. But when your “mother” calls you crying, saying she needs bail money urgently—your instincts say help, not verify.

Studies show humans can only correctly identify AI-generated voices about 60% of the time—barely better than flipping a coin. Human accuracy detecting high-quality video deepfakes is even worse at just 24.5%. Only 0.1% of people can reliably detect deepfakes.

Common Attack Vectors:

- Executive impersonation: CEO calls CFO requesting urgent wire transfer

- Family emergency scams: “Grandma, I’m in jail and need bail money”

- Authentication bypass: Criminals use cloned voices to pass voice verification

- Political disinformation: Fake audio of political figures

- Retail fraud: Major retailers report over 1,000 AI-generated scam calls per day

⚠️ Real Case: In early 2024, a finance employee in Hong Kong transferred $25 million after a video call with what appeared to be his CFO and colleagues. All of them were deepfakes. The victim company was later identified as the British engineering firm Arup.

Sources: Keepnet Labs Q1 2025, Pindrop 2025 Voice Intelligence Report, DeepStrike Research, American Bar Association

Regulations and Ethics: The Legal Landscape

Governments are racing to catch up with AI voice technology. Here’s where we stand in December 2025. For a broader view of AI ethics and regulations, see the Understanding AI Safety, Ethics and Limitations guide.

Global Regulatory Framework

| Region | Key Regulation | Status | Key Requirements |
|---|---|---|---|
| European Union | AI Act | Active (Aug 2025) | Transparency and labeling required for AI-generated audio (deepfakes) |
| United States | TAKE IT DOWN Act | Signed (May 2025) | Criminalizes publishing non-consensual intimate imagery, including deepfakes |
| United States | FCC Ruling | Active | AI-generated voices count as “artificial” under the TCPA, so robocalls using them require prior consent |
| United States | NO FAKES Act | Proposed | Unauthorized AI replicas illegal |
| United States | State Laws | Varies | 20+ states with deepfake legislation |
| China | Deep Synthesis Regulations | Active | Registration and disclosure required |

EU AI Act - Voice Cloning Provisions

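The EU AI Act does not classify voice cloning as high-risk by default. Instead, Article 50 imposes transparency duties on AI-generated and manipulated audio (deepfakes):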

- February 2, 2025: General provisions, prohibitions on unacceptable risk AI, and AI literacy duties came into effect

- August 2025: Transparency obligations for GPAI providers now active

- November 5, 2025: European Commission launched work on code of practice for marking and labeling AI-generated content in machine-readable formats

- August 2026: Full enforcement of Article 50 (content disclosure and marking)

- Requirements:

- Clear disclosure when content is AI-generated

- Mandatory watermarking for synthetic audio

- Audit trails and abuse monitoring

- Penalties of up to €15 million or 3% of global annual turnover for breaching transparency obligations (up to €35 million or 7% for prohibited practices)

Ethical Best Practices

If you’re using AI voice technology:

- Always obtain explicit consent before cloning any voice

- Use provenance metadata and watermarking (C2PA, SynthID) on all generated content

- Disclose AI usage to listeners/viewers

- Respect deceased individuals’ voice rights

- Educate users about deepfake risks

- Maintain audit trails of all generated content

Protecting Yourself from Voice Deepfakes

Create a “voice vault” with family safe words for verification:

- Choose a code word only family members know

- If you receive an urgent call from “family,” ask for the code word

- Enable multi-factor authentication beyond voice

- Be skeptical of urgent requests via phone

- Call back on known numbers, not ones provided

- Report suspected deepfakes to platforms and authorities

AI Music Generation: Create Songs in Seconds

The AI music revolution is just as profound as the voice revolution—and perhaps even more controversial.

The Numbers

- AI-generated music expected to boost industry revenue by 17.2% in 2025

- 60% of musicians now use AI tools (mastering, composing, artwork)

- Generative AI music market: $2.92 billion in 2025

- Anyone can now create professional-quality music with text prompts

How AI Music Generation Works

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%
flowchart LR
A["Text Prompt"] --> B["Music Understanding"]
B --> C["Style/Genre Selection"]
C --> D["Audio Diffusion Model"]
D --> E["Raw Audio Generation"]
E --> F["Mastering/Enhancement"]
F --> G["Final Track"]
```

| Component | Function | Technology |
|---|---|---|
| Text Encoder | Understand musical intent from prompt | Transformer language models |
| Music Prior | Map text to musical concepts | Trained on vast music libraries |
| Audio Generator | Create actual sound waves | Diffusion models, autoregressive |
| Vocoder/Enhancer | Polish and finalize audio | Neural audio codecs |
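Diffusion models learn to reverse a fixed noising process. The sketch below shows the forward (noising) half in the DDPM formulation, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, with DDPM's linear β schedule from 1e-4 to 0.02 over 1,000 steps. These settings are an illustrative assumption that none of the platforms here documents. Production music models usually diffuse in a compressed latent space rather than on raw samples, and the trained denoising network is omitted.

```python
import math
import random
from typing import List


def linear_alpha_bars(steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t, linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_sample(x0: List[float], alpha_bar: float,
                 rng: random.Random) -> List[float]:
    """Draw x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * Gaussian noise."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


# A short "clean" waveform: one cycle of a sine wave in 16 samples.
clean = [math.sin(2 * math.pi * n / 16) for n in range(16)]
alpha_bars = linear_alpha_bars()
rng = random.Random(0)
for t in (0, 250, 999):
    x_t = noisy_sample(clean, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in x_t[:4]])
```

By the last step, almost no signal remains. Generation runs this in reverse: a trained network starts from pure noise and removes a little noise at each step, guided by the text prompt.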

Suno AI - The Text-to-Music Leader

Suno has exploded in popularity. Think of it as “ChatGPT for music”—describe what you want, and it creates a complete song with vocals, instruments, and production.

December 2025 Milestones:

- Valuation: $2.45 billion (Series C closed Nov 18, 2025, led by Menlo Ventures + NVIDIA NVentures)

- Revenue: Over $100 million ARR

- WMG Settlement: November 2025—Warner Music Group settled their copyright lawsuit. Suno will implement “opt-in” mechanisms for artists.

- Songkick Acquisition: Acquired Warner’s concert-discovery platform as part of the settlement

- Acquisition: WavTool DAW (June 2025)—now offers integrated audio editing

Suno v5 Features (September 2025):

- Songs up to 8 minutes in a single generation (up from 2 minutes)

- Suno Studio: Section-based timeline with “Replace Section,” “Extend,” “Add Vocals,” “Add Instrumentals”

- Remastering modes: Subtle, Normal, and High polish options

- Stem exports: Pro = 2 stems (vocal + instrumental), Premier = 12 stems

- Consistent Personas: Vocal styles like “Whisper Soul,” “Power Praise,” “Retro Diva”

- Improved lyric markers: `[Verse]`, `[Chorus]`, `[Bridge]` now work reliably

December 2025 Updates:

- Enhanced Personas: Apply consistent vocal styles across multiple songs for album creation

- “Hoooks” Feature: Community-driven discovery within the platform (launched October 2025)

- Policy Changes for 2026: Following WMG agreement:

- Subscriber-only monetization (free accounts cannot use music commercially)

- Download limits for paid tiers with optional purchase of additional downloads

- New licensed models expected to surpass Suno v5

🎵 Try This Now: Go to suno.ai, sign in with Google, and enter: “Upbeat indie rock song about chasing dreams, catchy chorus, energetic guitars”. In 30 seconds, you’ll have a complete song with vocals!

Pricing:

| Plan | Price | Credits | Song Length | Stem Downloads | Commercial Use |
|---|---|---|---|---|---|
| Free | $0 | 50/day | 2 min | ❌ | ❌ |
| Pro | $10/mo | 2,500/mo | 4 min | 2 stems | ✅ |
| Premier | $30/mo | 10,000/mo | 4 min | 12 stems | ✅ |

Source: Forbes (Nov 2025), The Guardian

Udio AI - The Quality-Focused Alternative

Udio has taken a different path—focusing on audio quality over feature count. If Suno is “good enough for social media,” Udio is “studio-quality for professionals.”

Why Choose Udio:

- Superior audio fidelity: Cleaner mixes, better instrument separation, warmer vocals

- Stem downloads: Separate vocals, bass, drums, synths—essential for producers

- Audio-to-audio: Upload and remix existing music

- Multi-language vocals: Natural-sounding singing in many languages

Major Development: Universal Music Group Partnership (October 2025)

This is a game-changer. Udio settled copyright litigation with UMG and announced a groundbreaking partnership:

- New UMG/Udio platform launching mid-2026

- Licensed training data from UMG’s catalog—first major label to license for AI training

- Artist opt-in: Creators can license their voice/style for AI generation

- “Walled Garden” model: During transition, downloads are limited; you can stream and share within Udio

- Fingerprinting and filtering: Built-in safeguards against direct replication

What This Means:

Until October 2025, AI music companies faced existential legal threats. The UMG-Udio deal creates a template for legal AI music generation: license the training data, compensate artists, and build ethical AI. Expect other labels to follow.

Warner Music Group Partnership (November 2025):

Following the UMG deal, Udio also partnered with Warner Music Group in November 2025, furthering the industry’s shift toward licensed AI music generation. Key terms mirror the UMG agreement.

Platform Changes (Late 2025):

- Downloads disabled temporarily; 48-hour window provided for existing song downloads

- Terms of service modified for transition to licensed model

- Music Artists Coalition (MAC) called for fair compensation, mandatory “meaningful” consent from artists, and transparency regarding settlement details

Source: Universal Music Group (Oct 2025), Music Business Worldwide, Udio (Nov 2025)

🎧 Pro Tip: If you need to edit stems in a DAW (Ableton, Logic, FL Studio), choose Udio. If you want quick complete songs for content, choose Suno.

Suno vs Udio Comparison

| Feature | Suno v5 | Udio 1.5 |
|---|---|---|
| Max Song Length | 8 min | 4-5 min |
| Vocal Quality | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Instrumental Quality | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Genre Flexibility | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Stem Downloads | ✅ (2 stems Pro, 12 stems Premier) | ✅ |
| DAW Integration | ✅ (WavTool) | ❌ |
| Commercial Rights | ✅ Paid plans | ✅ Paid plans |
| Audio-to-Audio | ⚠️ Limited | ✅ |

Other AI Music Tools

| Tool | Focus | Best For | Pricing | Key Features | Limitations |
|---|---|---|---|---|---|
| AIVA | Classical/film scoring | Composers, soundtracks | Free-$49/mo | Emotional soundtracks, full ownership | Limited to specific genres |
| Soundraw | Royalty-free music | Video creators | $16.99/mo | Customizable length/tempo, stems | No vocals |
| Boomy | Quick social tracks | TikTok/YouTube Shorts | Free tier | Fast generation, distribution | Basic quality |
| Mubert | Generative loops/ambient | Background music, apps | Free-$69/mo | Real-time generation, API | Repetitive patterns |
| Stable Audio | Experimental AI audio | Creative exploration | Free tier | Open weights available | Requires GPU |
| Beatoven.ai | Video soundtracks | Filmmakers, marketers | Free-$20/mo | Mood-based generation | Limited customization |
| Mureka | Original melodies/lyrics | Royalty-free originals | Free tier | Unique compositions | Smaller catalog |
| ACE Studio | Lyric-to-vocal-melody | Song prototyping | Subscription | Professional vocals | Higher learning curve |
| Meta MusicGen | Open-source sketches | Developers | Free | Self-hostable, customizable | 30-sec limit, no vocals |
| Loudly | Social media music | Content creators | $7.99/mo | Quick generation, stems | Limited genres |
| Ecrett Music | Royalty-free BGM | YouTubers | $4.99/mo | Scene-based creation | Basic editing |
| Amper (Shutterstock) | Enterprise music | Brands, agencies | Enterprise | Full licensing, API | High cost |
| Riffusion | Experimental | Developers, researchers | Free | Visual spectrogram approach | Experimental quality |
| Harmonai | Open-source | Researchers | Free | Dance Diffusion, open | Technical expertise needed |
| Splash Music | Gaming/interactive | Game developers | Contact | Real-time generation | Specialized use |

The Copyright Question

Major record labels sued Suno and Udio in 2024 for training on copyrighted music. Udio’s UMG settlement creates a path forward, but the legal landscape remains complex:

- Licensed training data becomes crucial for legal operation

- Commercial users should use platforms with clear licensing terms

- “Royalty-free” doesn’t always mean “copyright-free”

- Purely AI-generated songs, without meaningful human authorship, cannot currently be copyrighted in the US

Practical Applications: Creating with AI Audio

Let’s get practical. Here are real workflows for different use cases.

| Use Case | Recommended Tools | Key Strength |
|---|---|---|
| Audiobook Production | ElevenLabs or Play.ht | Long-form consistency |
| Podcast Creation | Podcastle or Descript | End-to-end workflow |
| Real-Time Voice Agents | Cartesia or ElevenLabs | <100ms latency |
| Empathic AI Characters | Hume AI or ElevenLabs | Emotional intelligence |
| Music with Vocals | Suno v5 or Udio | Best overall quality |
| Pro Music Production | Udio 1.5 or Suno | Stem downloads |
| Accessibility | Speechify or Azure Speech | Reading assistance |
| Video Voiceovers | Murf.ai or Descript | Template library |

Audiobook Production

Traditional vs AI Audiobook Production:

| Aspect | Traditional | AI-Generated |
|---|---|---|
| Time | 2-6 hours per finished hour | 1-2 hours per finished hour |
| Cost | $200-400/hour (narrator) | $50-100/hour (AI + editing) |
| Consistency | Dependent on narrator stamina | Perfectly consistent |
| Revisions | Expensive re-records | Instant regeneration |
| Languages | One per narrator | Instant translation |

Recommended Workflow:

- Prepare manuscript (clean formatting, phonetic spellings for unusual words)

- Generate chapter by chapter (not all at once)

- Review for audio artifacts and mispronunciations

- Use SSML tags for emphasis and pauses

- Master and export final audio
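Even chapter-by-chapter generation can exceed per-request character limits. The platform comparison matrix puts these between 3,000 and 10,000 characters, depending on the platform. The sketch below groups whole sentences into chunks under a limit so no request cuts off mid-sentence. Its regex sentence splitter is a simplifying assumption and will mis-split abbreviations such as “Dr.”

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int) -> List[str]:
    """Group whole sentences into chunks no longer than max_chars."""
    # Split after ., !, or ? followed by whitespace (naive sentence boundary).
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if len(sentence) > max_chars:
            raise ValueError(f"sentence longer than {max_chars} chars: {sentence[:40]}...")
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate          # sentence fits in the current chunk
        else:
            chunks.append(current)       # close the chunk, start a new one
            current = sentence
    if current:
        chunks.append(current)
    return chunks


chapter = ("It was late. The rain had not stopped for three days. "
           "Nobody in the village remembered a storm like it!")
for i, chunk in enumerate(chunk_text(chapter, max_chars=60), start=1):
    print(i, len(chunk), chunk)
```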

Podcast Production with AI

The Podcastle/Descript Workflow:

- Script: Write or generate with AI assistance

- Record: Use platform’s recording tools

- Enhance: Apply “Magic Dust” or “Studio Sound” for professional quality

- Edit: Edit the transcript, not the waveform—the audio follows

- Add Voice: Clone your voice for pickup recordings without re-recording

- Export: Distribute to podcast platforms

Cost Savings: What used to require a $500+ audio setup and hours of post-production can now be done with a laptop and a $20/month subscription.

Video Voiceovers

The Murf.ai/ElevenLabs Workflow:

- Write script optimized for spoken word (shorter sentences)

- Select voice matching your brand and content

- Generate multiple takes with slight variations

- Download and sync to video timeline

- Add background music (Suno/Udio AI-generated or stock)

Pro Tip: Always generate 2-3 versions of each section. AI output varies slightly each time—pick the best.

Gaming & Interactive Entertainment

Dynamic NPC Voice Generation:

Game developers are using AI voice to create thousands of unique NPC voices:

- Character Design: Define personality traits, accent, age

- Voice Profile: Create or select matching voice in ElevenLabs/Hume AI

- Dynamic Generation: Generate dialogue on-the-fly based on player actions

- Emotional Context: Use Hume AI for emotionally responsive characters

Use Cases:

- Procedurally generated quest givers

- Reactive enemy taunts

- Companion characters that remember player choices

- Localization across 40+ languages from single source

For more on building AI agents that interact with users, see the AI Agents guide.

Cost Impact: AAA games spending $500K+ on voice acting could cut costs for secondary characters by an estimated 70-80%.

E-Learning & Course Creation

Scaling Educational Content:

| Content Type | Traditional Cost | AI Cost | Time Savings |

|---|---|---|---|

| 1-hour course narration | $300-500 | $20-40 | 80% |

| Multi-language versions | $300/language | $5/language | 95% |

| Content updates | Full re-record | Regenerate changed sections only | 99% |

Workflow for Course Creators:

- Script your course in plain text with SSML markers

- Generate narration with consistent voice (ElevenLabs “Adam” for authority)

- Sync with slide timings in your LMS

- Generate translations for global audiences

- Update sections as content evolves—regenerate only changed parts
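To regenerate only the changed parts, one approach is to fingerprint each section's script and compare it with the fingerprints saved after the previous build. This sketch assumes sections are keyed by an ID you choose (such as a lesson or slide number) and that fingerprints are stored in a JSON "manifest" file; that manifest is a hypothetical convention of this example, not an LMS or TTS platform feature.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, List


def fingerprint(text: str) -> str:
    """Stable SHA-256 digest of a section's script text."""
    # Collapse whitespace so re-wrapping a paragraph does not count as a change.
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def load_manifest(path: Path) -> Dict[str, str]:
    """Read the section ID -> fingerprint map from the previous build, if any."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def changed_sections(sections: Dict[str, str], previous: Dict[str, str]) -> List[str]:
    """IDs of sections that are new or whose text changed since the last build."""
    return [sid for sid, text in sections.items() if previous.get(sid) != fingerprint(text)]


def save_manifest(sections: Dict[str, str], path: Path) -> None:
    """Record current fingerprints; call only after regeneration succeeded."""
    digests = {sid: fingerprint(text) for sid, text in sections.items()}
    path.write_text(json.dumps(digests, indent=2), encoding="utf-8")
```

Keeping `save_manifest` separate from `changed_sections` means a failed TTS run does not mark sections as done, so they are picked up again on the next build.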

Social Media Content at Scale

Creating Consistent Brand Voice:

Content creators producing 30+ videos/month use AI voice for:

- Faceless YouTube channels: Consistent narrator across all videos

- TikTok/Reels: Quick voiceover generation for trending formats

- Podcast clips: Repurpose long-form into bite-sized content

Workflow:

- Write scripts in batch (10-20 at a time)

- Generate all audio in one session

- Combine with AI-generated music (Suno) for complete package

- Schedule across platforms

AI Voice for Specific Industries

Healthcare & Medical

Patient Communication Systems:

- Appointment reminders with natural, calming voices

- Medication instructions in patient’s preferred language

- Post-procedure care instructions

- Mental health chatbots with empathetic voices (Hume AI)

Medical Transcription:

- AI-assisted dictation with specialized medical vocabulary

- Automatic clinical note generation

- HIPAA-compliant voice processing

Accessibility Applications:

- Reading assistance for visually impaired patients

- Prescription label readers

- Telemedicine voice interfaces

💊 Case Study: A regional hospital network implemented AI voice for appointment reminders, reducing no-shows by 23% and saving $1.2M annually.

Education & E-Learning

Scalable Educational Content:

- Narrated textbooks and study materials

- Multi-language educational resources

- Interactive AI tutors with emotional intelligence

- Personalized learning companions

Accessibility:

- Reading assistance for dyslexic students

- Audio versions of all written content

- Adjustable speaking rates and voice preferences

Language Learning:

- Native speaker pronunciation examples

- Conversation practice with AI partners

- Accent training and feedback

Financial Services

Customer Service Automation:

- 24/7 voice-enabled banking assistance

- Account balance and transaction inquiries

- Fraud alert notifications with voice verification

Compliance & Security:

- Voice biometric authentication (with deepfake detection)

- Recorded transaction confirmations

- Regulatory disclosure readings

Challenges:

- Voice deepfake risks require multi-factor authentication

- Compliance with financial regulations

- Customer preference for human agents for complex issues

⚠️ Security Note: Financial institutions must implement liveness detection and never authorize transactions on voice alone.

Retail & Customer Service

IVR System Modernization:

- Natural-sounding menu navigation

- Conversational order status updates

- Personalized product recommendations

Brand Voice Consistency:

- Consistent voice across all customer touchpoints

- Multi-language support without additional staff

- Peak call handling with AI-first response

Implementation Metrics:

| Metric | Before AI | After AI | Improvement |

|---|---|---|---|

| Average wait time | 4:30 min | 0:45 min | 83% reduction |

| Call resolution | 67% | 78% | 16% improvement |

| Customer satisfaction | 3.2/5 | 4.1/5 | 28% improvement |

| Operating cost | $12/call | $0.50/call | 96% reduction |

Getting Started: Your First AI Voice and Music

Ready to try this yourself? Here’s a step-by-step tutorial.

Try This Now: Create Your First AI Voice

Step 1: Text-to-Speech with ElevenLabs (Free)

- Go to elevenlabs.io

- Sign up for free account (10,000 characters/month)

- Navigate to “Speech Synthesis”

- Type: “Welcome to the future of audio. This voice was generated by artificial intelligence in under one second. Can you tell the difference?”

- Select a voice (try “Rachel” for warmth or “Adam” for narration)

- Click “Generate” and download

Step 2: Generate Music with Suno (Free)

- Go to suno.ai

- Sign in with Google/Discord

- Click “Create”

- Enter prompt: “Upbeat electronic podcast intro, modern production, 15 seconds, energetic synths, no vocals”

- Generate and select best version

- Download and combine with your voiceover

Congratulations! You just created professional-quality audio content that would have cost hundreds of dollars and hours of work just a few years ago.

Quick Start Settings

| Project Type | Platform | Voice/Style | Settings |

|---|---|---|---|

| Narration | ElevenLabs | Rachel, Adam | Stability 0.5, Similarity 0.75 |

| Podcast | ElevenLabs | Josh, Bella | Stability 0.6, Similarity 0.7 |

| Audiobook | ElevenLabs | Antoni, Elli | Stability 0.7, Similarity 0.85 |

| Explainer Video | OpenAI TTS | fable, nova | Default settings |

| Background Music | Suno | Instrumental prompt | Specify “no vocals” |
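If you call ElevenLabs from code rather than the web app, the ElevenLabs rows of this table map onto the `voice_settings` object used in the API quick start later in this guide (`stability` and `similarity_boost`). The sketch keeps those values in one place; the preset names are hypothetical labels, and voice IDs are deliberately left out because you should look them up in your own voice library.

```python
from typing import Any, Dict

# Values copied from the Quick Start Settings table above (ElevenLabs rows).
ELEVENLABS_PRESETS: Dict[str, Dict[str, float]] = {
    "narration": {"stability": 0.5, "similarity_boost": 0.75},
    "podcast": {"stability": 0.6, "similarity_boost": 0.7},
    "audiobook": {"stability": 0.7, "similarity_boost": 0.85},
}


def build_tts_payload(text: str, project_type: str,
                      model_id: str = "eleven_multilingual_v2") -> Dict[str, Any]:
    """Build a JSON body for ElevenLabs' text-to-speech endpoint from a preset."""
    try:
        settings = ELEVENLABS_PRESETS[project_type]
    except KeyError:
        raise ValueError(f"Unknown project type: {project_type!r}") from None
    # Copy the preset so callers cannot accidentally mutate the shared table.
    return {"text": text, "model_id": model_id, "voice_settings": dict(settings)}
```

The returned dictionary can be passed as the `json=` body of the same POST request shown in the API quick start.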

Common Mistakes to Avoid

- ❌ Generating entire books in one pass (do chapters)

- ❌ Using voice cloning without consent

- ❌ Ignoring pronunciation issues (use phonetic spelling)

- ❌ Choosing mismatched voice for content

- ❌ Forgetting to add emotional cues in text

- ❌ Not reviewing for audio artifacts before publishing

Cost-Effective Strategies

- Use free tiers for prototyping, paid for final production

- Batch generate during planning phase, not at deadline

- Prioritize clean, high-quality samples over longer noisy ones for voice cloning

- Combine platforms: Free TTS for drafts, premium for finals

- Generate music with AI, edit in DAW for custom needs

AI Voice & Audio Pricing

Monthly pricing as of December 2025

| Platform | Free | Starter | Pro | Notes |

|---|---|---|---|---|

| ElevenLabs | 10K chars | $5 | $22-99 | Best overall |

| OpenAI TTS | ❌ | $0.015/1K | $0.030/1K | API only |

| Hume AI | ✅ Test | Contact | Enterprise | Emotion AI |

| Cartesia | ✅ Yes | Contact | Contact | Low latency |

| Suno | 50 credits/day | $10/mo | $30/mo | Music gen |

| Udio | ✅ Limited | ~$10/mo | ~$30/mo | Music stems |

| Podcastle | ✅ Yes | $11.99 | $23.99 | Podcasts |

| Descript | ✅ Yes | $16 | $30 | Edit by text |

Sources: ElevenLabs Pricing • Suno Pricing

Complete Pricing & Cost Analysis

Text-to-Speech Platform Pricing Comparison

| Platform | Free Tier | Starter | Pro | Enterprise | Per Character Cost |

|---|---|---|---|---|---|

| ElevenLabs | 10K chars/mo | $5/mo (30K) | $22/mo (100K) | $99/mo (500K) | $0.00018-0.0003 |

| OpenAI TTS | None | Pay-as-go | Pay-as-go | Custom | $0.015/1K chars |

| Google Cloud | 1M chars/mo | Pay-as-go | Pay-as-go | Custom | $0.000016/char |

| Azure Speech | 500K/mo | Pay-as-go | Pay-as-go | Custom | $0.000016/char |

| Cartesia | 10K chars/mo | $29/mo | $99/mo | Custom | Contact sales |

| Hume AI | Trial | $20/mo | Custom | Custom | Token-based |

| Play.ht | 12.5K words/mo | $39/mo | $99/mo | Custom | ~$0.002/word |
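Per-character rates are easier to compare once a project is converted into characters. As a rough rule of thumb (an assumption, not a platform figure), one finished hour of narration is about 9,000 words at 150 words per minute, or roughly 54,000 characters at about 6 characters per word including spaces. The sketch applies the pay-as-you-go rates and free allowances as listed in the table above; it ignores tier differences, premium-voice surcharges, and taxes, so check current pricing before budgeting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PayAsYouGoRate:
    name: str
    usd_per_million_chars: float
    free_chars_per_month: int = 0


# Rates and free allowances as listed in the comparison table above.
RATES = [
    PayAsYouGoRate("OpenAI TTS", 15.0),
    PayAsYouGoRate("Google Cloud", 16.0, free_chars_per_month=1_000_000),
    PayAsYouGoRate("Azure Speech", 16.0, free_chars_per_month=500_000),
]


def chars_for_audio_hours(hours: float, wpm: int = 150, chars_per_word: float = 6.0) -> int:
    """Rough character count for a length of narration (assumed speaking rate)."""
    return round(hours * 60 * wpm * chars_per_word)


def estimate_monthly_cost(chars: int, rate: PayAsYouGoRate) -> float:
    """Estimated USD for one month's usage after the free allowance is used up."""
    billable = max(0, chars - rate.free_chars_per_month)
    return billable / 1_000_000 * rate.usd_per_million_chars
```

Under these assumptions a ten-hour audiobook is about 540,000 characters, which works out to roughly $8.10 on OpenAI, $0 on Google Cloud (inside the free allowance), and about $0.64 on Azure for a single month.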

AI Music Platform Pricing

| Platform | Free | Pro | Premier/Max | Approx Per Song |

|---|---|---|---|---|

| Suno | 50 credits/day | $10/mo (2,500) | $30/mo (10,000) | $0.04-0.12 |

| Udio | Limited | $10/mo | $30/mo | $0.03-0.10 |

| AIVA | 3 downloads/mo | $15/mo | $49/mo | N/A |

| Soundraw | Limited | $16.99/mo | $29.99/mo | N/A (unlimited generations) |

| Boomy | Unlimited free | $9.99/mo | N/A | N/A |

Cost Comparison: Human vs AI Production

| Project Type | Human Cost | AI Cost | Savings | Time Savings |

|---|---|---|---|---|

| 1-hour audiobook narration | $200-400 | $20-50 | 75-90% | 60-80% |

| 30-second commercial voiceover | $500-2000 | $5-20 | 95-99% | 90% |

| 3-minute podcast intro music | $200-500 | $1-5 | 99% | 95% |

| 10-video voiceover series | $1500-3000 | $50-100 | 95% | 80% |

| Game NPC voices (100 lines) | $2000-5000 | $50-200 | 95% | 85% |

| Multi-language localization (5 langs) | $5000+ | $100-300 | 95% | 90% |

ROI Calculator Example

Scenario: Content creator producing 20 videos/month with voiceovers

| Expense | Traditional | With AI | Annual Savings |

|---|---|---|---|

| Voiceover talent | $100/video | $2/video | $23,520 |

| Background music | $50/video | $1/video | $11,760 |

| Production time | 4 hrs/video | 1 hr/video | 720 hours |

| Total Annual | $36,000 | $720 | $35,280 |
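The annual figures in this table follow from 20 videos a month over 12 months (240 videos). A minimal sketch that reproduces them, so you can substitute your own volume and per-video rates:

```python
from typing import Dict, Tuple

# Per-video figures from the ROI table: (traditional, with AI).
LINE_ITEMS: Dict[str, Tuple[float, float]] = {
    "voiceover_usd": (100, 2),
    "music_usd": (50, 1),
    "production_hours": (4, 1),
}


def annual_roi(videos_per_month: int = 20, months: int = 12) -> Dict[str, tuple]:
    """Return {item: (traditional, with_ai, savings)} totals for one year."""
    n = videos_per_month * months
    rows = {
        name: (trad * n, ai * n, (trad - ai) * n)
        for name, (trad, ai) in LINE_ITEMS.items()
    }
    # Like the table's "Total Annual" row, the total covers dollar items only.
    dollar_rows = [row for name, row in rows.items() if name.endswith("_usd")]
    rows["total_usd"] = tuple(sum(column) for column in zip(*dollar_rows))
    return rows
```

With the defaults, `annual_roi()["total_usd"]` is `(36000, 720, 35280)`, matching the table; at 30 videos a month it becomes `(54000, 1080, 52920)`.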

Troubleshooting Common Issues

Text-to-Speech Problems

| Problem | Likely Cause | Solution |

|---|---|---|

| Mispronounced words | Uncommon names, technical terms | Use phonetic spelling: “Kubernetes” → “koo-ber-net-eez” |

| Robotic tone | Text lacks emotional context | Add punctuation, emotion tags [excited], or conversational phrases |

| Choppy audio | Input text too long | Break into paragraphs of 500-1000 characters |

| Wrong word emphasis | Ambiguous sentences | Use SSML <emphasis> tags or rewrite sentence |

| Audio artifacts/glitches | Complex phonetics or rapid speech | Reduce speed, regenerate, try different voice |

| Inconsistent pacing | Mixed sentence structures | Normalize sentence lengths, add break tags |

| Background noise in output | Platform processing issue | Download higher quality format, regenerate |
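The phonetic-spelling fix for mispronounced words is easiest to apply consistently as a preprocessing step before text reaches the TTS engine. A sketch, assuming a respelling map you maintain yourself (the entries below are examples, not a platform dictionary); it matches whole words with exact case, so `APIs` or `kubernetes` are left alone unless you add those forms too.

```python
import re
from typing import Dict

# Example respellings; extend with the terms your chosen voice trips over.
RESPELLINGS: Dict[str, str] = {
    "Kubernetes": "koo-ber-net-eez",
    "nginx": "engine-x",
    "PyTorch": "pie-torch",
}


def apply_respellings(text: str, respellings: Dict[str, str] = RESPELLINGS) -> str:
    """Replace whole-word, case-sensitive occurrences of each term with its respelling."""
    if not respellings:
        return text
    # Longest terms first, so a longer term wins over a shorter one it contains.
    terms = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b")
    return pattern.sub(lambda m: respellings[m.group(1)], text)
```

Keep the original text for captions and transcripts; only the copy sent to the TTS engine should contain the respellings.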

Voice Cloning Issues

| Problem | Likely Cause | Solution |

|---|---|---|

| Clone doesn’t match original | Poor source audio quality | Re-record in quiet environment with quality mic |

| Accent drift | Insufficient training samples | Increase sample to 60-90 seconds with varied content |

| Emotional flatness | Training sample lacks expression | Include happy, serious, and questioning tones in sample |

| Background noise | Noisy training recording | Apply noise reduction before upload, re-record |

| Pronunciation errors | Limited training phonemes | Include words with varied vowel/consonant patterns |

| Clone quality degrades | Platform model updates | Regenerate voice with current model version |

AI Music Generation Issues

| Problem | Likely Cause | Solution |

|---|---|---|

| Genre doesn’t match | Vague prompt | Be specific: “80s synth-pop” not “retro music” |

| Lyrics don’t fit melody | Missing structure markers | Use [Verse], [Chorus], [Bridge] markers |

| Audio quality issues | Overly complex arrangement | Simplify prompt, reduce number of instruments |

| Song cuts off early | Hit generation length limit | Use extend feature, upgrade to Pro plan |

| Wrong instruments | Prompt misinterpretation | Explicitly list instruments: “acoustic guitar, drums, bass” |

| Vocals don’t match style | Conflicting prompt elements | Ensure genre and vocal style align |

| Repetitive sections | Prompt too short | Add more descriptive details, structure markers |

API Integration Issues

| Problem | Likely Cause | Solution |

|---|---|---|

| Rate limiting (429) | Too many requests | Implement exponential backoff, batch requests |

| Authentication failed | Invalid/expired API key | Regenerate key, check environment variables |

| Audio format errors | Wrong encoding specified | Verify format (mp3/wav), sample rate, bit depth |

| High latency | Synchronous processing | Use streaming endpoints for real-time needs |

| Character encoding | Unicode handling issues | Ensure UTF-8 encoding throughout pipeline |

| Large file failures | Request size limits | Chunk large texts, process in segments |
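Both the "choppy audio" fix (paragraphs of 500-1000 characters) and the "large file failures" fix come down to splitting text at natural boundaries before sending it. A minimal sketch that packs whole sentences into chunks up to a character limit; the sentence regex is a simple heuristic (it will also split after abbreviations such as "Dr."), and the 1,000-character default comes from the troubleshooting advice above rather than from any platform's documented limit.

```python
import re
from typing import List

# Heuristic sentence boundary: whitespace that follows ".", "!" or "?".
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def _split_long(sentence: str, max_chars: int) -> List[str]:
    """Fallback for an over-long sentence: split it on spaces."""
    parts: List[str] = []
    current = ""
    for word in sentence.split():
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            parts.append(current)
            current = word
    if current:
        parts.append(current)
    return parts


def chunk_text(text: str, max_chars: int = 1000) -> List[str]:
    """Pack whole sentences into chunks of at most max_chars characters.

    A single word longer than max_chars is kept intact as its own chunk.
    """
    chunks: List[str] = []
    current = ""
    for sentence in SENTENCE_END.split(text.strip()):
        pieces = [sentence] if len(sentence) <= max_chars else _split_long(sentence, max_chars)
        for piece in pieces:
            candidate = f"{current} {piece}" if current else piece
            if len(candidate) <= max_chars or not current:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks
```

Send the chunks in order and concatenate the resulting audio; splitting at sentence ends keeps the prosody of each sentence intact.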

For Developers: API Integration Guide

ElevenLabs API Quick Start

```python
import os

import requests

# Read the key from the environment instead of hard-coding it in source.
ELEVENLABS_API_KEY = os.getenv("ELEVENLABS_API_KEY", "your_api_key_here")
VOICE_ID = "21m00Tcm4TlvDq8ikWAM"  # Rachel voice


def generate_speech(text: str, voice_id: str = VOICE_ID) -> bytes:
    """Generate speech from text using ElevenLabs API."""
    url = f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"
    headers = {
        "Accept": "audio/mpeg",
        "xi-api-key": ELEVENLABS_API_KEY,
        "Content-Type": "application/json",
    }
    data = {
        "text": text,
        "model_id": "eleven_multilingual_v2",
        "voice_settings": {
            "stability": 0.5,
            "similarity_boost": 0.75,
            "style": 0.3,
            "use_speaker_boost": True,
        },
    }
    # A timeout keeps a stalled request from hanging the script indefinitely.
    response = requests.post(url, json=data, headers=headers, timeout=60)
    response.raise_for_status()  # raises on 401, 429, 5xx, etc.
    return response.content


# Usage
audio_bytes = generate_speech("Hello, this is AI-generated speech!")
with open("output.mp3", "wb") as f:
    f.write(audio_bytes)
```

OpenAI TTS API

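```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def generate_openai_speech(text: str, voice: str = "alloy",
                           path: str = "openai_output.mp3") -> None:
    """Generate speech using OpenAI's TTS API and save it to `path`."""
    # with_streaming_response writes audio to disk as it arrives; calling
    # stream_to_file on a plain (non-streaming) response is deprecated.
    with client.audio.speech.with_streaming_response.create(
        model="gpt-4o-mini-tts-2025-12-15",
        voice=voice,  # e.g. alloy, ash, ballad, coral, echo, fable, nova, onyx, sage, shimmer, verse
        input=text,
        response_format="mp3",
    ) as response:
        response.stream_to_file(path)


# Usage
generate_openai_speech("Welcome to OpenAI text-to-speech!", voice="nova")
```

Streaming Audio for Low Latency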

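```python
import base64
import json
import os

import websocket  # provided by the websocket-client package

ELEVENLABS_API_KEY = os.getenv("ELEVENLABS_API_KEY", "your_api_key_here")


def stream_elevenlabs_audio(text: str, voice_id: str) -> bytes:
    """Stream audio in real time from ElevenLabs' input-streaming WebSocket."""
    ws_url = f"wss://api.elevenlabs.io/v1/text-to-speech/{voice_id}/stream-input"
    audio_chunks = []

    def on_message(ws, message):
        data = json.loads(message)
        # The final message may carry "audio": null, so test the value, not just the key.
        if data.get("audio"):
            audio_chunks.append(base64.b64decode(data["audio"]))
        if data.get("isFinal"):
            ws.close()

    def on_open(ws):
        # The first message initializes the stream: a single space plus settings.
        ws.send(json.dumps({
            "text": " ",
            "voice_settings": {"stability": 0.5, "similarity_boost": 0.75},
        }))
        ws.send(json.dumps({"text": text + " "}))  # text chunks should end with a space
        ws.send(json.dumps({"text": ""}))  # an empty string signals end of input

    ws = websocket.WebSocketApp(
        ws_url,
        header={"xi-api-key": ELEVENLABS_API_KEY},
        on_message=on_message,
        on_open=on_open,
    )
    ws.run_forever()
    return b"".join(audio_chunks)
```

Rate Limits and Best Practices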

| Platform | Free Tier Limits | Pro Tier Limits | Best Practices |

|---|---|---|---|

| ElevenLabs | 10K chars, limited requests | 100K+ chars, higher RPM | Cache outputs, batch similar requests |

| OpenAI TTS | Standard rate limits | Higher RPM with tier | Use streaming for long text |

| Google Cloud | 1M chars/mo, 1000 RPM | Unlimited, higher RPM | Implement request queuing |

| Azure | 500K/mo, 20 RPM | Custom limits | Use regional endpoints for latency |
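For the "implement request queuing" advice, a client-side limiter that spaces out calls to stay under a requests-per-minute budget is often enough for batch jobs. This is a single-threaded sketch (not safe to share across threads as written); the 20 RPM figure in the usage note mirrors the Azure free-tier row above, and the injectable `clock` and `sleep` parameters exist only to make the class testable.

```python
import time
from collections import deque
from typing import Callable, Deque


class RpmLimiter:
    """Sliding-window limiter: at most `rpm` calls in any 60-second window."""

    def __init__(self, rpm: int,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if rpm < 1:
            raise ValueError("rpm must be at least 1")
        self.rpm = rpm
        self._clock = clock
        self._sleep = sleep
        self._calls: Deque[float] = deque()

    def wait(self) -> None:
        """Block until another request is allowed, then record it."""
        now = self._clock()
        # Forget calls that have left the 60-second window.
        while self._calls and now - self._calls[0] >= 60:
            self._calls.popleft()
        if len(self._calls) >= self.rpm:
            # Sleep until the oldest call in the window expires, then drop it.
            self._sleep(60 - (now - self._calls[0]))
            self._calls.popleft()
            now = self._clock()
        self._calls.append(now)
```

Usage: create `limiter = RpmLimiter(20)` once, then call `limiter.wait()` immediately before each request (for example, before each `generate_speech(...)` call).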

Error Handling with Retry Logic

```python
import time
from functools import wraps
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(max_retries: int = 3, base_delay: float = 1.0,
                       retry_on: Tuple[Type[BaseException], ...] = (Exception,)):
    """Decorator for retrying API calls with exponential backoff.

    Waits base_delay, then 2x, 4x, ... between attempts and re-raises the last
    error after max_retries failed attempts. Narrow `retry_on` (for example to
    requests.exceptions.RequestException) so programming errors are not retried.
    """
    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(max_retries):
                try:
                    return func(*args, **kwargs)
                except retry_on as e:
                    if attempt == max_retries - 1:
                        raise
                    delay = base_delay * (2 ** attempt)
                    print(f"Attempt {attempt + 1} failed: {e}. Retrying in {delay}s...")
                    time.sleep(delay)
            # Only reachable when max_retries < 1.
            raise RuntimeError("max_retries must be at least 1")
        return wrapper
    return decorator


@retry_with_backoff(max_retries=3, base_delay=2.0)
def generate_speech_with_retry(text: str) -> bytes:
    return generate_speech(text)  # from the ElevenLabs quick start above
```

Voice Cloning Quality Guide

Recording the Perfect Sample

Equipment Recommendations:

| Quality Level | Microphone | Environment | Duration | Quality Score |

|---|---|---|---|---|

| Basic | Phone mic | Quiet room | 30 sec | ⭐⭐ |

| Good | USB condenser ($50-150) | Treated room | 60 sec | ⭐⭐⭐⭐ |

| Professional | XLR + interface ($200+) | Studio/booth | 90+ sec | ⭐⭐⭐⭐⭐ |

Recommended Budget Microphones:

- Blue Yeti ($100): Great all-around USB mic

- Audio-Technica AT2020USB+ ($150): Professional quality USB

- Rode NT-USB Mini ($100): Compact, broadcast quality

- Samson Q2U ($70): Budget-friendly hybrid USB/XLR

Recording Checklist

✅ Environment:

- Record in a quiet space (no AC, traffic, echoes)

- Use soft furnishings to reduce reflections

- Consider closet recording for improvised booth

- Test for background noise before recording

✅ Technique:

- Position mic 6-12 inches from mouth

- Use pop filter to reduce plosives

- Maintain consistent distance throughout

- Speak at natural pace and volume

✅ Content:

- Include varied sentence types (statements, questions, exclamations)

- Use natural emotional expressions

- Include pauses and breaths naturally

- Read content similar to intended use case

Sample Content Script Template

```text
Hello, I'm recording a voice sample for AI cloning.
This is my natural speaking voice.
Let me demonstrate some different tones.
First, a statement: The weather today is beautiful.
Now a question: Have you ever visited Paris in spring?
And excitement: I can't believe we won the championship!
Here's a longer passage to capture my natural rhythm...
[Continue with 2-3 paragraphs of natural content]
```

Platform-Specific Requirements

| Platform | Min Duration | Max Duration | Format | Special Requirements |

|---|---|---|---|---|

| ElevenLabs | 30 sec | 30 min | MP3/WAV | High quality = longer samples |

| Cartesia | 10 sec | 60 sec | WAV | State-space model (SSM) needs less data |

| Play.ht | 30 sec | 5 min | MP3/WAV | Supports audio enhancement |

| Descript | 10 min | 30 min | WAV | Needs longer for Overdub |

| Resemble AI | 25 samples | Unlimited | WAV | Script-based recording |

Quality Optimization Tips

- Pre-process your audio: Remove background noise using Audacity or Adobe Podcast

- Normalize levels: Ensure consistent volume throughout

- Export in highest quality: WAV 44.1kHz 16-bit minimum

- Test before committing: Clone with sample, evaluate, re-record if needed

- Update periodically: Voices change over time; refresh samples annually
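Before uploading, you can check a sample against the duration and format guidance above with Python's standard-library `wave` module. This sketch only inspects uncompressed PCM WAV files (MP3 would need a decoder) and only measures levels for 16-bit audio. The 30-second and 44.1 kHz defaults come from this guide; the -1 dBFS clipping and -20 dBFS "too quiet" thresholds are rough assumptions, not limits enforced by any platform.

```python
import array
import math
import sys
import wave
from typing import List


def check_clone_sample(path: str, min_seconds: float = 30.0,
                       min_rate: int = 44_100) -> List[str]:
    """Return a list of problems with a WAV voice sample (empty list = looks OK)."""
    with wave.open(path, "rb") as wf:
        rate = wf.getframerate()
        width = wf.getsampwidth()  # bytes per sample
        frames = wf.getnframes()
        raw = wf.readframes(frames)

    problems: List[str] = []
    duration = frames / rate
    if duration < min_seconds:
        problems.append(f"too short: {duration:.1f}s < {min_seconds:.0f}s")
    if rate < min_rate:
        problems.append(f"sample rate {rate} Hz < {min_rate} Hz")
    if width < 2:
        problems.append(f"bit depth {8 * width} < 16")
    if width != 2:
        return problems  # the level check below only handles 16-bit PCM

    samples = array.array("h", raw)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    peak = max((abs(s) for s in samples), default=0)
    if peak == 0:
        problems.append("file is silent")
        return problems
    peak_dbfs = 20 * math.log10(peak / 32768)
    if peak_dbfs > -1.0:
        problems.append(f"peak {peak_dbfs:.1f} dBFS: likely clipping")
    elif peak_dbfs < -20.0:
        problems.append(f"peak {peak_dbfs:.1f} dBFS: too quiet, normalize first")
    return problems
```

Run it on every take; an empty list means the file passes these basic checks, not that the clone will sound good, so still test the clone before committing.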

SSML Reference Guide

Basic SSML Tags for Text-to-Speech

```xml
<speak>
  <!-- Add a pause -->
  <break time="500ms"/>
  <break strength="strong"/>

  <!-- Emphasize words -->
  <emphasis level="strong">critical</emphasis>
  <emphasis level="moderate">important</emphasis>

  <!-- Control pronunciation (American "tomato": tuh-MAY-toh) -->
  <phoneme alphabet="ipa" ph="təˈmeɪtoʊ">tomato</phoneme>
  <phoneme alphabet="x-sampa" ph="t@'meItoU">tomato</phoneme>

  <!-- Say as specific type -->
  <say-as interpret-as="characters">API</say-as>
  <say-as interpret-as="cardinal">42</say-as>
  <say-as interpret-as="ordinal">1</say-as> <!-- read as "first" -->
  <say-as interpret-as="date" format="mdy">12/25/2025</say-as>
  <say-as interpret-as="telephone">+1-555-123-4567</say-as>

  <!-- Control pitch and rate -->
  <prosody rate="slow" pitch="+10%">Slower and higher</prosody>
  <prosody rate="120%" volume="loud">Faster and louder</prosody>

  <!-- Substitute pronunciation -->
  <sub alias="World Wide Web Consortium">W3C</sub>
</speak>
```

Platform SSML Support Comparison

| Platform | Full SSML | Custom Tags | Notes |

|---|---|---|---|

| ElevenLabs | Partial | [whisper], [excited], [sad] | Emotion tags in text |

| Google Cloud | ✅ Full | Standard W3C | Best SSML support |

| Azure Speech | ✅ Full | Extended SSML + MSTTS | Speaking styles |

| Amazon Polly | ✅ Full | NTTS extensions | Newscaster style |

| OpenAI TTS | ❌ None | Natural language prompts | Use plain text |

| Cartesia | Partial | Volume, rate controls | API parameters |
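When text comes from a manuscript or user input, building SSML by string concatenation is error-prone: a stray `&` or `<` makes the whole document invalid. A sketch using the standard library's `xml.etree.ElementTree`, which escapes text automatically; it emits only `<speak>`, `<p>`, and `<break>`. Engines differ in what they require around that core (Azure, for instance, expects a `<voice>` element), so treat the output as a starting point rather than a drop-in request body for every platform.

```python
import xml.etree.ElementTree as ET
from typing import Iterable


def paragraphs_to_ssml(paragraphs: Iterable[str], pause_ms: int = 500) -> str:
    """Wrap each paragraph in <p> inside <speak>, with a <break> between paragraphs.

    ElementTree escapes &, < and > in the text, so the result is well-formed XML.
    """
    speak = ET.Element("speak")
    for i, text in enumerate(paragraphs):
        if i > 0:
            ET.SubElement(speak, "break", time=f"{pause_ms}ms")
        ET.SubElement(speak, "p").text = text
    return ET.tostring(speak, encoding="unicode")
```

For example, `paragraphs_to_ssml(["Fish & chips", "Ready?"], pause_ms=300)` escapes the ampersand as `&amp;` and places a 300 ms break between the two paragraphs.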

Common SSML Use Cases

Technical Terms:

```xml
<speak>
  The <say-as interpret-as="characters">API</say-as>
  uses <sub alias="JavaScript Object Notation">JSON</sub> format.
</speak>
```

Phone Numbers and Addresses:

```xml
<speak>
  Call us at <say-as interpret-as="telephone">1-800-555-1234</say-as>.
  <!-- Not every engine supports interpret-as="address"; Amazon Polly and Azure do. -->
  We're located at <say-as interpret-as="address">123 Main Street</say-as>.
</speak>
```

Dramatic Reading:

```xml
<speak>
  <prosody rate="slow">
    The door <break time="300ms"/> slowly <break time="200ms"/> opened.
  </prosody>
  <prosody rate="fast" volume="loud">
    Suddenly, a scream pierced the night!
  </prosody>
</speak>
```

Pronunciation Dictionary

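Create consistent pronunciations for your content. Use exactly one respelling per term (for example, choose either “ess-queue-el” or “sequel” for SQL, and “jif” or a hard-g “gif” for GIF), because an “or” inside a value would be read aloud:

```json
{
  "pronunciations": {
    "API": "ay-pee-eye",
    "CEO": "see-ee-oh",
    "GitHub": "git-hub",
    "nginx": "engine-x",
    "Kubernetes": "koo-ber-net-eez",
    "PyTorch": "pie-torch",
    "SQL": "ess-queue-el",
    "GIF": "jif"
  }
}
```

Advanced Music Prompting Techniques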

The Prompt Structure Formula

For best results, structure prompts as:

```text
[Genre] + [Mood/Energy] + [Instruments] + [Era/Style] + [Vocals] + [Production Notes] + [Duration]
```

Genre-Specific Prompt Templates

Epic Orchestral (Film Score):

```text
Epic cinematic orchestral score, Hans Zimmer style, building tension,
full orchestra with brass fanfare climax, soaring strings,
timpani drums, no vocals, modern blockbuster film production, 2 minutes
```

Lo-fi Hip Hop (Study Music):

```text
Lo-fi hip hop beat, relaxed chill vibes, vinyl crackle texture,
jazzy piano samples with warm chords, mellow bass, soft boom-bap drums,
rain ambiance, no vocals, perfect for studying, 3 minutes
```

Pop Hit (Radio Ready):

```text
Upbeat pop song, 2025 modern production, catchy hook with singalong chorus,
female vocal, synth-driven with acoustic guitar accent,
dance-pop energy, radio-ready mix, verse-chorus-verse-chorus-bridge-chorus
```

Rock Anthem:

```text
Powerful rock anthem, arena rock energy, distorted electric guitars,
driving drums with big fills, anthemic chorus, male vocal with power,
80s influence with modern production, builds to epic finale
```

Electronic/EDM:

```text
High-energy EDM track, festival-ready drop, progressive build-up,
supersaw synths, punchy kick, sidechain compression,
euphoric breakdown, no vocals, peak-time club banger, 128 BPM
```

Genre-Specific Keywords Reference

| Genre | Effective Keywords |

|---|---|

| Rock | distorted guitars, power chords, driving drums, anthemic, arena rock, riff-heavy |

| EDM | drop, synth bass, sidechain, festival energy, build-up, euphoric, supersaw |

| Jazz | swing, walking bass, brass section, smoky lounge, bebop, improvisation, brushed drums |

| Classical | string quartet, chamber music, romantic era, full orchestra, legato, pizzicato |

| Country | twangy guitar, pedal steel, Nashville production, storytelling, honky-tonk, fiddle |

| Hip Hop | 808 bass, trap hi-hats, boom bap, sampled loops, flow, bars, producer tag |

| R&B | smooth vocals, neo-soul, groove, sensual, falsetto, lush harmonies |

| Ambient | atmospheric pads, drone, evolving textures, soundscape, ethereal, minimal |

Lyric Formatting Best Practices

```text
[Intro - atmospheric synth pad, 8 bars]

[Verse 1]
Walking through the city lights at midnight
Every star above reflects in your eyes
The world is sleeping but we're wide awake
Making memories that we'll never forsake

[Pre-Chorus - building energy]
And I know, I know, I know
This feeling's taking over

[Chorus - full energy, catchy hook]
We're unstoppable tonight!
Dancing under neon lights!
Nothing's gonna bring us down
We own this town, we own this town!

[Verse 2]
(Continue pattern...)

[Bridge - stripped back, emotional, half-time feel]
When the morning comes
And reality sets in
I'll still remember
The night we shared

[Final Chorus - biggest energy, ad libs]
We're unstoppable tonight!
(Yeah, we're unstoppable!)
...

[Outro - fade out with instrumental]
```

Mood and Energy Descriptors

| Energy Level | Descriptors |

|---|---|

| Peaceful | serene, tranquil, meditative, floating, gentle, soft |

| Melancholy | bittersweet, nostalgic, reflective, wistful, emotional |

| Upbeat | energetic, bouncy, cheerful, fun, playful, bright |

| Intense | powerful, driving, aggressive, urgent, explosive |

| Epic | cinematic, triumphant, majestic, soaring, grandiose |

| Dark | ominous, brooding, sinister, heavy, mysterious |
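The prompt structure formula can be applied consistently with a small helper that assembles the components in the formula's order and skips any you leave out, drawing on the genre keywords and mood descriptors above. This is a hypothetical drafting helper, not part of Suno's or Udio's interface; paste its output into the prompt box.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MusicPrompt:
    """Genre + Mood/Energy + Instruments + Era/Style + Vocals + Production Notes + Duration."""
    genre: str
    mood: List[str] = field(default_factory=list)
    instruments: List[str] = field(default_factory=list)
    era_style: Optional[str] = None
    vocals: Optional[str] = None  # e.g. "female vocal" or "no vocals"
    production: Optional[str] = None
    duration: Optional[str] = None

    def render(self) -> str:
        parts = [self.genre, ", ".join(self.mood), ", ".join(self.instruments),
                 self.era_style, self.vocals, self.production, self.duration]
        # Keep the formula's order and drop empty components.
        return ", ".join(p for p in parts if p)
```

For example, `MusicPrompt(genre="Lo-fi hip hop beat", mood=["relaxed", "chill"], instruments=["jazzy piano", "mellow bass"], vocals="no vocals", duration="3 minutes").render()` produces `Lo-fi hip hop beat, relaxed, chill, jazzy piano, mellow bass, no vocals, 3 minutes`.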

As AI voice technology improves, so must our defenses. It’s an arms race—and currently, the attackers have the advantage.

The Detection Challenge

Why This Is Hard:

Detection is fundamentally difficult because AI voice is designed to sound human. The same neural networks that make voices convincing also make them hard to detect. It’s like asking a colorblind person to spot a counterfeit bill that only differs in color.

- AI-generated voices are now nearly indistinguishable from real voices

- Humans correctly identify AI voices only ~60% of the time

- Detection systems require constant retraining as AI improves

- Multi-layered approaches are essential for reliability

Deepfake Detection Technologies

Chart: effectiveness by approach (values not recoverable). Approaches compared: examining audio frequency patterns, identifying live human markers, finding embedded AI signatures, and ML models that classify audio as fake or real.

⚠️ Critical: Voice deepfakes are outpacing visual deepfakes in frequency. Always use multi-factor verification for high-value transactions.

Sources: Pindrop • Reality Defender

Deepfake Detection Market (December 2025)

The detection industry is racing to catch up with the threat:

| Metric | Value | Source |

|---|---|---|

| 2025 Market Size | $857 million | MarketsandMarkets |

| Projected 2031 Market | $7.27 billion | MarketsandMarkets |

| Growth Rate (CAGR) | 42.8% | Market.us |

| Alternative Estimate 2025 | $211 million | Infinity Market Research |

| Alternative 2034 Projection | $5.6 billion (47.6% CAGR) | Market.us |

Note: Estimates vary widely across research firms, reflecting the nascent and rapidly evolving nature of this market.
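One way to sanity-check figures like these is to recompute the growth rate. Compound annual growth rate is CAGR = (end value / start value)^(1 / years) − 1. Applied to the MarketsandMarkets figures ($857 million in 2025 to $7.27 billion in 2031, i.e. six years), it gives about 42.8%, consistent with the growth-rate row. A minimal sketch:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.428 means 42.8%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


rate = cagr(857e6, 7.27e9, 2031 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 42.8%
```

The same check shows why the alternative estimates cannot be reconciled: they start from different base-year values, so their growth rates are not directly comparable.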

What You Can Do

For Businesses:

- Multi-factor verification: Never approve financial transactions based on voice alone

- Detection APIs: Integrate solutions like Pindrop or Reality Defender into call centers

- Employee training: Regular deepfake awareness sessions (77% of victims could have been saved with better training)

- Callback verification: Establish protocols to call back on known numbers for high-value transactions

- AI watermarking: Require vendors to use C2PA or SynthID on all AI-generated content

For Individuals:

- Create family “safe words”: A secret code word only family members know

- Be skeptical of urgency: Scammers create panic to bypass critical thinking

- Call back on known numbers: If your “bank” calls, hang up and call the number on your card

- Check multiple channels: If someone emails an urgent request, call them to verify

- Report suspected deepfakes: Help platforms improve detection

Industry Solutions

| Solution | Focus | Best For |

|---|---|---|

| Pindrop | Voice authentication, call center fraud | Financial services, enterprises |

| Reality Defender | Content authentication | Media, government, enterprises |

| Attestiv | Content authenticity verification | Insurance, legal, media |

| ElevenLabs C2PA | Content watermarking | Creators using ElevenLabs |

| Resemble AI Detect | Voice deepfake detection | Call centers, verification |

Sources: MarketsandMarkets, Market.us, Infinity Market Research

How Detection Technology Works

Watermarking Technologies:

| Technology | Provider | Method | Detection |

|---|---|---|---|

| C2PA | Coalition (Adobe, Microsoft, etc.) | Cryptographic signatures in metadata | Visible in supporting apps |

| SynthID | Google DeepMind | Imperceptible watermark in audio | ML-based detector |

| ElevenLabs Watermark | ElevenLabs | C2PA implementation | API verification |

| Resemble Detect | Resemble AI | Neural audio fingerprinting | Real-time API |

Detection Algorithms:

- Spectral Analysis: AI voices have different frequency patterns than human speech

- Temporal Features: Breathing patterns, micro-pauses differ in synthetic speech

- Prosodic Analysis: Pitch variations and emphasis patterns

- Liveness Detection: Real-time challenges to verify live speaker

- Watermark Verification: Check for known AI platform signatures

Detection Accuracy by Method:

| Method | Accuracy | False Positive Rate | Best For |

|---|---|---|---|

| Watermark check | 99%+ | <0.1% | Known AI platforms |

| Neural classifier | 85-95% | 5-15% | Unknown sources |

| Spectral analysis | 70-85% | 10-20% | Quick screening |

| Human judgment | 24-60% | 40-75% | Should not rely on |
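False positive rates matter more than they first appear, because genuine calls vastly outnumber deepfakes. A worked example with Bayes' rule, assuming for illustration only that 1 in 100 calls is a deepfake and taking the midpoints of the neural classifier row (90% detection, 10% false positives): P(fake | flagged) = (0.90 × 0.01) / (0.90 × 0.01 + 0.10 × 0.99) ≈ 8.3%, so most flags would be false alarms. The watermark row scores far better, but only for audio from platforms that embed a watermark.

```python
def flagged_is_fake(detection_rate: float, false_positive_rate: float,
                    base_rate: float) -> float:
    """Probability that a flagged call really is a deepfake (Bayes' rule)."""
    true_flags = detection_rate * base_rate
    false_flags = false_positive_rate * (1 - base_rate)
    total = true_flags + false_flags
    return true_flags / total if total else 0.0


# Illustrative base rate: 1% of calls are deepfakes.
print(f"{flagged_is_fake(0.90, 0.10, 0.01):.1%}")   # 8.3%  (neural classifier midpoints)
print(f"{flagged_is_fake(0.99, 0.001, 0.01):.1%}")  # 90.9% (watermark check, 99% / 0.1%)
```

This is one reason the multi-layered approach recommended above works better than any single check: each additional independent signal shrinks the pool of false alarms.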

AI Voice for Accessibility

Screen Reader Enhancement

AI voice technology is revolutionizing accessibility for visually impaired users:

- Custom voice profiles: Create personalized, natural-sounding narration

- Emotional context: Hume AI adds appropriate emotional cues to text

- Multi-language support: Real-time translation with natural voices

- Faster consumption: Adjustable speed without pitch distortion

Voice Banking for ALS/MND Patients

One of the most impactful applications of voice cloning is preserving voices for people facing speech loss:

The Process:

- Record early: Bank voice samples before significant speech changes

- Multiple sessions: Capture varied content over time for best quality

- Clone creation: ElevenLabs and other platforms create personal voice

- AAC integration: Use cloned voice with augmentative communication devices

- Ongoing updates: Refresh clone as technology improves

Resources:

- ElevenLabs Voice Restoration - Free access for eligible patients

- Team Gleason Voice Banking - Resources and support

- My Own Voice by Acapela - AAC-focused solution

💬 Impact Story: “When my father was diagnosed with ALS, we recorded his voice. After he lost the ability to speak, he could still ‘read’ bedtime stories to his grandchildren in his own voice. The technology gave him back a piece of himself.”

Dyslexia and Reading Assistance

AI-Narrated Reading Tools:

- Speechify: Document reading with premium AI voices

- NaturalReader: PDF, ebook, and web page narration

- Immersive Reader (Microsoft): Built into Office and Edge

Benefits:

- Adjustable reading speed without comprehension loss

- Word highlighting synchronized with audio

- Multi-language pronunciation support

- Reduced cognitive load for reading-intensive tasks

Deaf and Hard-of-Hearing Support

AI audio technology complements accessibility with:

- Real-time captioning: Scribe v2 (ElevenLabs) with <150ms latency

- Caption generation: Automatic subtitles from any audio source

- Visual speech representation: Waveform and phoneme visualization

- Sign language integration: AI-generated text as bridge

Open Source Alternatives

Text-to-Speech Open Source Options

| Project | Quality | Languages | License | GPU Required | Best For |

|---|---|---|---|---|---|

| Coqui TTS | ⭐⭐⭐⭐ | 20+ | MPL 2.0 | Recommended | Self-hosting, customization |

| Piper | ⭐⭐⭐ | 40+ | MIT | No | Edge devices, Raspberry Pi |

| VITS | ⭐⭐⭐⭐ | Custom | MIT | Yes | Research, custom voices |

| Mozilla TTS | ⭐⭐⭐ | 10+ | MPL 2.0 | Recommended | Learning, research |

| eSpeak NG | ⭐⭐ | 100+ | GPL | No | Accessibility, lightweight |

| Bark | ⭐⭐⭐⭐ | Multi | MIT | Yes | Expressive, non-speech audio |

| StyleTTS2 | ⭐⭐⭐⭐⭐ | English | MIT | Yes | Highest quality open source |

Music Generation Open Source

| Project | Quality | License | Notes |

|---|---|---|---|

| Meta MusicGen | ⭐⭐⭐⭐ | CC-BY-NC | 30-sec generations, text-to-music |

| Stable Audio Open | ⭐⭐⭐ | Custom | Sound effects focused |

| Riffusion | ⭐⭐⭐ | MIT | Spectrogram diffusion, visual approach |

| AudioCraft | ⭐⭐⭐⭐ | MIT | Meta’s full audio suite (MusicGen + AudioGen) |

| Moûsai | ⭐⭐⭐ | MIT | Long-form music generation |

Self-Hosting Considerations

Pros:

- ✅ Full data privacy and control

- ✅ No per-character or API costs at scale

- ✅ Customization freedom

- ✅ No rate limits

- ✅ Offline operation possible

Cons:

- ❌ Requires GPU infrastructure ($0.50-3/hour cloud)

- ❌ Generally lower quality than commercial options

- ❌ Technical maintenance burden

- ❌ No official support

- ❌ May require ML expertise for customization

Quick Start with Coqui TTS:

```bash
# Install
pip install TTS

# List available models
tts --list_models

# Generate speech
tts --text "Hello, this is open source TTS!" \
  --model_name "tts_models/en/ljspeech/tacotron2-DDC" \
  --out_path output.wav
```

Audio Post-Processing & Enhancement

AI-Generated Audio Enhancement

Even the best AI audio benefits from post-processing:

Quick Enhancement Tools:

| Tool | Function | Price | Best For |
|---|---|---|---|
| Adobe Podcast “Enhance Speech” | Noise removal, clarity | Free | Quick cleanup |
| Auphonic | Auto-leveling, mastering | 2 hrs/mo free | Podcasters |
| Descript Studio Sound | Professional enhancement | $12/mo+ | Full editing |
| Dolby.io | API-based enhancement | Pay-as-you-go | Developers |
| iZotope RX | Professional repair | $129+ | Audio pros |

The Mastering Chain

For professional results, process AI audio through:

1. Noise Reduction (if needed): remove any artifacts or background noise

2. EQ Adjustment: boost clarity (2-4 kHz), reduce muddiness (200-400 Hz)

3. Compression: even out volume (3:1 ratio, -18 dB threshold)

4. De-essing (for vocals): reduce harsh "s" sounds (4-8 kHz)

5. Limiting: prevent clipping (-1 dB ceiling)

6. Format Conversion: export in the required format (MP3 at 320 kbps, or WAV)

Combining AI Audio with DAW Workflow

Professional Integration:

- Generate TTS in ElevenLabs (download as WAV for highest quality)

- Import into DAW (Audacity for free, Logic/Ableton for pro)

- Apply EQ and compression to match your mix

- Generate background music in Suno/Udio

- Layer voice over music with proper levels (-6dB voice, -12dB music)

- Add transitions and sound effects as needed

- Master final mix for consistent loudness

- Export in required format and bitrate
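The compression and limiting steps in the mastering chain above reduce to gain arithmetic in decibels. The sketch below shows a hard-knee compressor's static curve, using that chain's 3:1 ratio and -18 dB threshold, followed by a -1 dB hard ceiling. A few caveats:

- The makeup-gain parameter and the function names are illustrative additions.
- Real compressors and limiters also smooth gain changes over time (attack, release, look-ahead), which this sketch omits.

```python
def compress_db(level_db: float, threshold_db: float = -18.0,
                ratio: float = 3.0, makeup_db: float = 0.0) -> float:
    """Static hard-knee compression curve, applied to a level in dB.

    Below the threshold the level passes through unchanged; above it, every
    `ratio` dB of input yields only 1 dB of output. Makeup gain is added last.
    """
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db


def limit_db(level_db: float, ceiling_db: float = -1.0) -> float:
    """Hard ceiling: clamp any level above `ceiling_db` (no look-ahead)."""
    return min(level_db, ceiling_db)


for level in (-30.0, -18.0, -12.0, -6.0, 0.0):
    out = limit_db(compress_db(level, makeup_db=12.0))
    print(f"in {level:6.1f} dB -> out {out:6.1f} dB")
```

Above the threshold, each 3 dB of extra input adds only 1 dB of output, so a 0 dB peak comes out at -12 dB before makeup gain. With 12 dB of makeup gain that peak would reach 0 dB, and the ceiling pulls it back to -1 dB.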

Recommended Levels:

- Voice/dialogue: -6 to -3 dB

- Background music: -18 to -12 dB

- Sound effects: -12 to -6 dB

- Master output: -1 dB peak, -14 LUFS integrated
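The levels above are in decibels, which map to linear amplitude as gain = 10^(dB/20). The sketch below places a voice track at -6 dB and music at -18 dB, then checks the summed peak. It assumes the figures are peak levels relative to digital full scale (dBFS) and uses toy sample lists instead of real audio files.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a level in dB (relative to full scale) to a linear amplitude factor."""
    return 10 ** (db / 20)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude factor back to dB."""
    return 20 * math.log10(gain)


def normalize_peak(samples: list[float], target_db: float) -> list[float]:
    """Scale samples so their loudest absolute value sits at `target_db`."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)
    scale = db_to_gain(target_db) / peak
    return [s * scale for s in samples]


def mix(*tracks: list[float]) -> list[float]:
    """Sum equal-length tracks sample by sample."""
    return [sum(frame) for frame in zip(*tracks)]


# Toy tracks (hypothetical sample values in the -1.0..1.0 range).
voice = normalize_peak([0.0, 0.8, -0.5, 0.3], target_db=-6.0)
music = normalize_peak([0.2, -0.4, 0.4, -0.1], target_db=-18.0)
master = mix(voice, music)

master_peak_db = gain_to_db(max(abs(s) for s in master))
print(f"master peak: {master_peak_db:.2f} dB")
```

Even if the voice and music peaks lined up exactly, the sum would peak at 10^(-6/20) + 10^(-18/20) ≈ 0.627, about -4 dB, which leaves headroom under the -1 dB ceiling. Hitting the -14 LUFS target is a separate step. LUFS is a K-weighted loudness measurement (ITU-R BS.1770), not a peak level, and this sketch does not compute it.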

The Future of AI Audio: What’s Coming

Near-Term Predictions (2026)

- Real-time voice translation with emotion preservation (already emerging)

- AI music generation indistinguishable from human compositions

- Universal voice cloning standards and consent frameworks

- AI-generated soundtracks synchronized to video automatically

- Voice-first AI assistants as a primary computing interface

Medium-Term Horizon (2027-2028)

- Personalized AI musicians adapting to listener preferences in real-time

- Full audiobook generation with multiple character voices, auto-casting

- Real-time dubbing for live broadcasts

- AI sound design for AR/VR environments

- Democratization of professional audio production

The Emerging Trend: Emotion AI 🔥

The hottest development in 2025 is Emotion AI—AI systems that recognize and express emotions in voice.

Key Players:

- Hume AI (Octave/EVI): Leading empathic voice platform

- Cartesia: Emotion-aware synthesis with ultra-low latency

- ElevenLabs: Emotion control in voice generation

Applications:

- Customer service: Empathetic AI agents

- Mental health: Supportive voice companions

- Gaming: Emotionally responsive NPCs

- Education: Adaptive AI tutors

2025 Milestone: PieX AI launched an emotion-tracking pendant at CES 2025, using radar technology for on-device emotion detection.

Challenges Ahead

- Regulatory frameworks struggling to keep pace

- Voice talent displacement concerns

- Copyright and training data controversies

- Deepfake detection arms race

- Ethical considerations for deceased voice recreation

Opportunities

- Accessibility: Audio content for all languages and abilities

- Creativity: New forms of musical and audio expression

- Efficiency: Rapid content production at scale

- Personalization: Custom audio experiences for every user

AI Audio Market Growth

2025 vs 2030 projections (in billions USD)

📈 Fastest Growth: AI Music Generation is projected to grow 6x by 2030, from $2.92B to $18.47B.

Sources: MarketsandMarkets • Business Research Company • Grand View Research
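The "6x" figure can be turned into an implied annual growth rate with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The sketch below uses only the 2025 and 2030 AI music generation figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# AI music generation: $2.92B (2025) -> $18.47B (2030), as quoted above.
music_multiple = 18.47 / 2.92
music_cagr = cagr(2.92, 18.47, years=2030 - 2025)
print(f"{music_multiple:.2f}x growth, ~{music_cagr:.1%} per year")
```

This works out to roughly 6.3x over five years, or about 44.6% compound growth per year.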

Key Takeaways

Let’s wrap up with the essential points:

- AI has made professional audio production accessible to everyone - What cost thousands now costs dollars, with 75-99% cost savings across use cases

- Text-to-speech is now indistinguishable from human voice - ElevenLabs leads at $6.6B valuation with 98% realism scores

- Voice cloning is powerful but requires ethical consideration - Consent and transparency are crucial; legal frameworks are evolving

- AI music generation is revolutionizing content creation - Suno and Udio lead with billion-dollar valuations and major label partnerships

- Emotion AI is the next frontier - Hume AI and Cartesia enable empathetic voice interaction with emotional memory

- Regulations are evolving to address risks - EU AI Act, TAKE IT DOWN Act set new standards; EU AI Act fines reach up to €35M or 7% of global annual turnover

- Deepfake detection is a $857M market - Growing to $7.3B by 2031; C2PA content credentials and SynthID watermarking are becoming standard

- API integration is straightforward - Python/Node SDKs available; most platforms under 1 hour to first generation

- Open source alternatives exist - Coqui TTS, MusicGen offer self-hosting options for privacy-conscious users

- Industry-specific applications are expanding - Healthcare, education, gaming, and financial services leading adoption

Action Items

For Beginners:

- ✅ Try ElevenLabs (free tier) for your first TTS project today

- ✅ Generate a track with Suno to experience AI music firsthand

- ✅ Create a family safe word for voice deepfake protection

- ✅ Test OpenAI TTS with the new December 2025 models

- ✅ Explore accessibility features if you or others could benefit

For Developers:

- 🔧 Integrate the ElevenLabs API using the code examples in this guide

- 🔧 Implement error handling with exponential backoff for production

- 🔧 Explore streaming endpoints for low-latency applications

- 🔧 Consider open source (Coqui TTS, MusicGen) for self-hosted solutions

- 🔧 Add C2PA content credentials to AI-generated content

For Businesses:

- 💼 Calculate ROI using the cost comparison tables

- 💼 Review compliance requirements (HIPAA, GDPR, SOC 2) for your platform choice

- 💼 Implement multi-factor verification beyond voice for sensitive transactions

- 💼 Train employees on deepfake detection (77% of victims could have been saved)

- 💼 Establish voice cloning consent policies aligned with EU AI Act requirements
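For the exponential backoff item above, here is a minimal, library-agnostic sketch. Two names are hypothetical placeholders:

- `TransientError` stands in for whatever retryable errors your SDK raises (rate limits, 5xx responses).
- `flaky_tts_request` stands in for a real TTS call.

Check your provider's SDK first, since some official clients already retry automatically.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical placeholder for retryable failures (rate limits, 5xx errors)."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 0.5, max_delay: float = 8.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying on TransientError with capped exponential backoff.

    The delay ceiling doubles per retry (0.5 s, 1 s, 2 s, ...) up to `max_delay`,
    and the actual wait is drawn uniformly from [0, ceiling] ("full jitter")
    so that many clients do not retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return call()
        except TransientError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of attempts: surface the last error
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))


# Demo: a hypothetical TTS request that is rate limited twice, then succeeds.
calls = {"count": 0}


def flaky_tts_request() -> bytes:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("429 Too Many Requests")
    return b"fake audio bytes"


audio = with_backoff(flaky_tts_request, sleep=lambda seconds: None)  # no real waiting
print(calls["count"], len(audio))
```

Injecting `sleep` keeps the retry logic testable without real delays. In production, leave it at the default `time.sleep`.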

What’s Next?

This is Article 18 in our AI Learning Series. Continue your journey:

- Previous: AI Video Generation - From Text to Moving Pictures

- Next: AI for Design - Canva, Figma AI, and Design Automation

Related Articles: