The Trajectory of Intelligence

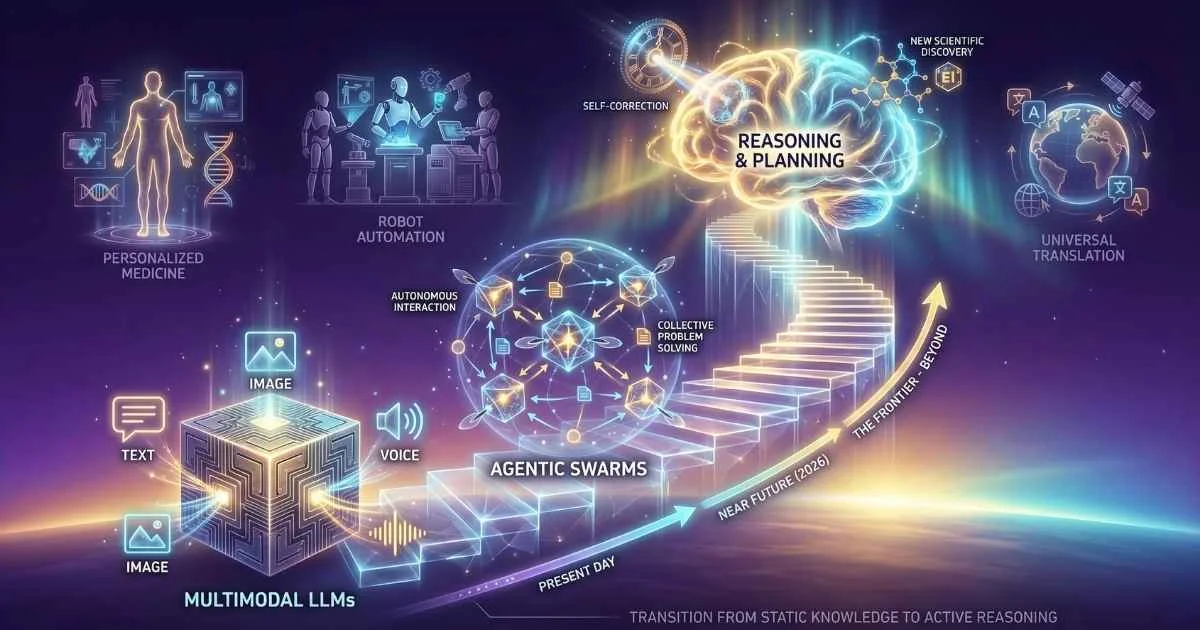

Predicting the future of AI has historically been a perilous exercise—usually because progress moves faster than anticipated. In 2025, we find ourselves at a critical inflection point where experimental capabilities are hardening into reliable infrastructure.

The focus has shifted from “what can AI do?” to “what can AI achieve autonomously?”

Current research trajectories point toward three major trends for 2026 and beyond: the rise of reasoning agents (System 2 thinking), the integration of multimodal senses (sight, sound, touch), and the push for personalization at the device level. For a deep dive into autonomous AI, see the AI Agents guide.

This forecast analyzes the technical roadmap for the next generation of Large Language Models:

| Date | Model | Key Highlight |
|---|---|---|
| Nov 18, 2025 | Gemini 3 Pro | PhD-level reasoning, 37.5% on Humanity’s Last Exam (Google Blog) |
| Nov 24, 2025 | Claude Opus 4.5 | 80.9% on SWE-bench Verified, best-in-class coding (Anthropic) |
| Dec 1, 2025 | DeepSeek V3.2 | Open-source, IMO gold-medal level reasoning (DeepSeek) |
| Dec 2, 2025 | Mistral Large 3 | 41B active/675B total params, open weights (Mistral AI) |
| Dec 4, 2025 | Gemini 3 Deep Think | 93.8% on GPQA Diamond, 45.1% on ARC-AGI-2 (Google Blog) |
| Dec 11, 2025 | GPT-5.2 | 400K context, 11x faster than human experts (OpenAI) |

The pace is accelerating, not slowing. And understanding where we’re heading has never been more important.

In this article, I’ll share what the research and data tell us about the future of Large Language Models—from new scaling paradigms that are reshaping how we think about AI capabilities, to the agentic revolution that’s transforming AI from conversation partners to autonomous workers.

💡 Why This Matters Now: According to McKinsey’s 2025 State of AI report, 88% of organizations now use AI in at least one business function—up from 78% just one year ago. The agentic AI market alone is projected to reach $7.6-8.3 billion in 2025 (Business Research Company). For understanding current AI capabilities, see the Understanding the AI Landscape guide.

By the end, you’ll understand:

- The new scaling paradigms beyond just “bigger models”

- How agents are evolving from chatbots to autonomous teams

- Where multimodal AI is heading

- The path (and debates) around AGI

- How regulation is shaping AI development

- Practical strategies for staying current

For understanding AI limitations and ethics, see the Understanding AI Safety, Ethics, and Limitations guide.

Let’s dive in.

At a glance: 88% of organizations use AI (up from 78%), 62% of enterprises are experimenting with agents, the AI agents market is projected at $7.6-8.3B for 2025, and more than 10,000 MCP servers are active.

Sources: McKinsey State of AI 2025 • Business Research Co. • Anthropic MCP

The New Scaling Paradigms: Beyond Bigger Models

For years, the AI industry operated on a simple principle: bigger is better. GPT-2 had 1.5 billion parameters. GPT-3 had 175 billion. GPT-4 jumped to an estimated 1.76 trillion. The pattern was clear—scale up, and capabilities follow.

But something interesting happened in 2024-2025. The rules of the game started changing.

The Original Scaling Law Era (2020-2024)

OpenAI’s 2020 scaling laws revealed a powerful insight: model performance improves predictably with more parameters, more training data, and more compute. If you wanted better AI, you just had to throw more resources at training.

Then came DeepMind’s “Chinchilla” research in 2022, which showed that many large models were actually undertrained: for a fixed compute budget, a smaller model trained on more data performs better than a larger model trained on less. This led to more efficient models like LLaMA.
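The Chinchilla result can be made concrete with two widely used rules of thumb, both approximations rather than exact laws: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and a compute-optimal ratio of roughly 20 tokens per parameter. The sketch below, under those assumptions, splits a fixed compute budget into a model size and a token count; the function name `compute_optimal_split` is our own.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6   # approximation: C ≈ 6 * N * D for dense transformers
TOKENS_PER_PARAM = 20       # approximate Chinchilla-optimal ratio (assumption)


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, tokens) that spend `compute_flops` at ~20 tokens/param.

    Solves C = 6 * N * D with D = 20 * N, so N = sqrt(C / 120).
    """
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


# Chinchilla itself: ~70B parameters trained on ~1.4T tokens.
budget = FLOPS_PER_PARAM_TOKEN * 70e9 * 1.4e12   # ≈ 5.9e23 FLOPs
params, tokens = compute_optimal_split(budget)
print(f"{params / 1e9:.0f}B params, {tokens / 1e12:.1f}T tokens")  # prints: 70B params, 1.4T tokens
```

For comparison, GPT-3 (175B parameters) was trained on about 300B tokens, fewer than 2 tokens per parameter, which is what "undertrained" means in this framing. Note also that quadrupling the budget only doubles the optimal model size, because parameters grow with the square root of compute.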

But here’s the thing: training costs were becoming astronomical. GPT-4 reportedly cost over $100 million to train (Forbes). Gemini Ultra cost an estimated $191 million in training compute (Stanford AI Index). Only a handful of organizations could afford to play this game.

The Shift to Test-Time Compute (2024-2025)

The breakthrough of 2024-2025 was recognizing that you don’t just have to scale up training—you can also scale up inference.

What does this mean in practice?

Let me explain with an analogy. Imagine you’re taking an exam:

- Traditional LLMs (like early GPT-4) are like students who immediately write down their first answer—fast, but sometimes wrong on hard problems

- Reasoning models (like o3 or Gemini Deep Think) are like students who take scratch paper, work through the problem step by step, check their reasoning, and then write down the answer

The second approach takes longer but gets more correct answers on difficult questions. That’s test-time compute.

When you ask a reasoning model like o3 or Gemini 3 Deep Think a complex question, it doesn’t just immediately spit out an answer. It “thinks” first—sometimes for seconds, sometimes for minutes—working through the problem step by step before responding.

🎯 Real-World Example: On December 4, 2025, Google launched Gemini 3 Deep Think mode, which scored 93.8% on GPQA Diamond (a graduate-level science benchmark) and an unprecedented 45.1% on ARC-AGI-2—a test designed to measure novel problem-solving ability (Google Blog).

The results are remarkable. A smaller model with more “thinking time” can match or exceed the performance of larger models on complex reasoning tasks. OpenAI’s o3-mini, for example, outperforms much larger models on STEM tasks through extended reasoning.
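One simple, well-studied way to spend extra inference compute is self-consistency: sample several independent answers and take a majority vote. This is not a description of how o3 or Deep Think work internally (those details are not public); it is a toy model that assumes each sample is independently correct with probability p and treats all wrong answers as a single competing answer.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Toy model: each sample is right with probability p, otherwise wrong,
    and all wrong answers are lumped together as one competing answer.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number to avoid ties")
    needed = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


for samples in (1, 5, 25):
    print(samples, round(majority_vote_accuracy(0.6, samples), 3))
```

With p = 0.6, a single sample is right 60% of the time, five votes raise that to about 68%, and more samples push it higher. If p is below 0.5, the same voting makes results worse, which is one reason extra thinking time pays off most when the model is already somewhat capable on the task.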

Figure: The Evolution of AI Scaling, from bigger models to smarter inference (sources: OpenAI Research, DeepMind Chinchilla, NVIDIA Blog)

🚀 Key Insight: The focus has shifted from training compute to inference compute: smaller models with more "thinking time" can match larger models.

Inference Optimization: The Real Frontier

Here’s a truth that’s reshaping the industry: for deployed AI applications, inference costs dominate, not training costs.

Training happens once. Inference happens billions of times. Every question you ask ChatGPT, every line of code Copilot suggests, every search Perplexity runs—that’s inference.

So the smartest labs are now investing heavily in making inference faster and cheaper:

- Quantization: Reducing the precision of model weights (from FP16 to FP8) can cut memory usage and speed up responses with minimal quality loss

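- Mixture of Experts (MoE): Instead of activating every parameter for every token, a router sends each token to a small subset of specialized “experts.” Mistral Large 3, for example, activates 41B of its 675B parameters; GPT-4 is widely reported, though not confirmed by OpenAI, to use the same approach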

- KV-Cache Optimization: Efficiently managing the model’s “memory” of the conversation to handle longer contexts

- Speculative Decoding: Using a small, fast model to draft responses that a larger model then verifies
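The memory saving from quantization comes from storing each weight in fewer bits plus a shared scale factor. The sketch below shows the simplest variant, symmetric per-tensor int8 quantization; this is an illustrative choice, since production FP8 and int4 schemes typically use per-channel or per-block scales and hardware-specific formats. Each weight becomes one byte instead of two for FP16, and rounding to the nearest integer bounds the reconstruction error by half the scale.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is stored as q with w ≈ scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest |w| to 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


weights = [0.12, -0.5, 0.33, 0.01, -0.27]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q)  # one byte per weight instead of two for FP16
print(f"max reconstruction error: {max_err:.4f} (bound: {scale / 2:.4f})")
```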

The result? Combined, these techniques can deliver large efficiency gains, on the order of 10x in some reported cases, on the same hardware. That’s why you’re seeing increasingly capable AI running on phones, laptops, and embedded devices. For more on running AI locally, see the Running LLMs Locally guide.
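Speculative decoding is easiest to see in its greedy form. In this sketch, both "models" are hypothetical toy functions that map a token prefix to the next token: a cheap draft model proposes k tokens, the target model checks them, and the first disagreement is replaced by the target's own token. Because every kept token is one the target would have chosen anyway, the output is identical to plain greedy decoding with the target. Real systems verify all drafted positions in one batched forward pass and use a probabilistic acceptance rule when sampling; both details are simplified away here.

```python
from collections.abc import Callable, Sequence

# A toy greedy "model": given a token prefix, return the next token.
NextToken = Callable[[Sequence[str]], str]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: list[str], max_new: int, k: int = 4) -> list[str]:
    """Greedy speculative decoding; the result equals greedy decoding with `target`."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal: list[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The target checks each drafted position (real systems batch this).
        accepted: list[str] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # first mismatch: keep target's token, drop the rest
                break
            accepted.append(tok)           # match: the drafted token is kept
        else:
            accepted.append(target(out + accepted))  # all k matched: one bonus token
        out.extend(accepted)
    return out[: len(prompt) + max_new]


SENTENCE = "the cat sat on the mat and then it slept".split()


def target_model(prefix: Sequence[str]) -> str:
    # Toy "large" model: deterministically continues SENTENCE.
    return SENTENCE[len(prefix)] if len(prefix) < len(SENTENCE) else "<eos>"


def draft_model(prefix: Sequence[str]) -> str:
    # Toy "small" model: agrees with the target except at one position.
    return "now" if len(prefix) == 7 else target_model(prefix)


def greedy(model: NextToken, prompt: list[str], max_new: int) -> list[str]:
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


prompt = ["the"]
fast = speculative_decode(target_model, draft_model, prompt, max_new=8)
print(" ".join(fast))  # same tokens as greedy(target_model, prompt, 8)
```

The speed-up comes from the fact that, whenever the draft is right, several tokens are confirmed for the cost of one round of target verification.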

What This Means for 2026

The implications are profound:

- Smaller, specialized models may outperform general giants for specific tasks

- Edge deployment becomes practical—powerful AI without needing the cloud

- Reasoning capabilities will continue their dramatic improvement

- Cost of AI will continue to drop, democratizing access

The “bitter lesson” of AI is evolving. It’s not just “scale training compute.” It’s “scale everything—training, inference, and specialization.”

The Agentic AI Revolution: From Assistants to Autonomous Workers

If I had to bet on the single most transformative AI trend for 2026, it would be this: the rise of agentic AI. For a complete guide to AI agents, see the AI Agents guide.

We’re witnessing a fundamental shift in what AI systems do:

- 2024: AI that responds to questions

- 2025: AI that takes actions to achieve goals

- 2026: AI that manages complex, long-running projects autonomously

Sam Altman said it clearly: “2025 is when agents will work.” And the data is staggering:

| Statistic | Source |
|---|---|
| 88% of organizations use AI in at least one business function (up from 78% in 2024) | McKinsey 2025 |
| 62% of organizations are at least experimenting with AI agents | McKinsey 2025 |
| 57% of companies already have AI agents running in production | G2 2025 Report |
| $7.6-8.3B projected AI agents market size in 2025 | Business Research Company |
| 93% of IT leaders plan to introduce autonomous AI agents within 2 years | MuleSoft/Deloitte 2025 |

Figure: The Agent Evolution, from chatbots to autonomous agent teams (sources: McKinsey AI Report, Gartner Predictions)

What Makes Agents Different

A chatbot responds to prompts. A copilot suggests actions for you to take. An agent acts on your behalf.

Think of it this way:

Chatbot: “Here are some good flights to NYC next week.”

Copilot: “I found a $250 flight on Tuesday. Want me to show you how to book it?”

Agent: “Done! I booked you on the $250 Tuesday flight, added it to your calendar, and sent the confirmation to your email.”

The key capabilities that define an agent:

| Capability | Description | Example |
|---|---|---|
| Goal-Oriented | Works toward defined objectives | “Book the cheapest flight to NYC” |
| Autonomous | Operates with minimal human intervention | Compares prices, handles booking |
| Tool-Using | Interacts with external systems | Browses web, calls APIs, uses apps |
| Adaptive | Learns from feedback and adjusts | Tries different approach if first fails |

This isn’t a subtle improvement—it’s a paradigm shift. Instead of AI being a fancy autocomplete, it becomes a worker that can complete multi-step tasks independently.

The December 2025 Agent Landscape

Let me walk you through the major agent platforms as they stand today:

OpenAI Operator & Agents SDK (launched January 2025, SDK March 2025)

- Browser-based task automation for ChatGPT Pro users

- The “CUA” (Computer-Using Agent) model can navigate websites, click buttons, and fill in forms

- GPT-5.2 brings state-of-the-art agentic tool-calling, scoring 55.6% on SWE-Bench Pro (OpenAI)

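- On OpenAI's GDPval evaluation, produces deliverables more than 11x faster than human experts at less than 1% of the cost

- The Agents SDK (March 2025) launched alongside the Responses API, the successor to the Assistants API, which OpenAI has since deprecated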

Claude Computer Use (launched October 2024)

- Controls entire computer desktop—not just browser

- Mouse movement, clicking, typing, application switching

- Claude Opus 4.5 showed a breakthrough in self-improving agents, reaching peak performance in 4 iterations where competing models couldn’t match that quality after 10 (Anthropic)

- New “effort parameter” lets developers balance performance with latency and cost

- Available via Anthropic API, AWS Bedrock, and Google Vertex AI

Google Project Mariner & Gemini

- Browser agent for Chrome, integrated with Gemini

- Gemini 3 Pro tops WebDev Arena leaderboard (Elo 1487) and scores 54.2% on Terminal-Bench 2.0 (Google Blog)

- Part of broader “Project Astra” universal assistant vision

- Native Google Workspace integration

- Now integrated into Google Antigravity (agentic development platform)

Salesforce Agentforce 360 (broader rollout December 2025)

- Operating system for the “agentic enterprise”

- Pre-built agents for service, sales, and marketing

- 119% increase in agent creation among early adopters (Salesforce)

- Integrated with Informatica for enterprise data governance

Microsoft Copilot Agents (December 2025)

- 12 new security agents for M365 E5 subscribers (bundled with Security Copilot)

- Agents for Defender, Entra, Intune, and Purview

- Threat detection, phishing triage, compliance auditing

- Built with Copilot Studio (no-code builder)

The Multi-Agent Future

Here’s where it gets really interesting: single agents are powerful, but teams of agents are transformative.

Frameworks like CrewAI, AutoGen, and LangGraph are enabling multi-agent orchestration—systems where specialized agents work together:

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%
flowchart LR
    A["Research Agent"] --> B["Writing Agent"]
    B --> C["Editing Agent"]
    C --> D["Publishing Agent"]
    E["Supervisor Agent"] --> A
    E --> B
    E --> C
    E --> D
```

Emerging patterns include:

- Supervisor agents that coordinate specialists

- Debate and consensus where agents argue to reach better conclusions

- Specialized crews with domain experts working together
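The supervisor pattern from the research, writing, editing, and publishing pipeline can be sketched as a supervisor that hands work from one specialist to the next and rejects any output that fails its check. The four "agents" here are hypothetical placeholder functions standing in for LLM-backed agents; CrewAI, AutoGen, and LangGraph each have their own APIs for this, which the sketch does not attempt to reproduce.

```python
from collections.abc import Callable


# Hypothetical specialist "agents"; in practice each would wrap an LLM call.
def research(topic: str) -> str:
    return f"notes on {topic}"


def write(notes: str) -> str:
    return f"draft based on {notes}"


def edit(draft: str) -> str:
    return draft.replace("draft", "edited article", 1)


def publish(article: str) -> str:
    return f"PUBLISHED: {article}"


PIPELINE: list[tuple[str, Callable[[str], str]]] = [
    ("research", research), ("write", write), ("edit", edit), ("publish", publish),
]


def supervise(topic: str, checks: dict[str, Callable[[str], bool]]) -> tuple[str, list[str]]:
    """Supervisor: route work through each specialist and gate every hand-off."""
    work, log = topic, []
    for name, agent in PIPELINE:
        work = agent(work)
        check = checks.get(name, bool)  # default check: output must be non-empty
        if not check(work):
            raise RuntimeError(f"supervisor rejected output of {name!r}")
        log.append(name)
    return work, log


result, steps = supervise("MCP adoption", {"edit": lambda out: "draft" not in out})
print(steps)
print(result)
```

Here the supervisor only gates a fixed sequence; the debate and crew patterns listed above would let it decide dynamically which agent acts next or send work back for revision.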

The Protocol That Could Change Everything: MCP

One of the most significant developments of late 2025 is the Model Context Protocol (MCP)—an open standard for connecting AI agents to tools and data.

Let me explain why this matters with an analogy:

Imagine if every device you own needed its own unique charging cable. You’d need a drawer full of different cables. That was the state of AI agent-tool integration before MCP.

MCP is like USB-C for AI agents—one standard protocol that lets any agent connect to any tool.

Introduced by Anthropic in November 2024 and donated in December 2025 to the newly formed Agentic AI Foundation (co-founded by Anthropic, OpenAI, and Block under the Linux Foundation), MCP is becoming the standard way for agents to interact with external systems.

Why it matters:

- 10,000+ active MCP servers as of December 2025 (Anthropic)

- Developers build tool integrations once and connect to any agent platform

- It’s like HTTP for agentic AI—a universal language for agent-tool interaction

- Major platforms (Claude, ChatGPT, Gemini) are adopting MCP support
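Under the hood, MCP messages are JSON-RPC 2.0 requests and responses: a client discovers tools with the `tools/list` method and invokes one with `tools/call`. The sketch below is a toy in-process server that handles just those two methods, to show the message shapes; the `get_weather` tool and its output are hypothetical, and a real server would also handle the `initialize` handshake, run over stdio or HTTP, and validate unknown tool names, all of which are omitted here.

```python
import json

# Hypothetical tool exposed by this toy server.
TOOLS = {
    "get_weather": {
        "description": "Get the current weather for a city",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",  # placeholder data
    }
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the tools/list or tools/call method."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]  # toy: assumes the tool exists
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": f"Method not found: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "NYC"}}}
print(handle(json.dumps(call)))
```

Because any client that speaks these message shapes can use the tool, the integration is written once on the server side, which is the "build once, connect anywhere" point above.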

My prediction for 2026: MCP becomes as fundamental to agents as REST APIs are to web applications. For a complete guide, see the MCP Introduction guide.

Agent Predictions for 2026

Based on current trajectories and analyst predictions from Gartner (Top Tech Trends 2025):

| Prediction | Timeline | Source |
|---|---|---|
| 40% of enterprise applications will incorporate task-specific AI agents | By 2026 | Gartner |
| 33% of enterprise software will include agentic AI | By 2028 | Gartner |
| 15% of daily work decisions made autonomously by agentic AI | By 2028 | Gartner |
| Agentic AI to drive 30% of enterprise application software revenue ($450B+) | By 2035 | Gartner |

Other 2026 expectations:

- Autonomous coding agents (Devin, Replit Agent, Claude Code) become standard in development workflows

- Consumer agents emerge for personal task management (booking, shopping, research)

- Agent marketplaces and ecosystems develop, similar to app stores

- Multi-agent orchestration frameworks (CrewAI, LangGraph) reach production-grade maturity

Multimodal Evolution: Native Understanding of Everything

Remember when AI could only handle text? That feels like ancient history now.

Modern LLMs are becoming natively multimodal—they don’t just handle text with image analysis bolted on. They’re trained from the ground up to understand and generate across modalities. For tips on prompting multimodal models, see the Multi-Modal Prompting guide.

What Does “Natively Multimodal” Mean?

Let me explain the difference:

- Add-on multimodal (older approach): A text model gets an image analysis module attached. The text and image parts work separately, then combine results.

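- Natively multimodal (new approach): The model learns text, images, audio, and video together from scratch, in a shared representation. It can relate what it sees in an image to what the surrounding text says directly, instead of relying on a separate module to translate the image into words first.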

Think of it like learning a second language as an adult (add-on) versus growing up bilingual (native). The bilingual person doesn’t translate—they just understand both.
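One common way to put images and text into a single model is to cut an image into fixed-size patches and treat each patch as a token in the same sequence as the words, the approach popularized by Vision Transformers (ViT). Most labs do not publish exactly how their production models do this, so treat the sketch as an illustration of the idea rather than any specific model's tokenizer: a 224×224 image with 16×16 patches becomes 196 tokens sitting alongside the text tokens. The `Token` type and the word-level text split are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class Token:
    modality: str  # "text" or "image"
    value: object  # a word here; a flattened pixel patch for images


def image_to_patch_tokens(pixels: list[list[int]], patch: int) -> list[Token]:
    """Split an H x W grayscale image into non-overlapping patch x patch tokens."""
    h, w = len(pixels), len(pixels[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            flat = [pixels[r][c] for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            tokens.append(Token("image", flat))  # a real model projects this to an embedding
    return tokens


image = [[(r + c) % 256 for c in range(224)] for r in range(224)]  # synthetic image
sequence = (
    [Token("text", w) for w in "What is in".split()]
    + image_to_patch_tokens(image, patch=16)
    + [Token("text", w) for w in "this photo ?".split()]
)
print(len(sequence), sum(t.modality == "image" for t in sequence))
```

Because image patches and words live in one sequence, the same attention layers relate them directly, which is the "growing up bilingual" half of the analogy.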

The Modality Expansion

| Modality | 2024 State | December 2025 State | 2026 Prediction |
|---|---|---|---|
| Text | Mature | Near-human quality | Indistinguishable |
| Images | Good understanding, generation | Real-time editing, precise text (GPT-4o image generation, Midjourney v7) | Perfect consistency |
| Audio | Transcription, TTS | Real-time voice conversations (GPT-4o, Gemini Live) | Emotionally-aware, personalized voices |
| Video | Early generation | Sora 2, Runway Gen-4.5, Veo 3 (up to 2 min) | 10+ minute coherent videos |
| 3D/Spatial | Experimental | Point-E, Shap-E, NVIDIA Omniverse | Interactive 3D scenes from text |

Real-Time Multimodal Interaction

GPT-4o introduced real-time voice conversations in 2024. By December 2025, all the major AI assistants support natural voice interactions.

Gemini 3 Pro (November 2025) represents the current state of the art:

| Benchmark | Gemini 3 Pro Score | What It Measures |
|---|---|---|
| MMMU-Pro | 81% | Multimodal understanding across subjects |
| Video-MMMU | 87.6% | Video understanding and reasoning |
| GPQA Diamond | 91.9% | Graduate-level science questions |
| Context Window | 1M tokens | ~750,000 words of context |

Source: Google Blog

You can upload an entire book’s worth of documents, images, and audio, and have a coherent conversation across all of it.

The vision we’re heading toward: AI that sees, hears, and speaks as naturally as humans—but faster and with access to vast knowledge.

Video: The Next Frontier

2025 saw major leaps in video AI:

| Model | Company | Key Feature | Context |
|---|---|---|---|
| Sora 2 | OpenAI | Flagship video model | Released September 2025 |
| Runway Gen-4.5 | Runway | Professional video editing | Production-ready |
| Veo 3 | Google DeepMind | 4K video with audio | Released May 2025 |
| Kling 2.6 | Kuaishou | Competitive Chinese video AI | 2-minute clips |

The capability gap between AI-generated and human-created video is narrowing rapidly. By 2026, expect:

- Real-time video analysis in agent workflows

- AI directors that can create short films from text descriptions

- Video that’s increasingly difficult to distinguish from real footage

The Unified Multimodal Future

We’re moving toward single models that handle all modalities natively. Training on mixed-modality data from the start creates richer representations and more natural interactions.

Applications span:

- Creative tools: Generate any media type from any other (image → video → 3D)

- Accessibility: Real-time translation, audio description, sign language generation

- Education: Interactive, multi-sensory learning experiences

- Healthcare: Analyze medical images, audio, and patient notes together

- E-commerce: Virtual try-on, 3D product visualization, voice shopping

The challenge remains balancing quality across modalities versus specialization. But the trajectory is clear: unified multimodal AI is the future.

Physical AI: LLMs Meet the Real World

AI is moving beyond screens into the physical world. This is Physical AI—the convergence of large language models, world models, and robotics.

NVIDIA’s Jensen Huang calls it one of the most significant trends of 2025. The International Federation of Robotics agrees, highlighting Physical AI as the breakthrough trend enabling robots to handle complex, non-repetitive tasks (IFR).

What Is Physical AI?

Let me explain the concept:

Traditional robots follow precise, pre-programmed instructions: “Move arm 30 degrees, grip object, lift 10 cm.” They fail when anything unexpected happens.

Physical AI robots understand goals in natural language: “Pick up the mug and put it in the dishwasher.” They figure out the movements themselves, adapting to different mugs, positions, and obstacles.

This is LLMs meeting robotics—and it’s transforming what’s possible.

World Models: AI That Understands Physics

One of the most fascinating developments is the emergence of world models—internal representations that let AI simulate and predict real-world dynamics.

Think about how you can mentally simulate what will happen if you push a cup off a table. You have an internal model of physics. Now AI is developing similar capabilities.

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%
flowchart LR
    A["LLM<br/>(Language Understanding)"] --> B["World Model<br/>(Physics Simulation)"]
    B --> C["Action Planning"]
    C --> D["Robot Execution"]
    D --> E["Sensor Feedback"]
    E --> A
```

NVIDIA’s Cosmos framework (2025) is building world foundation models that make AI physically aware, helping robots understand 3D spaces and physics-based interactions. It’s trained on 20 million hours of video to understand how the physical world works (NVIDIA).

Google’s Gemini Robotics On-Device (mid-2025) runs sophisticated vision-language-action (VLA) models directly on robotic platforms, offline. This is a big deal: no network round-trip latency and no internet dependency.
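The planning, execution, and feedback loop resembles model-predictive control: the robot imagines the outcome of candidate actions with its world model, executes only the first action of the best plan, then re-plans from what its sensors report. The sketch below is a deliberately tiny one-dimensional illustration, not how Cosmos or Gemini Robotics work: the "world model" is a hypothetical function that underestimates how far each action moves the robot, and re-planning from measured positions is what keeps that error from accumulating.

```python
from itertools import product

ACTIONS = (-1.0, 0.0, 1.0)  # move left, stay, move right


def world_model(pos: float, action: float) -> float:
    """Hypothetical learned dynamics: slightly underestimates each move (0.9 vs 1.0)."""
    return pos + 0.9 * action


def real_world(pos: float, action: float) -> float:
    """True dynamics the robot actually experiences; the planner never calls this."""
    return pos + 1.0 * action


def plan(pos: float, goal: float, horizon: int = 3) -> float:
    """Imagine every action sequence with the world model; return the best first action."""
    def predicted_cost(seq: tuple[float, ...]) -> float:
        p, cost = pos, 0.0
        for a in seq:
            p = world_model(p, a)  # simulate instead of acting
            cost += abs(goal - p)  # favor trajectories that get close early
        return cost

    best = min(product(ACTIONS, repeat=horizon), key=predicted_cost)
    return best[0]  # execute only the first step, then re-plan


pos, goal = 0.0, 5.0
for _ in range(10):
    action = plan(pos, goal)       # action planning with the world model
    pos = real_world(pos, action)  # robot execution
    # sensor feedback: the next iteration plans from the measured position
print(pos)
```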

LLMs in Robotics

Large Language Models are providing “higher-level intelligence” to robots:

- Natural language commands → robot actions

- Task decomposition: Complex goals broken into executable steps

- Generalization: Learn one task, apply to similar situations

- Explanation: Robots can tell you what they’re doing and why
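Task decomposition is where the language model and the robot meet: the LLM proposes a sequence of steps, and the controller should only run steps it actually knows how to execute. A minimal sketch of that safety check, assuming the model has been prompted to answer in JSON; the skill names, their required arguments, and the model output below are all hypothetical.

```python
import json

# Hypothetical skill library: primitives the robot controller can execute,
# each with the arguments it requires.
SKILLS = {
    "locate": {"object"},
    "grasp": {"object"},
    "move_to": {"location"},
    "open": {"object"},
    "place": {"object", "location"},
}

# Made-up LLM output for "Pick up the mug and put it in the dishwasher".
llm_output = """
[
  {"skill": "locate", "args": {"object": "mug"}},
  {"skill": "grasp", "args": {"object": "mug"}},
  {"skill": "move_to", "args": {"location": "dishwasher"}},
  {"skill": "open", "args": {"object": "dishwasher"}},
  {"skill": "place", "args": {"object": "mug", "location": "dishwasher_rack"}}
]
"""


def validate_plan(raw: str) -> list[str]:
    """Return a list of problems; an empty list means every step is executable."""
    try:
        steps = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    problems = []
    for i, step in enumerate(steps):
        skill = step.get("skill")
        if skill not in SKILLS:
            problems.append(f"step {i}: unknown skill {skill!r}")
            continue
        missing = SKILLS[skill] - set(step.get("args", {}))
        if missing:
            problems.append(f"step {i}: {skill} missing {sorted(missing)}")
    return problems


print(validate_plan(llm_output) or "plan OK")
```

Rejected plans can be sent back to the language model with the problem list, so the robot never executes a step outside its skill library.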

Use cases expanding in:

- Warehouses and logistics

- Healthcare and elder care

- Manufacturing

- Home assistance

Humanoid Robots: The 2025 Reality

The humanoid robot race is accelerating, with major tech companies racing to deploy human-form robots:

| Company | Robot | Key Features | Status (Dec 2025) |
|---|---|---|---|
| Figure AI | Figure 02 | In-house Helix vision-language-action model (replacing its earlier OpenAI collaboration), multi-task learning | BMW factory deployment |
| Tesla | Optimus Gen 3 | Manufacturing focus, home tasks | Pilot in Tesla factories |
| Boston Dynamics | Atlas + AI | Advanced mobility, parkour capability | Research partnerships |
| 1X Technologies | NEO | Home assistance, safety focus | Norway pilot program |
| Agility Robotics | Digit | Warehouse logistics | Amazon warehouse testing |

December 2025 reality: Single-purpose humanoids are being deployed in automotive and warehousing settings for specific repetitive tasks like moving boxes and parts assembly.

2026 prediction: General-purpose humanoid pilots in controlled environments, with limited consumer availability by late 2026.

Market Predictions

The market for physical AI and robotics is expected to grow dramatically:

| Prediction | Timeline | Source |
|---|---|---|
| Humanoid robot market reaches $38B | By 2035 | McKinsey |
| ~1 million humanoid robots deployed | By 2030 | Goldman Sachs |
| Physical AI market reaches $79B | By 2030 | Fortune Business Insights |
| 620,000 industrial robots installed annually | 2025 | IFR |

The convergence is happening: digital agents are meeting physical robots, creating AI that can help in both digital and physical realms.

💡 The Big Picture: In 2025, we’re seeing AI move from purely digital (answering questions, writing code) to physically embodied (moving boxes, assembling products). This is a massive expansion of what AI can do for us.

The AGI Question: Timelines, Debates, and Reality

Let’s address the elephant in the room: When will we achieve Artificial General Intelligence?

First, let me explain what AGI actually means—and why it’s so controversial.

What Is AGI?

AGI (Artificial General Intelligence) is AI that can match or exceed human cognitive abilities across all domains—not just language or games, but truly general-purpose intelligence that can learn any task a human can.

To understand why current AI isn’t AGI, consider this comparison:

| Capability | Current LLMs (GPT-5.2, Claude 4.5) | Hypothetical AGI |
|---|---|---|
| Writing essays | ✅ Excellent | ✅ Excellent |
| Coding | ✅ Very good (80.9% on SWE-bench Verified for Claude Opus 4.5) | ✅ At least expert human level |
| Learning from conversation | ⚠️ Limited: memory features exist, but the model itself doesn’t keep learning | ✅ Yes |
| Genuine understanding | ❓ Debated | ✅ Yes |
| Physical world reasoning | ⚠️ Limited | ✅ Full |
| Novel scientific discovery | ❌ Not yet demonstrated | ✅ Yes |
| Self-improvement | ⚠️ Emerging | ✅ Yes |

Current LLMs, despite their impressive capabilities, are narrow but deep. They’re excellent at language tasks but still struggle with novel reasoning that goes beyond their training data.

The Expert Prediction Landscape (December 2025)

Figure: AGI Timeline Predictions, when experts think we'll achieve AGI as of December 2025 (sources: TIME Magazine, Metaculus, 80,000 Hours)

⚠️ Important: AGI predictions vary widely and carry significant uncertainty. These represent predictions, not guarantees. The definition of AGI itself remains debated.

The predictions span a wide range, and importantly, timelines have been shortening over the past year:

| Expert/Source | Prediction | Quote/Notes | Source |
|---|---|---|---|
| Dario Amodei (Anthropic CEO) | 2026-2027 | “Human-level AI within 2-3 years” (from early 2024) | TIME Magazine |
| Shane Legg (DeepMind Co-founder) | 50% by 2028 | Long-held prediction, reaffirmed 2024 | BBC Interview |
| Sam Altman (OpenAI CEO) | 2028-2029 | “4-5 years” (from 2024) | Davos 2024 |
| Metaculus Forecasters | 25% by 2027, 50% by 2031 | Aggregate of thousands of forecasters | Metaculus |
| AI Researcher Survey | 50% by 2047 | 2,700+ researchers surveyed on machines outperforming humans at every task | AI Impacts 2024 |

Why Timelines Are Shortening

Several factors are driving more aggressive predictions:

- Rapid capability gains in reasoning models (o1 → o3)

- Emergent abilities appearing at scale

- Test-time compute unlocking new performance levels

- Multi-agent systems showing collective intelligence

- Investment levels unprecedented ($100B+ annually in AI infrastructure)

The Skeptical Perspective

Not everyone is convinced. Valid critiques include:

- LLMs are sophisticated pattern matchers, not true reasoners

- No genuine understanding—just statistical prediction

- The hallucination problem remains fundamental

- Physical world grounding is still limited

- Consciousness and genuine understanding may require fundamentally different approaches

What “AGI” Might Actually Look Like

Rather than a singular moment, AGI will likely be a gradual crossing of thresholds:

- Autonomous scientific research (AI that makes novel discoveries)

- Self-improving systems (AI that improves its own code and training)

- General task completion (any cognitive task a human can do)

OpenAI reportedly tracks progress internally on a five-level scale, from L1 (chatbots) to L5 (organizations). As of December 2025, we may be at L2 (reasoners) moving toward L3 (agents).

Preparing for an AGI Future

Regardless of exact timing, preparation matters:

- Focus on uniquely human skills: creativity, empathy, ethical judgment

- Learn to collaborate with increasingly capable AI

- Understand AI capabilities and limitations

- Stay informed about safety and governance developments

- The timeline matters less than preparation

For more on AI safety considerations, see the Understanding AI Safety, Ethics, and Limitations guide.

Regulation and Governance: Shaping AI’s Future

AI development doesn’t happen in a vacuum. Regulatory frameworks are increasingly shaping what gets built and how.

The EU AI Act: Leading Global Regulation

The EU AI Act is the world’s first comprehensive AI regulation, and it’s having global impact.

Figure: EU AI Act Timeline, key regulatory milestones (sources: EU AI Act, European Commission)

Key milestones already passed:

- August 1, 2024: Act entered into force

- February 2, 2025: Prohibited AI practices banned (e.g., social scoring and, with narrow exceptions, real-time remote biometric identification in public spaces for law enforcement)

- August 2, 2025: GPAI (General Purpose AI) rules became applicable

Coming up:

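- August 2, 2026: Most remaining obligations apply, including those for high-risk systems listed in Annex III

- August 2, 2027: Deadline for high-risk systems embedded in regulated products (Annex I) and for GPAI models placed on the market before August 2025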

Requirements for LLM Providers

If you’re building or deploying LLMs in Europe, here’s what you need to know:

| Requirement | What It Means |
|---|---|
| Transparency | Disclose when content is AI-generated |
| Technical Documentation | Detailed model documentation required |
| Copyright | Maintain a copyright-compliance policy and publish a summary of the content used for training |
| Systemic Risk Assessment | Extra obligations for frontier models |
| AI Literacy | Train employees who use AI |
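The "extra obligations for frontier models" hinge on a compute threshold: the Act presumes a general-purpose AI model has systemic risk when the cumulative compute used to train it exceeds 10^25 floating-point operations. A back-of-the-envelope check is sketched below, assuming the common 6 × parameters × tokens approximation for dense transformers. It is an orientation estimate only, not a compliance test, and the Commission can also designate models based on other criteria; the two example configurations are illustrative.

```python
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25  # EU AI Act presumption for GPAI models


def estimated_training_flops(params: float, tokens: float) -> float:
    """Rough dense-transformer estimate: ~6 FLOPs per parameter per training token."""
    return 6 * params * tokens


def presumed_systemic_risk(params: float, tokens: float) -> bool:
    return estimated_training_flops(params, tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


for name, n, d in [
    ("70B params, 1.4T tokens", 70e9, 1.4e12),
    ("405B params, 15.6T tokens", 405e9, 15.6e12),
]:
    flops = estimated_training_flops(n, d)
    print(f"{name}: {flops:.1e} FLOPs, presumed systemic risk: {presumed_systemic_risk(n, d)}")
```

The first configuration lands around 6e23 FLOPs, well under the line; the second is near 4e25, above it.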

US Regulatory Landscape

The US approach remains more fragmented:

- Executive Order 14110 on AI (October 2023): Set reporting requirements for frontier models; revoked in January 2025

- State-level action: California AI safety proposals, others following

- Congressional efforts: Various AI bills in progress

- December 2025 status: Industry-driven self-regulation predominates

OpenAI is advocating for specific legislation, including an “AI Companion Chatbot Safety Act” proposal addressing age verification and parental controls.

Global AI Governance Trends

- China: Comprehensive AI regulations with domestic model requirements

- UK: Pro-innovation, lighter-touch approach

- AI Sovereignty: Countries building national AI capabilities

- Emerging norm: Transparency and safety requirements becoming standard

How Regulation Is Shaping Development

The impact is real:

- Safety teams expanded at major AI labs

- Constitutional AI and alignment research prioritized

- Increased focus on explainability and audit trails

- Some capability development delayed for safety review

- Open vs. closed model debates intensifying

The November-December 2025 Model Explosion

Let me put the current moment in perspective. The past 30 days have seen more major model releases than some entire years before:

30 Days of AI Releases: major model launches, Nov-Dec 2025

- Gemini 3 Pro (Google)

- Claude Opus 4.5 (Anthropic)

- DeepSeek V3.2 (DeepSeek)

- Mistral Large 3 (Mistral AI)

- GPT-5.2 (OpenAI)

- Nemotron 3 Nano (NVIDIA)

🔥 Historic Pace: More frontier models were released in November-December 2025 than in all of 2023 combined.

Sources: OpenAI • Anthropic • Google DeepMind

This isn’t sustainable—or is it? Investment in AI infrastructure has never been higher. The race between OpenAI, Anthropic, Google, Meta, xAI, and Chinese labs like DeepSeek is intensifying.

What’s driving this acceleration?

- Competitive pressure: No lab wants to fall behind

- Investor expectations: Billions invested, results expected

- Technical momentum: Each breakthrough enables the next

- Talent concentration: The best researchers are clustered at a few organizations

- Regulatory windows: Getting capabilities out before potential restrictions

Staying Current: Your Guide to the AI Fire Hose

With the pace this fast, how do you stay informed without drowning in information? Here’s my approach.

The Strategy: Curated Sources + Systematic Exploration

Don’t try to read everything. Instead, follow a few high-quality sources and actively experiment.

Essential Resources for AI News

| Resource | Type | Best For | Frequency |
|---|---|---|---|
| The Rundown AI | Newsletter | Daily curated news | Daily |
| AI Tidbits | Newsletter | Weekly summary | Weekly |
| Stratechery | Analysis | Strategic implications | Weekly |
| AI Explained (YouTube) | Video | Deep dives on models | Weekly |
| Hugging Face Blog | Technical | Open source updates | Weekly |
| arXiv | Research | Latest papers | Daily |

Following the Right People

On Twitter/X:

- Sam Altman (@sama): OpenAI direction

- Dario Amodei (@DarioAmodei): Anthropic and safety

- Yann LeCun (@ylecun): Meta AI, critical perspective

- Andrej Karpathy (@karpathy): Technical education

- Jim Fan (@DrJimFan): NVIDIA, embodied AI

- Simon Willison (@simonw): Practical applications

Hands-On Learning Strategies

- Try every major model: ChatGPT, Claude, Gemini, Perplexity, DeepSeek

- Build something weekly: Small projects to test new features

- Run models locally: Ollama, LM Studio for understanding

- Follow release notes: Each update brings new capabilities

- Join communities: Discord servers, Reddit r/LocalLLaMA, r/ChatGPT
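Running a model locally also makes it easy to script small experiments. As a sketch, assuming Ollama is installed, its server is running on the default port 11434, and a model has been pulled (`llama3.2` below is only an example name), its REST endpoint `/api/generate` can be called with nothing but the Python standard library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a non-streaming /api/generate request (the model name is an assumption)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(OLLAMA_URL, data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


def generate(prompt: str, model: str = "llama3.2") -> str:
    """Send the request to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=60) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("In one sentence, what is speculative decoding?"))
    except OSError as exc:  # URLError is an OSError; raised when no server is running
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Swapping the `model` argument is a quick way to compare how different open models answer the same prompt.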

For guidance on prompt engineering, see the Prompt Engineering Fundamentals guide.

What to Ignore

- Hype cycles and exaggerated claims

- “AI will replace all jobs tomorrow” headlines

- Models without clear benchmarks

- Vendors selling solutions without substance

2026 Predictions: What’s Coming Next

Based on current trajectories, research, and expert analysis, here are my predictions for 2026:


Model Predictions

- GPT-5.5 or GPT-6: Expected mid-2026 with significant reasoning improvements

- Claude 5: Enhanced agentic capabilities, potentially a more capable successor to computer use

- Gemini 4: Deeper Google ecosystem integration, massive context

- Llama 5: Meta continues its open-source push, with further mixture-of-experts (MoE) advances

- Open-source gap narrows: Approaching parity for many tasks

Capability Predictions

- Context windows: 2M-10M tokens becoming standard for frontier models

- Reasoning: Near-expert level on complex STEM problems

- Agents: Reliable multi-step task completion in production

- Multimodal: Seamless cross-modality generation and understanding

- Personalization: Models that adapt to individual users over time

Industry Predictions

- Enterprise AI spending: Continued double-digit growth

- Agent marketplaces: Ecosystems for pre-built agents emerge

- AI-native companies: Organizations built around AI capabilities

- Physical AI: Significant deployment in warehouses and factories

- Consolidation: Smaller AI labs acquired by larger players

Societal Predictions

- AI literacy: Becomes essential across all roles

- Regulation: More comprehensive frameworks globally

- Job transition: Accelerating need for reskilling

- AI companions: Mainstream adoption of personal AI assistants

Wild Cards

- Scientific breakthrough: AI discovers something transformative

- Safety incident: Major AI failure prompts regulatory response

- Hardware breakthrough: New chip architectures change the game

- Open-source surprise: Open models exceed proprietary capabilities

Embracing the AI Future

Let me wrap up with what I think actually matters.

Key Takeaways

- Scaling is evolving: From just bigger models to smarter inference and specialized systems

- Agents are here: Autonomous AI is becoming a reality in 2025-2026

- Multimodal is the default: Future LLMs will seamlessly handle all modalities

- Physical AI emerging: LLMs are extending into the real world

- AGI is uncertain: Predictions vary, but capabilities are advancing rapidly

- Regulation is coming: The EU AI Act and other global frameworks will shape development

- Adaptation is key: Skills, mindsets, and organizations must evolve

Your Action Items

Based on everything we’ve covered, here’s what I’d recommend:

- Experiment now: Try the latest agents, multimodal features, and tools

- Build skills: Learn prompt engineering, understand AI capabilities and limitations

- Stay informed: Follow curated resources, not just hype

- Think strategically: How can AI enhance your work, not replace it?

- Engage ethically: Consider the implications of the AI you use and build

A Final Perspective

We are living through one of the most significant technological transformations in history. The AI capabilities we’ll see in December 2026 will likely make today look like the early days, even though December 2025 already feels remarkably advanced.

The next few years will be defined by how we harness these capabilities. The future of AI is not predetermined—it’s being built now, by researchers, developers, policymakers, and users like you.

Understanding these trends helps you participate in shaping that future. Whether you’re building with AI, using it for work, or simply trying to stay informed, your engagement matters.

The question isn’t whether AI will transform our world—it’s how, and who will guide that transformation.

What’s Next in This Series

This concludes our 24-article journey through LLMs and AI—from “What Are Large Language Models?” to understanding where we’re headed.

If you want to revisit earlier concepts or explore new areas:

- What Are Large Language Models? A Beginner’s Guide - Start from the basics

- The Evolution of AI - From Rule-Based Systems to GPT-5 - Historical context

- AI Agents: The Breakout Year of Autonomous AI - Deep dive on agents

- Building AI-Powered Workflows - Practical implementation

Thank you for joining me on this learning journey. The future is being written—let’s make sure we’re part of the story.

Sources and References:

Industry Reports:

- McKinsey State of AI 2025 - Enterprise adoption statistics

- Gartner Top Technology Trends 2025 - Agentic AI predictions

- Business Research Company - AI Agents Market - Market size data

- G2 2025 AI Report - Production deployment statistics

Model Releases (November-December 2025):

- OpenAI GPT-5.2 Announcement - December 11, 2025

- Anthropic Claude Opus 4.5 - November 24, 2025

- Google Gemini 3 Pro - November 18, 2025

- DeepSeek V3.2 - December 1, 2025

Technical Resources:

- Anthropic MCP Documentation - Model Context Protocol

- OpenAI Research - Reasoning models and test-time compute

- DeepMind Chinchilla Paper - Scaling law research

Regulation:

- EU AI Act Official Portal - Regulatory timeline

- European Commission AI Policy

Forecasting and Predictions:

- Metaculus AGI Forecasts - Aggregate predictions

- AI Impacts - Researcher Survey - Expert opinion surveys

- TIME AI 100 Leaders - Industry leader perspectives

Robotics and Physical AI:

- International Federation of Robotics - Physical AI trends

- NVIDIA Cosmos - World model research

- Goldman Sachs Humanoid Report - Market predictions

Last updated: December 15, 2025