Bridging the Knowledge Gap

Large Language Models have a fundamental limitation: their knowledge is frozen in time. A model trained until December 2024 cannot know about an event that happened yesterday, nor can it know about your company’s private data.

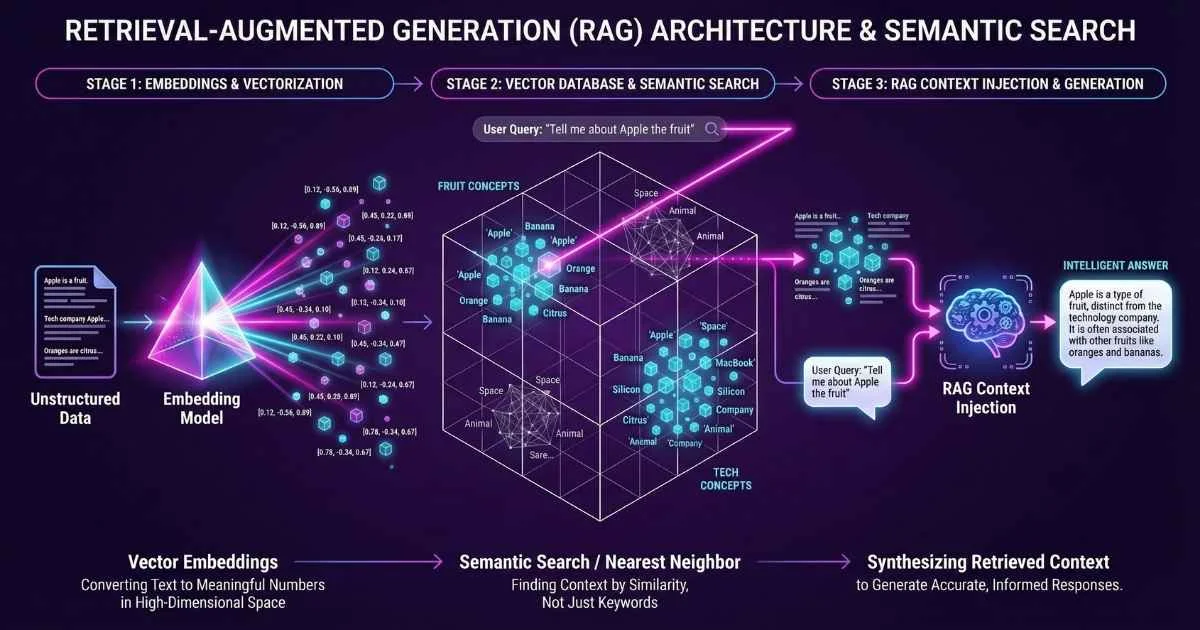

RAG (Retrieval-Augmented Generation) is the bridge between the model’s reasoning capabilities and your real-time data.

Instead of retraining a model (which is expensive and slow), RAG allows you to retrieve relevant information from a database and “feed” it to the LLM along with your question. This enables the AI to answer using your private data with high accuracy and visible citations.

This guide serves as a technical primer for the RAG architecture: it. You connect your own documents, your own knowledge base, and suddenly the AI stops hallucinating and starts giving accurate, source-backed answers.

In this guide, I’m going to break down everything you need to know about RAG, embeddings, and vector databases—the technology stack that’s powering the next generation of AI applications. By the end, you’ll understand how to build AI that knows your data. For foundational knowledge about LLMs, see the How LLMs Are Trained guide.

70%+

Enterprises using RAG

$2.65B

Vector DB market 2025

70-90%

Hallucination reduction

3.7x

ROI per $1 invested

Sources: Deloitte Gen AI Survey • MarketsandMarkets • Makebot RAG Stats

What We’re Building Toward

Let me give you the big picture first. By the end of this article, you’ll understand:

- Embeddings: How text gets transformed into searchable “meaning coordinates”

- Vector Databases: The specialized databases that store and search these embeddings

- RAG: How retrieval and generation combine to create accurate, grounded AI

- Practical Implementation: Code you can run today to build your first RAG system

- Production Considerations: What actually matters when you scale

Think of it like this: if LLMs are brilliant but forgetful experts, RAG is the system that hands them the right document at exactly the right moment.

Part 1: Embeddings – Turning Meaning Into Math

The Problem With Keywords

Before we had embeddings, search worked like this: you typed “car maintenance tips,” and the system looked for documents containing those exact words. If someone wrote about “automobile servicing advice,” you’d miss it completely—even though it’s about the same thing.

This is called the lexical gap, and it’s why traditional search often frustrates us. Human language is full of synonyms, paraphrases, and different ways of expressing the same idea.

💡 Real Example: If your company calls customers “members” but someone searches for “customer loyalty program,” keyword search fails completely. Embedding search understands they’re the same thing.

What Are Embeddings?

Embeddings solve this problem by capturing meaning instead of words.

Here’s the simplest way to think about it:

Imagine every possible meaning that could be expressed in language as a location in a massive map. An embedding is the “GPS coordinate” for a piece of text on that map.

- Texts with similar meanings have coordinates close together

- Texts with different meanings have coordinates far apart

Technically, an embedding is a list of numbers (called a “vector”)—typically 512 to 3072 of them. Each number captures some aspect of meaning that emerged from training on billions of text examples. For more on tokens and context windows, see the Tokens, Context Windows & Parameters guide.

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#6366f1', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#4f46e5', 'lineColor': '#818cf8', 'fontSize': '16px' }}}%%

flowchart LR

A["Text: 'The cat sat on the mat'"] --> B["Embedding Model"]

B --> C["Vector: [0.23, -0.41, 0.87, ...]"]

C --> D["1536 dimensions capturing meaning"]The GPS Analogy in Action:

- “King” and “Queen” are close together (both royalty, similar contexts)

- “King” and “Pizza” are far apart (completely unrelated concepts)

- “Car maintenance” and “Automobile servicing” are right next to each other (same meaning, different words)

🎓 Try This Now: Go to OpenAI’s Tokenizer and paste a few sentences. While this shows tokens (not embeddings), it helps visualize how AI breaks down text before processing.

The Magic of Semantic Math

Here’s something that still amazes me: you can do math on meanings.

The famous example: King - Man + Woman ≈ Queen

The embedding for “king” minus the embedding for “man” plus the embedding for “woman” gives you a vector very close to the embedding for “queen.” The model has somehow learned that “king” and “queen” have the same relationship as “man” and “woman.”

This isn’t programmed—it emerges from seeing billions of examples of how these words are used together.

Types of Embeddings

Different embedding models are optimized for different purposes:

| Type | What It Encodes | Best For |

|---|---|---|

| Word Embeddings | Individual words | Basic NLP, word similarity |

| Sentence Embeddings | Full sentences | Semantic search, Q&A systems |

| Document Embeddings | Long-form content | Document classification, retrieval |

| Multi-modal Embeddings | Text + Images | Cross-modal search, vision AI |

For RAG, we typically use sentence or document embeddings—we want to capture the meaning of chunks of text, not individual words.

Choosing an Embedding Model (December 2025)

The embedding model you choose has a massive impact on your RAG system’s quality. This choice often matters more than which LLM you use!

Embedding Models Comparison

Leading models ranked by retrieval relevance (December 2025)

🎯 Key Insight: Voyage-3.5 leads in retrieval relevance with 9.7% improvement over OpenAI's text-embedding-3-large on key benchmarks. For 100+ languages, BGE-M3 is the best open-source option.

Sources: MTEB Leaderboard • Voyage AI Benchmarks • Cohere Embed v4

The December 2025 Landscape:

According to benchmarks on the MTEB Leaderboard, here’s how the top models compare:

| Model | Provider | nDCG@10 | Context | Dimension | Special Feature |

|---|---|---|---|---|---|

| voyage-3.5 | Voyage AI | 0.845+ | 32K tokens | 256-2048 | Latest flagship (May 2025) |

| voyage-3-large | Voyage AI | 0.837 | 32K tokens | 256-2048 | Proven retrieval quality |

| text-embedding-3-large | OpenAI | 0.811 | 8K tokens | 256-3072 | Flexible dimensions |

| BGE-M3 | BAAI | 0.753 | 8K tokens | 1024 | Open-source, 100+ languages |

| Embed v4 | Cohere | 65.2 MTEB | 128K tokens | 256-1024 | Multimodal (text + images) |

Source: Agentset November 2025 Benchmarks, Voyage AI

My recommendations by use case:

-

For general production use: OpenAI

text-embedding-3-large— The most balanced option with 64.6% MTEB score. Supports flexible dimensions (256-3072) so you can trade off storage for quality. -

For maximum retrieval quality: Voyage AI

voyage-3.5(released May 2025) — Latest flagship model with best-in-class performance. Also considervoyage-3-large(January 2025) which shows 9.74% improvement over OpenAI text-embedding-3-large and 20.71% over Cohere. Worth the premium for precision-critical applications. -

For privacy/self-hosted: BAAI

BGE-M3— The best open-source option, supports 100+ languages, offers dense + sparse + multi-vector retrieval in one model. No data leaves your servers. -

For very long documents: Cohere

Embed v4.0(released April 15, 2025) — Revolutionary 128K token context window means you can embed entire books without chunking. Also the first major multimodal embedding model (text + images in same vector space). -

For multimodal embeddings (text + images): Voyage AI

voyage-multimodal-3.5or CohereEmbed v4.0— Both can process interleaved text and visual data (images, PDFs, videos) in the same vector space. Essential for document analysis with charts, diagrams, and visual content. -

For specialized domains: Voyage AI offers domain-specific models including

voyage-code-3(code/technical docs),voyage-finance-2(financial documents), andvoyage-law-2(legal contracts) — Outperform general models in their respective domains. -

For multilingual with budget constraints: Voyage AI

voyage-multilingual-2— Outperforms OpenAI and Cohere multilingual models in French, German, Japanese, Spanish, and Korean.

💡 Pro Tip: The embedding model matters more than the LLM for RAG quality. According to AI Multiple research, a mediocre LLM with excellent retrieval often beats a great LLM with poor retrieval. Invest your optimization time here first.

📰 December 2025 Update: MongoDB acquired Voyage AI in February 2025 and is integrating their embedding models into MongoDB Atlas for enhanced semantic search and RAG applications (currently in private preview).

Part 2: Vector Databases – The AI Memory Infrastructure

Why Regular Databases Don’t Work

You might be wondering: “Can’t I just store embeddings in PostgreSQL or MongoDB?”

Technically, yes. But when you have millions of embeddings and need to find the most similar ones in milliseconds, traditional databases fall apart.

The problem is the math. To find similar embeddings, you need to compare your query embedding to every stored embedding. That’s called a “brute force” search, and it’s O(n)—meaning if you have 1 billion vectors, you need 1 billion comparisons.

That’s where vector databases come in.

How Vector Databases Work

Vector databases use clever indexing algorithms to skip most comparisons while still finding the most similar vectors with high accuracy.

The most common algorithm is HNSW (Hierarchical Navigable Small World). Without diving into the math, it builds a multi-layer graph structure that lets you “jump” toward similar vectors quickly, then fine-tune your search in the local neighborhood.

The result: 99%+ accuracy with 1000x speed improvement over brute force.

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#10b981', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#059669', 'lineColor': '#34d399', 'fontSize': '16px' }}}%%

flowchart TD

A["Query Embedding"] --> B["HNSW Index"]

B --> C["Layer 3: Coarse Navigation"]

C --> D["Layer 2: Medium Navigation"]

D --> E["Layer 1: Fine Navigation"]

E --> F["Top-K Most Similar Vectors"]Key Concepts You Need to Know

Before we dive into specific databases, here are the terms you’ll encounter:

| Concept | What It Means |

|---|---|

| Dimensions | Size of your vectors (512, 1536, 3072). Must match your embedding model. |

| Index | The data structure enabling fast search. HNSW and IVF are most common. |

| Distance Metric | How similarity is measured. Cosine similarity is standard for text. |

| Namespace/Collection | Logical grouping of vectors. Like tables in SQL. |

| Metadata | Extra data attached to each vector (source file, page number, date). |

| Hybrid Search | Combining vector similarity with keyword matching (BM25). |

The Major Players (December 2025)

The vector database market is booming. According to MarketsandMarkets, the market is valued at $2.65 billion in 2025 and growing at 27.5% CAGR through 2030. Major funding rounds in 2024-2025 include Weaviate ($40M Series B, February 2024) and Pinecone’s continued expansion.

Let me introduce you to the vector databases that matter:

Vector Database Comparison

Click a database to see details (December 2025)

Sources: Pinecone Pricing • Weaviate Cloud • Qdrant Cloud

Pinecone: The Managed Champion

Pinecone is the go-to choice for teams that want zero operational overhead. It’s fully managed, automatically scales, and offers excellent developer experience.

December 2025 updates:

- Dedicated Read Nodes (DRNs) for preventing cold starts and ensuring predictable low-latency performance

- Bulk Data Operations (October 2025): update, delete, and fetch by metadata for simplified data management

- Hosted inference models for embedding generation

- Serverless architecture with pay-per-use pricing

Pricing: Free tier (2GB, 1M reads/month), Standard from $50/month

Best for: Teams wanting production-ready infrastructure without DevOps burden

Weaviate: The Hybrid Search Expert

Weaviate shines when you need to combine semantic search with traditional keyword matching. Its native hybrid search is best-in-class.

December 2025 updates:

- Weaviate 1.35 (December 29, 2025): Object TTL, zstd compression, flat index with RQ quantization

- Weaviate Agents: AI-driven automation tools for complex data workflows

- Multimodal support with Weaviate Embeddings

- Native BM25 + vector hybrid search in one query

- GraphQL-style API for complex queries

- 99.5% uptime on shared cloud

Pricing: Open-source free, Shared Cloud from $45/month

Best for: Complex queries combining semantic meaning + exact keyword matches

Qdrant: The Performance King

Qdrant is written in Rust and consistently tops performance benchmarks. If latency matters, Qdrant delivers.

December 2025 updates:

- Qdrant 1.16 (November 2025): Tiered Multitenancy and Disk-Efficient Vector Search

- Qdrant Cloud Inference: Unified embedding generation and vector search workflow

- Qdrant Edge: On-device retrieval for low-latency, deterministic search without servers

- Advanced retrieval with explicit control over retrieval quality

- Hybrid cloud option (connect your infrastructure to managed control plane)

- Quantization for 4x memory reduction

Pricing: Open-source free, Cloud free tier (1GB), paid from $25/month

Best for: Performance-critical applications, teams comfortable with more hands-on setup

Chroma: The Developer’s Friend

Chroma is where most people should start. It has the simplest API, runs locally with zero setup, and is perfect for prototyping.

December 2025 updates:

- Customer-Managed Encryption Keys (December 2025)

- Chroma Web Sync (November 2025) for GitHub repo indexing and web content

- Sparse vector search (October 2025)

- wal3: Improved Write-Ahead Log (September 2025)

- Collection Forking (August 2025) for dataset versioning and A/B testing

- 70% throughput improvement (July 2025)

Pricing: Open-source free, Chroma Cloud usage-based

Best for: Rapid prototyping, small-to-medium datasets, learning RAG

Milvus/Zilliz: The Enterprise Scale

Milvus is proven at billion-vector scale. If you’re at Meta or Uber scale, this is what you use.

Key strengths:

- Handles billions of vectors

- Kubernetes-native architecture

- Intelligent tiered storage (Milvus 2.6)

- Fine-grained performance tuning

Pricing: Open-source free, Zilliz Cloud from $99/month

Best for: Massive datasets, teams with strong DevOps capabilities

Which One Should You Choose?

Here’s my decision framework:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#f59e0b', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#d97706', 'lineColor': '#fbbf24', 'fontSize': '16px' }}}%%

flowchart TD

A["Starting a RAG project?"] --> B{What's your priority?}

B -->|Learning/Prototyping| C["Chroma — Simplest setup"]

B -->|Production, zero ops| D["Pinecone — Fully managed"]

B -->|Need hybrid search| E["Weaviate — Best hybrid"]

B -->|Maximum performance| F["Qdrant — Fastest"]

B -->|Billion-scale data| G["Milvus/Zilliz — Proven scale"]

B -->|Already using Postgres| H["pgvector — Simpler stack"]Part 3: RAG Fundamentals – How It All Works Together

Now that you understand embeddings and vector databases, let’s see how they combine to create RAG.

The Core Insight

Here’s the fundamental problem: LLMs know a lot, but they don’t know your data.

- They have knowledge cutoff dates (can’t answer about recent events)

- They’ve never seen your internal documents

- They can’t access your product database or customer records

- When they don’t know something, they often make it up (hallucination)

The Library Analogy:

Think of an LLM as a brilliant librarian who’s memorized millions of books—but only books published before a certain date, and never your company’s private documents. When you ask about your specific policies, they’ll confidently guess based on similar topics they’ve read elsewhere.

RAG is like giving the librarian access to your filing cabinets. Now when you ask a question, they first pull the relevant files, read them, and then answer based on actual information.

RAG solves this by giving the LLM the right information at query time.

Instead of the LLM “remembering” everything, we:

- Store your documents in a vector database

- When a user asks a question, find the relevant documents

- Give those documents to the LLM as context

- Let the LLM generate an answer using that context

The result: accurate, up-to-date, source-backed responses.

🎓 Try This Now: Want to see RAG in action before building your own? Try Perplexity.ai—it’s a search engine that uses RAG to cite sources for every answer. Ask it something current, and notice how it shows you exactly which websites informed its response.

The RAG Pipeline

Click each stage to explore the steps

One-time preparation of your knowledge base

The Three Stages of RAG

Stage 1: Indexing (One-Time Preparation)

This happens offline, before any queries:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#3b82f6', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#2563eb', 'lineColor': '#60a5fa', 'fontSize': '16px' }}}%%

flowchart LR

A["📄 Documents"] --> B["✂️ Chunking"]

B --> C["🔢 Embedding"]

C --> D["💾 Vector DB"]- Load Documents: Ingest all your data—PDFs, web pages, databases, APIs

- Chunk Documents: Split into smaller, meaningful pieces (more on this later)

- Create Embeddings: Convert each chunk to a vector using your embedding model

- Store: Save vectors + metadata in your vector database

Stage 2: Retrieval (Query Time)

When a user asks a question:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#10b981', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#059669', 'lineColor': '#34d399', 'fontSize': '16px' }}}%%

flowchart LR

A["❓ User Question"] --> B["🔢 Embed Query"]

B --> C["🔍 Similarity Search"]

C --> D["📄 Top-K Chunks"]- Embed the Query: Convert the user’s question to a vector (same model as indexing!)

- Similarity Search: Find the most similar chunks in your vector database

- Ranking/Reranking: Optionally re-score results for better relevance

- Return Top-K: Usually 3-10 most relevant chunks

Stage 3: Generation

Finally, we generate the answer:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#8b5cf6', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#7c3aed', 'lineColor': '#a78bfa', 'fontSize': '16px' }}}%%

flowchart LR

A["❓ Query + 📄 Context"] --> B["🤖 LLM"]

B --> C["✅ Grounded Answer"]- Context Assembly: Combine retrieved chunks into a context string

- Prompt Construction: Build a prompt with instructions, context, and question

- LLM Generation: The model generates an answer using the provided context

- Citation: (Optional) Include sources so users can verify

Why RAG Beats Fine-Tuning for Knowledge

You might be wondering: “Why not just fine-tune the LLM on my data?”

Here’s the comparison:

RAG vs Fine-tuning

When to use each approach for knowledge injection

Use RAG for:

Dynamic knowledge, facts, documents, citations needed

Use Fine-tuning for:

Style, behavior, format, specialized vocabulary

Use RAG when you need:

- Dynamic, frequently-changing knowledge

- Factual accuracy with citations

- Instant updates (change a document, answers change immediately)

- Transparency about sources

- Unlimited data volume

Use Fine-tuning when you need:

- Style or behavior changes (“always respond formally”)

- Specialized vocabulary or domain patterns

- Format preferences

- When the knowledge is stable and small

In practice, most enterprise applications use RAG for knowledge and fine-tuning for behavior. For more on fine-tuning, see the Fine-Tuning and Customizing LLMs guide.

How RAG Reduces Hallucinations

LLMs hallucinate because they’re trained to generate plausible text, not true text. When they don’t know something, they predict what sounds correct rather than admitting uncertainty.

Why LLMs Hallucinate (Simplified):

Imagine someone who learned to speak by reading millions of books but never experienced the real world. When asked a question they don’t know, they don’t say “I don’t know”—they construct a plausible-sounding answer based on patterns they’ve seen. That’s exactly what LLMs do.

How RAG Fixes This:

- Grounding responses in actual documents — The LLM can only use information you explicitly provide in the context

- Enabling citations — Users can click through to verify claims by checking the original sources

- Reducing the “knowledge gap” — Instead of relying on potentially outdated training data, it uses your current, verified documents

The Impact is Dramatic:

According to December 2025 research from Makebot.ai, RAG systems achieve:

- 70-90% reduction in hallucinations compared to standard LLMs

- 65-85% higher user trust in AI-generated content

- 40-60% fewer factual corrections needed post-generation

- 95-99% accuracy on queries related to recent events or updated policies

- 50% greater response relevance compared to systems without RAG (McKinsey 2025)

- Up to 48% of conventional AI outputs contain hallucinations before implementing RAG

⚠️ Important caveat: RAG doesn’t eliminate hallucinations completely. If the retrieved context is wrong or irrelevant, the LLM can still generate incorrect answers based on that context. This is why retrieval quality is everything—even more important than the LLM you choose.

Part 4: Chunking Strategies – The Hidden Art

Chunking is where many RAG systems fail. Get it wrong, and your retrieval quality plummets.

Why Chunking Matters

Embedding models have context limits—typically 512-8192 tokens. You can’t just embed entire documents.

But the way you split documents has huge implications:

- Chunks too large: Dilute relevance (good info buried in noise), may exceed model limits

- Chunks too small: Lose context, fragment meaning, may not have enough info to answer

The goal is chunks that are semantically coherent and self-contained.

Chunking Strategies Compared

Chunking Strategies

Quality vs Complexity tradeoffs

💡 Recommendation: Start with paragraph-based chunking (500-1000 tokens) with 10-20% overlap. Move to semantic chunking only if you see retrieval quality issues.

| Strategy | How It Works | Best For |

|---|---|---|

| Fixed-Size | Split every N tokens/characters | Simple documents, uniform content |

| Sentence-Based | Split at sentence boundaries | Articles, essays, narrative content |

| Paragraph-Based | Split at paragraph breaks | Well-structured documents |

| Semantic | Use embeddings to detect topic shifts | Mixed content, complex documents |

| Hierarchical | Parent-child chunks (summary + details) | Technical docs, manuals |

My Recommended Approach

For most use cases, start here:

- Use paragraph-based chunking with 500-1000 tokens per chunk

- Add 10-20% overlap between chunks to prevent breaking mid-thought

- Preserve document structure (don’t split tables, lists, or code blocks)

- Enrich with metadata (source file, section, page number)

Here’s what good metadata looks like:

{

"text": "Our standard return policy allows returns within 30 days of purchase...",

"source": "customer-support-guide.pdf",

"page": 12,

"section": "Returns and Refunds",

"date_indexed": "2025-12-14",

"doc_type": "policy"

}The metadata enables hybrid filtering—you can combine vector similarity with metadata filters like “only search policy documents” or “only recent content.”

Common Chunking Mistakes

| Mistake | Why It’s Bad | Fix |

|---|---|---|

| Splitting mid-sentence | Loses grammatical coherence | Use sentence-aware splitting |

| No overlap | Context lost at boundaries | Add 10-20% overlap |

| Ignoring structure | Tables and code blocks break | Preserve document structure |

| One-size-fits-all | Different content needs different sizes | Tune per content type |

| No metadata | Can’t filter or trace sources | Always enrich with metadata |

Document Type-Specific Chunking Strategies

Different document types require tailored chunking approaches for optimal RAG performance.

PDFs with Tables and Images

Challenge: Standard text splitters break tables and lose visual context.

Solution: Use unstructured.io or multimodal embeddings

from unstructured.partition.pdf import partition_pdf

# Extract content while preserving structure

elements = partition_pdf(

"financial_report.pdf",

strategy="hi_res", # OCR + layout analysis

infer_table_structure=True,

extract_images_in_pdf=True

)

# Separate tables from text

tables = [el for el in elements if el.category == "Table"]

text_chunks = [el for el in elements if el.category == "NarrativeText"]

# For multimodal RAG (December 2025)

from langchain.embeddings import CohereEmbeddings

# Cohere Embed v4 handles text + images

embed_model = CohereEmbeddings(

model="embed-v4.0",

input_type="search_document"

)

# Embed both text and base64-encoded images

for table in tables:

table_image = table.metadata.image_base64

embedding = embed_model.embed_multimodal(

text=table.text,

image=table_image

)Best for: Financial reports, scientific papers, technical documentation

Code Repositories

Challenge: Functions and classes have semantic structure that character-based splitting destroys.

Solution: AST-based chunking + code-specific embeddings

import ast

from langchain.text_splitter import Language, RecursiveCharacterTextSplitter

# Language-aware splitting

python_splitter = RecursiveCharacterTextSplitter.from_language(

language=Language.PYTHON,

chunk_size=1000,

chunk_overlap=100

)

# Preserves function and class boundaries

code_chunks = python_splitter.split_text(python_code)

# Alternative: Manual AST-based chunking

def chunk_by_function(code):

tree = ast.parse(code)

chunks = []

for node in ast.walk(tree):

if isinstance(node, (ast.FunctionDef, ast.ClassDef)):

# Extract entire function/class as one chunk

chunk = ast.get_source_segment(code, node)

chunks.append({

'content': chunk,

'metadata': {

'type': 'function' if isinstance(node, ast.FunctionDef) else 'class',

'name': node.name,

'line_start': node.lineno

}

})

return chunks

# Use code-specific embeddings

from voyageai import Client

voyage = Client(api_key="...")

embeddings = voyage.embed(

code_chunks,

model="voyage-code-3", # Specialized for code

input_type="document"

)Best for: Code search, documentation generation, code review assistants

Structured Data (CSV, JSON, SQL)

Challenge: Traditional RAG loses relational structure and query capabilities.

Solution: Hybrid approach (SQL + Vector)

import pandas as pd

from langchain_experimental.sql import SQLDatabaseChain

# For structured queries: Use SQL

db = SQLDatabase.from_uri("sqlite:///sales.db")

sql_chain = SQLDatabaseChain.from_llm(llm, db)

# For semantic queries: Use embeddings

df = pd.read_csv("products.csv")

# Create rich text descriptions for embedding

def create_searchable_text(row):

return f"""

Product: {row['name']}

Category: {row['category']}

Description: {row['description']}

Price: ${row['price']}

Features: {', '.join(row['features'])}

"""

text_docs = df.apply(create_searchable_text, axis=1)

vectorstore.add_texts(

texts=text_docs.tolist(),

metadatas=df.to_dict('records') # Store full row as metadata

)

# Route queries appropriately

def query_structured_data(question):

if "how many" in question.lower() or "average" in question.lower():

# Aggregation query → SQL

return sql_chain.run(question)

else:

# Semantic query → Vector search

docs = vectorstore.similarity_search(question, k=5)

return qa_chain.run(question=question, docs=docs)Best for: Product catalogs, CRM data, analytics dashboards

Long-Form Content (Books, Research Papers)

Challenge: Single long document needs context from multiple levels (chapter, section, paragraph).

Solution: Hierarchical summarization + parent-child indexing

from langchain.chains.summarize import load_summarize_chain

def hierarchical_indexing(long_document):

# Level 1: Chapters (large chunks)

chapters = split_by_chapters(long_document)

# Level 2: Sections (medium chunks)

sections = []

for chapter in chapters:

chapter_sections = split_by_sections(chapter)

sections.extend(chapter_sections)

# Level 3: Paragraphs (small chunks - actual retrieval units)

paragraphs = []

for section in sections:

section_paragraphs = split_by_paragraphs(section)

paragraphs.extend(section_paragraphs)

# Create summaries at each level

summarize_chain = load_summarize_chain(llm, chain_type="map_reduce")

for chapter in chapters:

chapter['summary'] = summarize_chain.run([chapter['content']])

for section in sections:

section['summary'] = summarize_chain.run([section['content']])

# Index paragraphs with hierarchical metadata

for para in paragraphs:

para['metadata'] = {

'chapter_title': para.parent_chapter.title,

'chapter_summary': para.parent_chapter.summary,

'section_title': para.parent_section.title,

'section_summary': para.parent_section.summary,

}

vectorstore.add_documents(paragraphs)

# Retrieval uses both paragraph content AND parent summaries

def enhanced_search(query):

# Search paragraphs

para_results = vectorstore.similarity_search(query, k=5)

# Also search chapter/section summaries

summary_results = vectorstore.similarity_search(

query,

filter={"type": "summary"},

k=3

)

# Combine results

return para_results + summary_results

return enhanced_searchBest for: Academic papers, legal documents, technical books, novels

Document Type Quick Reference

| Document Type | Recommended Strategy | Tools | Embedding Model |

|---|---|---|---|

| PDFs with visuals | Multimodal or structured extraction | unstructured.io | Cohere Embed v4, Voyage-multimodal |

| Code | AST-based, language-aware | Language splitters | voyage-code-3 |

| Spreadsheets/CSV | Hybrid (SQL + Vector) | Pandas + SQLDatabase | OpenAI, Voyage |

| Long documents | Hierarchical summaries | Recursive summarization | Any high-quality model |

| Web pages | HTML-aware splitting | BeautifulSoup + markdown | OpenAI, Voyage |

| Emails | Thread-aware chunking | Email parsers | OpenAI |

| Chat logs | Conversation-aware | Custom splitters | OpenAI |

💡 Pro Tip: When in doubt, start with

RecursiveCharacterTextSplitterwithchunk_size=800andchunk_overlap=150. This works for 80% of use cases. Optimize only when metrics show you need to.

Part 5: Advanced RAG Patterns (December 2025)

Basic RAG works great for simple queries. But complex questions require more sophisticated approaches.

Advanced RAG Patterns (2025)

Click to explore each pattern

Agentic RAG

AI agents that iteratively reason, retrieve, and tool-use for complex queries

Sources: LangChain Docs • Microsoft GraphRAG • Haystack

Agentic RAG: The Reasoning Retriever

Basic RAG retrieves once and generates. Agentic RAG retrieves iteratively, reasoning about what information is needed.

How it works:

- Agent receives a complex query

- Reasons about what information is needed

- Formulates a search query

- Retrieves and evaluates results

- Decides: Is this sufficient? Or do I need more?

- Repeats until confident, then synthesizes answer

Example: “Compare our Q3 performance to our main competitors”

A basic RAG might just search your documents. Agentic RAG would:

- Search internal data for Q3 numbers

- Realize it needs competitor data

- Fall back to web search for competitor financials

- Synthesize a comparison

Tools: LangGraph, Haystack, CrewAI

For more on AI agents and autonomous systems, see the AI Agents guide.

Graph RAG: Understanding Relationships

Graph RAG combines knowledge graphs with vector retrieval for questions requiring relationship understanding.

When to use it:

- “Who are the suppliers connected to our highest-revenue product?”

- “Show me all employees who worked with Sarah before she left”

- Multi-hop reasoning through entity connections

How it works:

- Build a graph of entities and relationships from your documents

- When querying, traverse the graph and search vectors

- Combine structural knowledge with semantic similarity

Tools: Neo4j + vector index, Microsoft GraphRAG, LlamaIndex KnowledgeGraphIndex

Long RAG: Full Document Context

Instead of small chunks, Long RAG retrieves entire sections or documents—leveraging the massive context windows of modern LLMs.

When to use it:

- Legal contracts (need full clause context)

- Academic papers (arguments span many pages)

- Technical manuals (procedures must be complete)

Requirements: LLMs with 32K-128K+ context (GPT-4, Claude, Gemini)

Trade-off: More tokens = higher cost, but better context preservation.

Hybrid Retrieval: Best of Both Worlds

Vector search captures meaning but can miss exact terms. Keyword search finds exact matches but misses paraphrases.

Hybrid retrieval combines both:

- Vector search for semantic similarity

- BM25 (keyword) search for exact matches

- Reciprocal Rank Fusion (RRF) to combine scores

When it matters:

- Proper nouns (company names, product SKUs)

- Technical terms and acronyms

- Rare words not well-represented in embeddings

Native support: Weaviate, Pinecone, Qdrant (sparse vectors)

Part 5.5: Retrieval Optimization – The Make-or-Break Factor

Retrieval quality is the #1 determinant of RAG performance. A mediocre LLM with excellent retrieval beats a great LLM with poor retrieval every time.

Here are production-proven techniques that separate amateur from professional RAG systems.

1. Query Expansion and Rewriting

The Problem: User queries are often ambiguous, incomplete, or poorly phrased.

The Solution: Automatically enhance queries before retrieval.

Technique A: HyDE (Hypothetical Document Embeddings)

Instead of embedding the user’s question, generate a hypothetical answer and embed that. Hypothetical answers are semantically closer to actual documents than questions are.

from langchain_openai import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

# HyDE implementation

def hyde_retrieval(query, vectorstore, k=5):

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.7)

# Generate hypothetical answer

prompt =ChatPromptTemplate.from_template(

"Write a detailed passage that would answer the following question:\n{query}"

)

hypothetical_answer = llm.invoke(prompt.format(query=query)).content

# Embed the hypothetical answer instead of the query

results = vectorstore.similarity_search(hypothetical_answer, k=k)

return results

# Example usage

query = "How do I reset my password?"

# HyDE generates: "To reset your password, navigate to settings, click 'Forgot Password',

# enter your email address, and follow the instructions in the reset email..."

# This is closer to actual help docs than the question is!

docs = hyde_retrieval(query, vectorstore)When to use: Complex questions where the answer has a predictable structure (how-to, troubleshooting, technical documentation).

Impact: 10-20% improvement in retrieval precision for well-structured domains.

Technique B: Multi-Query RAG

Generate multiple variations of the query, retrieve for each, then combine results.

from langchain.retrievers.multi_query import MultiQueryRetriever

from langchain_openai import ChatOpenAI

# Multi-Query implementation

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.7)

retriever = MultiQueryRetriever.from_llm(

retriever=vectorstore.as_retriever(),

llm=llm

)

# Automatically generates variations like:

# Original: "Impact of AI on healthcare"

# Variation 1: "How is artificial intelligence transforming medical treatment?"

# Variation 2: "What are the benefits of AI in healthcare systems?"

# Variation 3: "AI applications in clinical diagnosis and patient care"

results = retriever.get_relevant_documents("Impact of AI on healthcare")When to use: Ambiguous or broad queries that could be interpreted multiple ways.

Impact: 15-25% improvement in recall (finding all relevant documents).

Technique C: Step-Back Prompting

Generate a broader, more conceptual version of the query to retrieve background context alongside specific answers.

def step_back_retrieval(query, vectorstore, k=10):

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

# Generate step-back query

prompt = f"""Given the specific question: "{query}"

Generate a broader, more general question that would help answer this.

The general question should ask about principles, concepts, or background information.

General question:"""

step_back_query = llm.invoke(prompt).content

# Retrieve for both queries

specific_docs = vectorstore.similarity_search(query, k=k//2)

general_docs = vectorstore.similarity_search(step_back_query, k=k//2)

# Combine and deduplicate

all_docs = specific_docs + general_docs

unique_docs = list({doc.page_content: doc for doc in all_docs}.values())

return unique_docs[:k]

# Example

query = "How to configure SSL in Nginx?"

# Step-back: "What are web server security best practices?"

# Retrieves both specific SSL config AND general security context

docs = step_back_retrieval(query, vectorstore)When to use: Technical questions that benefit from both specific instructions and general context.

Impact: Significantly better answer quality for complex technical queries.

2. Metadata Filtering (Query-Time)

Reduce noise and improve precision by filtering before vector search.

# Filter by document type

results = vectorstore.similarity_search(

"What's our vacation policy?",

filter={"doc_type": "HR_policy", "status": "current"},

k=10

)

# Filter by date range (recent documents only)

from datetime import datetime, timedelta

thirty_days_ago = (datetime.now() - timedelta(days=30)).isoformat()

results = vectorstore.similarity_search(

"Latest AI developments",

filter={"date": {"$gte": thirty_days_ago}},

k=10

)

# Multi-condition filtering

results = vectorstore.similarity_search(

"Engineering team procedures",

filter={

"department": "engineering",

"classification": {"$in": ["public", "internal"]},

"last_updated": {"$gte": "2025-01-01"}

},

k=10

)

# User-specific access control

def user_query(query, user_id, k=10):

return vectorstore.similarity_search(

query,

filter={"accessible_by": {"$in": [user_id, "all_users"]}},

k=k

)Impact: 30-50% latency reduction, significantly better precision, essential for multi-tenant systems.

3. Sentence Window Retrieval

The Problem: Small chunks give better retrieval precision but lack context for generation. Large chunks dilute relevance.

The Solution: Retrieve small chunks for matching, but include surrounding context for generation.

class SentenceWindowRetriever:

def __init__(self, vectorstore, window_size=3):

self.vectorstore = vectorstore

self.window_size = window_size

def retrieve(self, query, k=5):

# Retrieve small chunks (high precision)

small_chunks = self.vectorstore.similarity_search(query, k=k)

expanded_chunks = []

for chunk in small_chunks:

# Get chunk ID and position

chunk_id = chunk.metadata['chunk_id']

position = chunk.metadata['position']

# Fetch surrounding chunks

window_chunks = []

for i in range(-self.window_size, self.window_size + 1):

neighbor_pos = position + i

neighbor = self.fetch_chunk_by_position(chunk_id, neighbor_pos)

if neighbor:

window_chunks.append(neighbor)

# Combine into expanded context

expanded_text = "\n".join([c.page_content for c in window_chunks])

expanded_chunks.append(expanded_text)

return expanded_chunks

# Usage

retriever = SentenceWindowRetriever(vectorstore, window_size=2)

contexts = retriever.retrieve("What is mitochondria?", k=3)Metadata structure needed:

# When indexing, add position metadata

{

"chunk_id": "doc_123",

"position": 5, # This is chunk #5 of the document

"total_chunks": 20

}Impact: Best of both worlds - precise retrieval + rich context for generation.

4. Reranking (Two-Stage Retrieval)

The Pattern:

- Stage 1: Fast vector search retrieves top-50 candidates (high recall)

- Stage 2: Powerful reranker re-scores to find true top-5 (high precision)

from langchain.retrievers import ContextualCompressionRetriever

from langchain_cohere import CohereRerank

# Stage 1: Cast a wide net

base_retriever = vectorstore.as_retriever(search_kwargs={"k": 50})

# Stage 2: Rerank with Cohere

reranker = CohereRerank(

model="rerank-v3.5",

top_n=5,

client=cohere_client

)

compression_retriever = ContextualCompressionRetriever(

base_compressor=reranker,

base_retriever=base_retriever

)

# Retrieve

results = compression_retriever.get_relevant_documents(

"How do neural networks learn?"

)Popular Rerankers (December 2025):

| Model | Provider | Cost | Quality | Speed |

|---|---|---|---|---|

| Cohere Rerank v3.5 | Cohere | $0.002/search | Excellent | Fast |

| BGE Reranker v2.5 | BAAI | Free (OSS) | Very Good | Medium |

| Cross-Encoder | Sentence-BERT | Free (OSS) | Good | Slow |

| GPT-4o-mini | OpenAI | $0.15/1M tokens | Excellent | Medium |

DIY Reranker with GPT-4o-mini:

def llm_rerank(query, documents, top_n=5):

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

# Create ranking prompt

docs_text = "\n\n".join([

f"Document {i+1}:\n{doc.page_content[:500]}"

for i, doc in enumerate(documents)

])

prompt = f"""Rank these documents by relevance to the query: "{query}"

{docs_text}

Return only the document numbers in order of relevance (most relevant first).

Format: 3, 1, 7, 2, 5"""

ranking = llm.invoke(prompt).content

indices = [int(x.strip()) - 1 for x in ranking.split(",")]

return [documents[i] for i in indices[:top_n]]Impact: 10-30% improvement in answer relevance. Worth the cost for quality-critical applications.

5. Parent-Document Retrieval

The Pattern: Index small chunks for precise retrieval, but return the full parent document for generation.

from langchain.retrievers import ParentDocumentRetriever

from langchain.storage import InMemoryStore

from langchain.text_splitter import RecursiveCharacterTextSplitter

# Splitters for parent and child

parent_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)

child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

# Storage for parent documents

store = InMemoryStore()

# Create retriever

retriever = ParentDocumentRetriever(

vectorstore=vectorstore,

docstore=store,

child_splitter=child_splitter,

parent_splitter=parent_splitter,

)

# Index documents

retriever.add_documents(documents)

# Retrieve: searches child chunks, returns parent docs

results = retriever.get_relevant_documents(

"What are the terms of the service agreement?"

)When to use:

- Legal contracts (retrieve full clauses, not fragments)

- Technical documentation (retrieve complete procedures)

- Academic papers (retrieve full sections with context)

Impact: Eliminates context fragmentation while maintaining retrieval precision.

6. Hybrid Retrieval (Semantic + Keyword)

Combine vector similarity with keyword matching for best of both worlds.

from langchain.retrievers import EnsembleRetriever

from langchain_community.retrievers import BM25Retriever

# Vector retriever (semantic)

vector_retriever = vectorstore.as_retriever(search_kwargs={"k": 10})

# Keyword retriever (BM25)

bm25_retriever = BM25Retriever.from_documents(documents)

bm25_retriever.k = 10

# Ensemble with Reciprocal Rank Fusion

ensemble_retriever = EnsembleRetriever(

retrievers=[vector_retriever, bm25_retriever],

weights=[0.5, 0.5] # Equal weight to both

)

results = ensemble_retriever.get_relevant_documents(

"Product SKU ABC-123 installation guide"

)

# Vector search: finds semantic matches

# BM25 search: finds exact "ABC-123" match

# RRF: combines both intelligentlyWhen hybrid matters:

- ✅ Queries with proper nouns (company names, product SKUs, person names)

- ✅ Technical terms and acronyms

- ✅ Rare words not well-represented in embeddings

- ✅ Exact phrase matches

Native Hybrid Search:

# Weaviate native hybrid

from weaviate import Client

client = Client("http://localhost:8080")

results = client.query.get("Document", ["content"]) \

.with_hybrid(

query="Tesla Model 3 battery range",

alpha=0.5 # 0=pure keyword, 1=pure semantic, 0.5=balanced

) \

.with_limit(10) \

.do()Impact: 15-30% better results for queries with specific entities or technical terms.

7. Query Routing

Intelligently route queries to different retrieval strategies based on query characteristics.

from langchain_openai import ChatOpenAI

import json

class QueryRouter:

def __init__(self, vectorstore):

self.vectorstore = vectorstore

self.llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

def classify_query(self, query):

prompt = f"""Classify this query into one of these categories:

1. FACTUAL_LOOKUP - Simple fact retrieval (e.g., "What's our office address?")

2. SEMANTIC_QUESTION - Requires understanding and explanation

3. COMPARISON - Comparing multiple things

4. RECENT_EVENTS - About current or recent information

Query: "{query}"

Return only the category name."""

category = self.llm.invoke(prompt).content.strip()

return category

def route(self, query, k=10):

category = self.classify_query(query)

if category == "FACTUAL_LOOKUP":

# Use keyword search for exact matches

return self.keyword_search(query, k)

elif category == "SEMANTIC_QUESTION":

# Use vector search for semantic understanding

return self.vector_search(query, k)

elif category == "COMPARISON":

# Use multi-query retrieval

return self.multi_query_search(query, k)

elif category == "RECENT_EVENTS":

# Filter by date + vector search

return self.recent_vector_search(query, k, days=30)

else:

# Default: hybrid search

return self.hybrid_search(query, k)

def keyword_search(self, query, k):

# BM25 or exact match

return BM25Retriever.from_documents(documents).get_relevant_documents(query)

def vector_search(self, query, k):

return self.vectorstore.similarity_search(query, k=k)

def recent_vector_search(self, query, k, days=30):

from datetime import datetime, timedelta

cutoff = (datetime.now() - timedelta(days=days)).isoformat()

return self.vectorstore.similarity_search(

query,

k=k,

filter={"date": {"$gte": cutoff}}

)

# ... other search methods

# Usage

router = QueryRouter(vectorstore)

# Automatically routed to optimal strategy

docs = router.route("What is the capital of France?") # → keyword

docs = router.route("Explain quantum computing") # → semantic

docs = router.route("Compare Python vs JavaScript") # → multi-query

docs = router.route("Latest AI developments") # → recent + semanticImpact: 20-40% overall improvement by using the right tool for each query type.

Retrieval Optimization Checklist

Must-Have (Every Production System):

- ✅ Metadata filtering for access control and scope reduction

- ✅ Proper chunk size with overlap (500-1000 tokens, 10-20% overlap)

- ✅ Monitor retrieval metrics (precision@K, recall@K)

Should-Have (Quality-Critical Applications):

- ✅ Reranking for top results

- ✅ Hybrid search (semantic + keyword)

- ✅ Query expansion for ambiguous queries

Advanced (Complex Domains):

- ✅ HyDE for structured knowledge domains

- ✅ Parent-document retrieval for long-form content

- ✅ Query routing for diverse query types

- ✅ Sentence window retrieval for balanceprecision/context

Debugging Retrieval Issues

Low Precision (Irrelevant Results):

- Add reranking

- Increase chunk overlap

- Try better embedding model

- Implement metadata filtering

Low Recall (Missing Relevant Docs):

- Increase k (retrieve more documents)

- Use multi-query expansion

- Try hybrid search

- Check if documents were indexed properly

Slow Retrieval:

- Reduce k (retrieve fewer documents initially)

- Use metadata filters to reduce search space

- Optimize vector DB (HNSW parameters)

- Consider caching frequent queries

Production Tip

Start simple, add complexity only when metrics prove it helps.

The retrieval stack for most successful RAG systems:

- ✅ Good chunking (Part 4)

- ✅ Quality embedding model (Voyage-3.5 or OpenAI)

- ✅ Metadata filtering

- ✅ Reranking

This gets you 90% of the way there. Add advanced techniques only if you need that last 10%.

📊 Retrieval Quality > LLM Quality: Spending $0.13/1M on Voyage-3.5 embeddings returns more value than upgrading from GPT-4o-mini to GPT-4. Optimize retrieval first.

Part 6: Building Your First RAG System

Let’s get practical. Here’s a complete, working RAG system using LangChain and Chroma.

Installation

pip install langchain langchain-openai langchain-chroma pypdfStep 1: Load and Chunk Documents

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

# Load a PDF

loader = PyPDFLoader("company_handbook.pdf")

documents = loader.load()

# Chunk with overlap

splitter = RecursiveCharacterTextSplitter(

chunk_size=1000, # ~750 words per chunk

chunk_overlap=200, # 20% overlap

separators=["\n\n", "\n", " ", ""] # Try paragraph first

)

chunks = splitter.split_documents(documents)

print(f"Created {len(chunks)} chunks from {len(documents)} pages")Step 2: Create Embeddings and Store

from langchain_openai import OpenAIEmbeddings

from langchain_chroma import Chroma

# Initialize embedding model

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

# Create vector store (persisted to disk)

vectorstore = Chroma.from_documents(

documents=chunks,

embedding=embeddings,

persist_directory="./chroma_db"

)

print(f"Stored {len(chunks)} vectors in Chroma")Step 3: Build the RAG Chain

from langchain_openai import ChatOpenAI

from langchain.chains import RetrievalQA

# Initialize LLM

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

# Create retrieval chain

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff", # Stuff all context into prompt

retriever=vectorstore.as_retriever(search_kwargs={"k": 5}),

return_source_documents=True

)Step 4: Query Your Documents

# Ask a question

result = qa_chain.invoke({"query": "What is our vacation policy?"})

# Print the answer

print("Answer:", result["result"])

print("\n--- Sources ---")

for doc in result["source_documents"]:

print(f"• {doc.metadata.get('source', 'Unknown')}, Page {doc.metadata.get('page', 'N/A')}")Complete Script

Here’s everything in one file:

"""

Simple RAG System with LangChain and Chroma

Requires: pip install langchain langchain-openai langchain-chroma pypdf

Set OPENAI_API_KEY environment variable

"""

import os

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_openai import OpenAIEmbeddings, ChatOpenAI

from langchain_chroma import Chroma

from langchain.chains import RetrievalQA

# Configuration

PDF_PATH = "company_handbook.pdf"

PERSIST_DIR = "./chroma_db"

EMBEDDING_MODEL = "text-embedding-3-small"

LLM_MODEL = "gpt-4o-mini"

def create_vectorstore(pdf_path: str, persist_dir: str):

"""Load PDF, chunk it, and create vector store."""

print(f"Loading {pdf_path}...")

loader = PyPDFLoader(pdf_path)

documents = loader.load()

print(f"Chunking {len(documents)} pages...")

splitter = RecursiveCharacterTextSplitter(

chunk_size=1000,

chunk_overlap=200

)

chunks = splitter.split_documents(documents)

print(f"Creating embeddings for {len(chunks)} chunks...")

embeddings = OpenAIEmbeddings(model=EMBEDDING_MODEL)

vectorstore = Chroma.from_documents(

documents=chunks,

embedding=embeddings,

persist_directory=persist_dir

)

print("Done! Vector store created.")

return vectorstore

def load_vectorstore(persist_dir: str):

"""Load existing vector store."""

embeddings = OpenAIEmbeddings(model=EMBEDDING_MODEL)

return Chroma(

persist_directory=persist_dir,

embedding_function=embeddings

)

def main():

# Create or load vector store

if os.path.exists(PERSIST_DIR):

print("Loading existing vector store...")

vectorstore = load_vectorstore(PERSIST_DIR)

else:

vectorstore = create_vectorstore(PDF_PATH, PERSIST_DIR)

# Create QA chain

llm = ChatOpenAI(model=LLM_MODEL, temperature=0)

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

retriever=vectorstore.as_retriever(search_kwargs={"k": 5}),

return_source_documents=True

)

# Interactive query loop

print("\n🤖 RAG System Ready! Ask questions about your document.")

print("Type 'quit' to exit.\n")

while True:

query = input("You: ").strip()

if query.lower() in ['quit', 'exit', 'q']:

break

if not query:

continue

result = qa_chain.invoke({"query": query})

print(f"\nAssistant: {result['result']}")

print("\n📚 Sources:")

for doc in result["source_documents"][:3]:

page = doc.metadata.get('page', 'N/A')

print(f" • Page {page}")

print()

if __name__ == "__main__":

main()Adding Reranking for Better Results

Reranking improves precision by re-scoring retrieved results with a more powerful model:

from langchain.retrievers import ContextualCompressionRetriever

from langchain_cohere import CohereRerank

# Create reranker

reranker = CohereRerank(model="rerank-v3.5")

# Wrap retriever with reranking

compression_retriever = ContextualCompressionRetriever(

base_compressor=reranker,

base_retriever=vectorstore.as_retriever(search_kwargs={"k": 20}) # Retrieve more, rerank to top

)

# Use in chain

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

retriever=compression_retriever, # Uses reranked retriever

return_source_documents=True

)Part 6.5: Choosing Your RAG Framework

The RAG ecosystem has three major frameworks, each with distinct strengths. Choosing the right one can save you weeks of refactoring.

LangChain: The Orchestrator

Best for: Complex workflows, agent-based systems, production applications requiring flexibility

Strengths:

- 50K+ integrations with tools, APIs, and services

- Excellent for multi-step reasoning with LangGraph (2025’s breakthrough for agentic workflows)

- Strong community (200K+ GitHub stars) and enterprise support

- Built-in memory management for conversational applications

- Extensive prompt template library and chain composition

Weaknesses:

- Steeper learning curve for beginners

- Can be overly complex for simple RAG use cases

- Some performance overhead for straightforward retrieval

- Frequent API changes (though stabilizing in 2025)

Code Example:

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_chroma import Chroma

from langchain.chains import RetrievalQA

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

# LangChain RAG pipeline

loader = PyPDFLoader("docs.pdf")

documents = loader.load()

splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

chunks = splitter.split_documents(documents)

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

vectorstore = Chroma.from_documents(chunks, embeddings)

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

retriever=vectorstore.as_retriever(search_kwargs={"k": 5}),

return_source_documents=True

)

result = qa_chain.invoke({"query": "What is the return policy?"})

print(result["result"])When to choose LangChain: Building chatbots, customer support systems, complex agent workflows, or applications requiring extensive tool integrations.

LlamaIndex: The Retrieval Specialist

Best for: Document-heavy applications, semantic search, knowledge bases, research tools

Strengths:

- 150+ data connectors (APIs, databases, cloud storage, Google Drive, Notion, Slack, etc.)

- Optimized specifically for data indexing and retrieval

- Simpler API for standard RAG use cases

- Built-in query engines, routers, and response synthesizers

- Excellent for both structured and unstructured data

- Native support for advanced retrieval (sub-question queries, tree-based retrieval)

Weaknesses:

- Less flexible for non-retrieval tasks (e.g., complex agents, tool calling)

- Smaller ecosystem compared to LangChain

- Agent capabilities improving but still behind LangChain

- Fewer pre-built integrations for non-data sources

Code Example:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

# LlamaIndex RAG pipeline

documents = SimpleDirectoryReader("./docs").load_data()

# Configure LLM and embeddings

llm = OpenAI(model="gpt-4o-mini", temperature=0)

embed_model = OpenAIEmbedding(model="text-embedding-3-small")

# Create index

index = VectorStoreIndex.from_documents(

documents,

llm=llm,

embed_model=embed_model

)

# Query engine

query_engine = index.as_query_engine(similarity_top_k=5)

response = query_engine.query("What is the return policy?")

print(response)

# Access source nodes

for node in response.source_nodes:

print(f"Source: {node.metadata['file_name']}, Score: {node.score:.3f}")When to choose LlamaIndex: Internal documentation systems, semantic search engines, research assistants, or applications focused on efficient document retrieval.

Haystack: The Production Framework

Best for: Enterprise deployments, hybrid search, production pipelines requiring stability

Strengths:

- Production-ready with built-in REST APIs and Docker support

- Excellent hybrid search combining BM25 (keyword) + dense retrieval out of the box

- Easy deployment and horizontal scaling

- Strong focus on evaluation, monitoring, and observability

- Modular pipeline architecture (easy to swap components)

- Backed by Deepset (enterprise support available)

Weaknesses:

- Smaller community than LangChain/LlamaIndex

- Fewer bleeding-edge features (prioritizes stability)

- Documentation can be sparse for advanced use cases

- Less flexibility for rapid prototyping

Code Example:

from haystack import Pipeline

from haystack.components.retrievers import InMemoryBM25Retriever

from haystack.components.generators import OpenAIGenerator

from haystack.components.builders import PromptBuilder

from haystack.document_stores.in_memory import InMemoryDocumentStore

from haystack.components.converters import PyPDFToDocument

# Haystack RAG pipeline

document_store = InMemoryDocumentStore()

# Load documents

converter = PyPDFToDocument()

documents = converter.run(sources=["docs.pdf"])

document_store.write_documents(documents["documents"])

# Build pipeline

template = """

Answer the question based on the context below.

Context:

{% for doc in documents %}

{{ doc.content }}

{% endfor %}

Question: {{ question }}

Answer:

"""

pipe = Pipeline()

pipe.add_component("retriever", InMemoryBM25Retriever(document_store=document_store))

pipe.add_component("prompt_builder", PromptBuilder(template=template))

pipe.add_component("llm", OpenAIGenerator(model="gpt-4o-mini"))

pipe.connect("retriever", "prompt_builder.documents")

pipe.connect("prompt_builder", "llm")

result = pipe.run({

"retriever": {"query": "What is the return policy?"},

"prompt_builder": {"question": "What is the return policy?"}

})

print(result["llm"]["replies"][0])When to choose Haystack: Enterprise search systems, production applications requiring high uptime, or teams prioritizing stability over cutting-edge features.

Hybrid Approach (Best Practice for 2025)

Many production systems combine frameworks to leverage their strengths:

# Use LlamaIndex for optimized retrieval

from llama_index.core import VectorStoreIndex

index = VectorStoreIndex.from_documents(documents)

# Convert to LangChain retriever for orchestration

from llama_index.core.langchain_helpers.adapters import to_lc_retriever

retriever = to_lc_retriever(index.as_retriever())

# Use LangChain for complex chains and agents

from langchain.chains import RetrievalQA

from langchain_openai import ChatOpenAI

qa_chain = RetrievalQA.from_chain_type(

llm=ChatOpenAI(model="gpt-4o-mini"),

retriever=retriever, # LlamaIndex retriever

return_source_documents=True

)Pattern: LlamaIndex for data ingestion and optimized retrieval → LangChain for workflow orchestration and agents → Haystack for production deployment and monitoring

Framework Comparison Table

| Feature | LangChain | LlamaIndex | Haystack |

|---|---|---|---|

| Learning Curve | Moderate-Steep | Easy-Moderate | Moderate |

| Retrieval Performance | Good | Excellent | Excellent |

| Agent Capabilities | Excellent | Moderate | Limited |

| Data Connectors | 50K+ integrations | 150+ native | 50+ |

| Production Ready | Yes | Yes | Excellent |

| Hybrid Search | Via extensions | Via extensions | Native |

| Community Size | Very Large | Large | Medium |

| Enterprise Support | LangSmith (paid) | LlamaCloud (paid) | Deepset (paid) |

| Best Use Case | Complex workflows | Document retrieval | Enterprise search |

| GitHub Stars | 200K+ | 30K+ | 15K+ |

| Backed By | Independent | Run by community | Deepset AI |

Decision Framework

Use this flowchart to choose your framework:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#6366f1', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#4f46e5', 'lineColor': '#818cf8', 'fontSize': '14px' }}}%%

flowchart TD

A[Starting a RAG project?] --> B{What's your primary need?}

B -->|Document retrieval & search| C{How many data sources?}

B -->|Complex agents & workflows| D[LangChain]

B -->|Production stability| E[Haystack]

C -->|Many diverse sources| F[LlamaIndex]

C -->|Simple/few sources| G{Need hybrid search?}

G -->|Yes| H[Haystack or Weaviate]

G -->|No| I[Any framework works]

D --> J[Also consider: LangGraph for advanced agents]

F --> K[Also consider: Combining with LangChain]Quick Start Recommendations

Absolute Beginner → Start with LlamaIndex

Why: Simplest API, great documentation, fastest path to working RAG

Coming from ML/Data Science → Start with Haystack

Why: Familiar pipeline paradigm, excellent for experiments and evaluation

Coming from Software Engineering → Start with LangChain

Why: Flexible architecture, extensive integrations, scales to complex applications

Enterprise Team → Evaluate Haystack or LangChain + LangSmith

Why: Production features, monitoring, enterprise support options

Migration Paths

Started with LlamaIndex, need more flexibility?

# Easy migration - use adapters

from llama_index.core.langchain_helpers.adapters import to_lc_retriever

langchain_retriever = to_lc_retriever(llamaindex_retriever)Started with LangChain, want better retrieval?

# Keep LangChain chains, swap in better retrievers

from langchain_community.retrievers import WeaviateHybridSearchRetriever

retriever = WeaviateHybridSearchRetriever(client=client) # Better than basic vector searchStarted with Haystack, need agents?

# Use Haystack for retrieval, LangChain for agents

haystack_pipeline = build_retrieval_pipeline()

results = haystack_pipeline.run(query)

# Pass to LangChain agent

from langchain.agents import initialize_agent

agent = initialize_agent(..., tools=[haystack_tool])Framework Trends (December 2025)

LangChain continues to dominate for complex applications:

- LangGraph adoption accelerating (agents, multi-step reasoning)

- LangSmith becoming standard for production monitoring

- Focus on stability after years of rapid API changes

LlamaIndex solidifying position as retrieval specialist:

- Best-in-class data connectors (150+ and growing)

- Improved integration with LangChain ecosystem

- LlamaCloud offering managed infrastructure

Haystack focusing on enterprise and production:

- Deepset investing heavily in monitoring and observability

- Strong in regulated industries (finance, healthcare)

- Version 2.0 (2024) brought major architectural improvements

Bottom line: You can’t go wrong with any of these. Choose based on your primary use case, then supplement with other frameworks as needed.

💡 Pro Tip: Start simple. Every framework can build basic RAG. Only add complexity when you need it. Most teams overengineer their first RAG system.

Part 7: Production Considerations

Building a demo is one thing. Running RAG in production is another.

Comprehensive RAG Evaluation (2025 Best Practices)

You can’t improve what you don’t measure. Here’s the complete framework for evaluating RAG systems.

Evaluation Framework: Three Levels

Level 1: Component-Level Evaluation

Test each component independently to isolate issues.

from ragas.metrics import context_precision, context_recall, context_relevancy

from ragas.metrics import faithfulness, answer_relevancy

# Retrieval Quality Metrics

retrieval_metrics = {

"context_precision": context_precision, # Are retrieved docs relevant?

"context_recall": context_recall, # Did we find all relevant docs?

"context_relevancy": context_relevancy # Is context focused on query?

}

# Generation Quality Metrics

generation_metrics = {

"faithfulness": faithfulness, # Is answer grounded in context?

"answer_relevancy": answer_relevancy # Does answer match query intent?

}Level 2: End-to-End Evaluation

Test the complete RAG pipeline:

from ragas import evaluate

from ragas.metrics import (

faithfulness,

answer_relevancy,

context_recall,

context_precision,

)

from datasets import Dataset

# Prepare test dataset

eval_data = {

"question": [

"What is our vacation policy?",

"How do I reset my password?",

# ... more test questions

],

"answer": [

# Generated answers from your RAG system

],

"contexts": [

# Retrieved contexts for each question

[["Vacation policy text chunk 1", "Vacation policy text chunk 2"]],

# ...

],

"ground_truth": [

# Gold standard answers

"Employees receive 15 days of paid vacation annually...",

# ...

]

}

dataset = Dataset.from_dict(eval_data)

# Run evaluation

result = evaluate(

dataset,

metrics=[

context_precision,

context_recall,

faithfulness,

answer_relevancy,

],

)

print(result)

# Output:

# {

# 'context_precision': 0.87,

# 'context_recall': 0.92,

# 'faithfulness': 0.89,

# 'answer_relevancy': 0.94

# }Level 3: Production Monitoring

Continuous evaluation with real user queries:

# Production metrics to track

production_metrics = {

"retrieval": {

"avg_similarity_score": 0.78,

"avg_docs_retrieved": 5.2,

"metadata_filter_hit_rate": 0.65,

"cache_hit_rate": 0.42

},

"generation": {

"avg_response_length": 245,

"citation_coverage": 0.82, # % of answer citing sources

"user_thumbs_up_rate": 0.68,

"user_thumbs_down_rate": 0.12

},

"system": {

"p50_latency_ms": 450,

"p95_latency_ms": 1200,

"p99_latency_ms": 2500,

"tokens_per_query": 1850,

"cost_per_query_usd": 0.0042

}

}Key Metrics Explained

| Metric | What It Measures | Target | How to Improve |

|---|---|---|---|

| Context Precision | % of retrieved docs that are relevant | >0.85 | Add reranking, improve chunking |

| Context Recall | % of relevant docs that were retrieved | >0.90 | Increase k, use multi-query, hybrid search |

| Faithfulness | Answer supported by retrieved docs | >0.90 | Stricter prompts, better context selection |

| Answer Relevancy | Answer addresses the query | >0.85 | Improve retrieval, tune LLM prompts |

| Hallucination Rate | % of responses with fabricated info | <5% | Increase context precision, lower temperature |

| Citation Coverage | % of claims with citations | >70% | Prompt engineering, post-processing |

Building Test Datasets

Option 1: Synthetic Generation (Fast Start)

from langchain.evaluation import QAGenerateChain

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4o", temperature=0.7)

qa_chain = QAGenerateChain.from_llm(llm)

# Generate questions from your documents

synthetic_dataset = []

for doc in documents[:50]: # Sample 50 docs

qa_pairs = qa_chain.run(doc.page_content)

synthetic_dataset.extend(qa_pairs)

print(f"Generated {len(synthetic_dataset)} synthetic Q&A pairs")Pros: Fast, scalable, covers edge cases

Cons: May not reflect real user queries, quality varies

Option 2: Human-Labeled Golden Set (Gold Standard)

# Manually create 50-100 high-quality examples

golden_set = [

{

"question": "What is our vacation policy for new employees?",

"ground_truth": "New employees receive 15 days of vacation after 90 days of employment...",

"category": "HR",

"difficulty": "easy",

"expected_sources": ["employee_handbook.pdf"]

},

{

"question": "How do I configure SSL certificates in production?",

"ground_truth": "Use cert-manager with Let's Encrypt. Deploy to the ingress controller...",

"category": "DevOps",

"difficulty": "hard",

"expected_sources": ["deployment_guide.md", "security_best_practices.md"]

},

# ... 48-98 more examples covering all use cases

]Pros: High quality, representative of actual use

Cons: Labor-intensive, requires domain expertise

Best practice: Create 20-30 manually, generate 50-100 synthetically, combine both.

Option 3: Production Sampling (Real-World)

# Sample real user queries with high engagement

import random

def sample_production_queries(n=100):

"""Sample diverse queries from production logs."""

# Get queries with positive feedback

thumbs_up_queries = db.query("""

SELECT query, answer, retrieved_docs, user_feedback

FROM rag_logs

WHERE user_feedback = 'thumbs_up'

ORDER BY RANDOM()

LIMIT ?

""", n//2)

# Get queries with negative feedback (learn from failures)

thumbs_down_queries = db.query("""

SELECT query, answer, retrieved_docs, user_feedback

FROM rag_logs

WHERE user_feedback = 'thumbs_down'

ORDER BY RANDOM()

LIMIT ?

""", n//2)

return thumbs_up_queries + thumbs_down_queries

production_samples = sample_production_queries(100)

# Human annotators review and create ground truth

for sample in production_samples:

sample['ground_truth'] = annotate(sample['query']) # Manual annotationPros: Real user needs, discovers edge cases

Cons: Requires production data privacy handling

Evaluation Tools Comparison (December 2025)

RAGAS (Recommended for most teams)

from ragas import evaluate

from ragas.metrics import faithfulness, answer_relevancy

# Simple to use, comprehensive metrics

result = evaluate(dataset, metrics=[faithfulness, answer_relevancy])Pros:

- Open-source, free

- Comprehensive metric suite

- LangChain integration

- Active community

Cons:

- Requires LLM API calls for scoring (costs money)

- Can be slow for large datasets

Best for: Startups, mid-size teams, rapid iteration

TruLens (by TruEra)

from trulens_eval import TruChain, Feedback, Tru

from trulens_eval.feedback import Groundedness

tru = Tru()

# Real-time monitoring with dashboards

f_groundedness = Feedback(Groundedness().groundedness_measure).on_output()

tru_recorder = TruChain(

qa_chain,

app_id='my_rag_app',

feedbacks=[f_groundedness]

)

# Auto-tracks every invocation

with tru_recorder as recording:

qa_chain.run("What is our policy?")Pros:

- Excellent visualization dashboards

- Real-time monitoring

- Trace-level debugging

Cons:

- Steeper learning curve

- More setup required

Best for: Production systems needing observability

LangSmith (by LangChain)

import os

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_API_KEY"] = "your-api-key"

# Automatic tracing - zero code changes

qa_chain.run("What is the policy?")

# All traces appear in LangSmith dashboardPros:

- Seamless LangChain integration

- Collaborative debugging

- Dataset management built-in

Cons:

- Paid service ($39/month+)

- Vendor lock-in

Best for: Teams already using LangChain heavily

Maxim AI

Pros:

- Enterprise-focused (compliance, SOC 2)

- Specialized hallucination detection

- Multi-modal support

Cons:

- Enterprise pricing (contact sales)

- Overkill for small teams

Best for: Regulated industries (finance, healthcare, legal)

Arize Phoenix (Open-Source)

import phoenix as px

# Self-hosted, full control

session = px.launch_app()

# Trace LangChain automatically

from phoenix.trace.langchain import LangChainInstrumentor

LangChainInstrumentor().instrument()Pros:

- Free, open-source

- Self-hosted (data privacy)

- Excellent trace visualization

Cons:

- Requires infrastructure setup

- Smaller community

Best for: Teams wanting full control, privacy-sensitive applications

A/B Testing RAG Systems

Test different configurations to find what works best:

import random

from datetime import datetime

class ABTestRAG:

def __init__(self, config_a, config_b):

self.config_a = config_a

self.config_b = config_b

self.results_a = []

self.results_b = []