The Paradox of Too Many Choices

“Which AI should I use?” is harder to answer than it used to be. Recommending ChatGPT for its popularity, Claude for its coding prowess, or Gemini for its ecosystem integration only helps if you understand each tool’s distinct strengths. For an introduction to how these models work, see the What Are Large Language Models guide.

The AI landscape in 2025 resembles a vast marketplace with dozens of competitors, each claiming to have reinvented intelligence. From OpenAI, Anthropic, and Google to Meta, Mistral, xAI, and DeepSeek, the options are overwhelming. For a comprehensive month-by-month breakdown of how we got here, see our AI in 2025: Year in Review.

This confusion often leads to suboptimal workflows. Using the wrong AI for a specific task is akin to using a sports car to haul furniture—technically possible, but inefficient.

This guide provides a structured framework to navigate this ecosystem, covering:

- Who the major players are and what makes each unique

- The actual differences between GPT, Claude, Gemini, and LLaMA

- Open source vs closed source—and when each makes sense

- How to choose the right AI for your specific needs

- How to stay current without drowning in AI news

For guidance on effective prompting techniques, see the Prompt Engineering Fundamentals guide.

Let’s cut through the noise.

- OpenAI valuation: $157B

- ChatGPT weekly users: 200M+

- 2024 AI investment: $100B+

- Gemini context window: 1M+ tokens

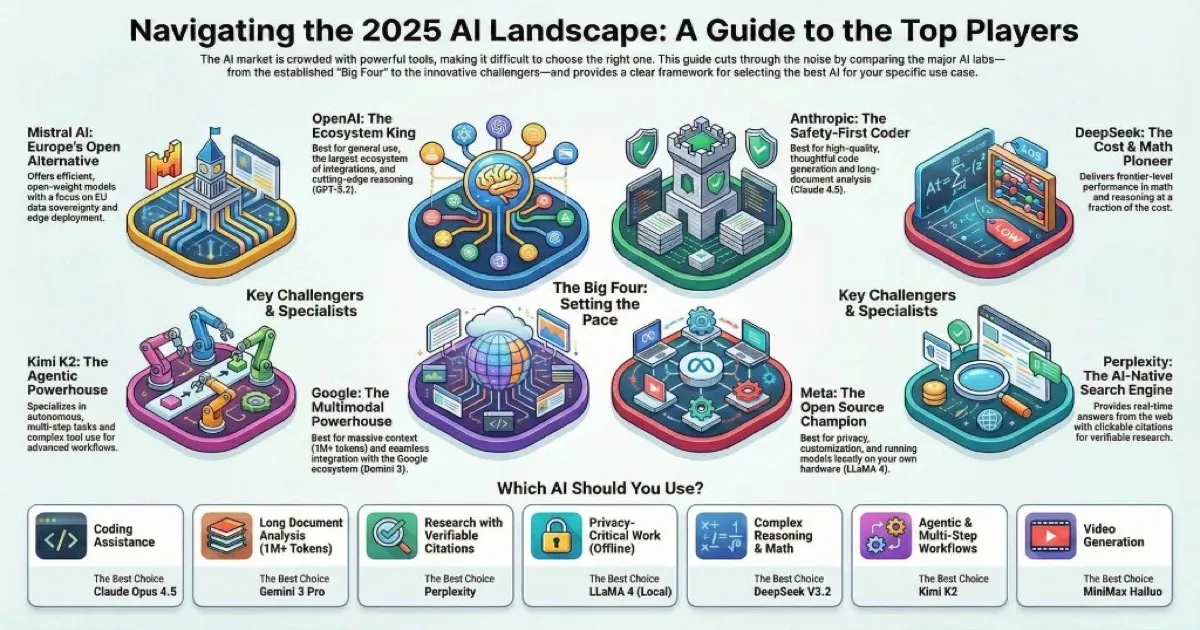

The Big Four: Who Actually Matters

Before we get into the weeds, let’s zoom out. Dozens of companies build AI, but four organizations are genuinely pushing the frontier of what’s possible. I call them the Big Four:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart TB

subgraph Frontier["Frontier AI Labs"]

OpenAI["OpenAI<br/>ChatGPT, GPT-5.2"]

Anthropic["Anthropic<br/>Claude"]

Google["Google DeepMind<br/>Gemini"]

Meta["Meta AI<br/>LLaMA"]

end

These four share something crucial: they’re training the largest, most capable models in the world. They’re investing billions in compute. They’re employing the top AI researchers. They’re setting the pace everyone else follows.

Here’s a quick comparison to orient yourself:

| Company | Primary Product | Key Differentiator | Business Model |

|---|---|---|---|

| OpenAI | ChatGPT, GPT-5.2, o3 | First-mover, largest user base | Subscription + API |

| Anthropic | Claude | Safety-focused, best for coding | Subscription + API |

| Google | Gemini | Multimodal, massive context | Bundled + API |

| Meta | LLaMA | Open source, run locally | Free + ecosystem |

The interesting part? Each has a genuinely different philosophy:

- OpenAI bets on scale and consumer adoption

- Anthropic prioritizes safety and alignment (their founders left OpenAI over these concerns)

- Google focuses on multimodal and ecosystem integration

- Meta believes open source will win in the long run

These philosophical differences translate into real product differences. Let me show you what I mean.

Figure: The Big Four Compared. How the frontier labs stack up across key dimensions.

Sources: Company Websites • Chatbot Arena • AI Index Report

OpenAI: The Pioneer Everyone Knows

If you’ve heard of AI chatbots, you’ve heard of ChatGPT. OpenAI has the kind of brand recognition that makes other companies jealous—“ChatGPT” has basically become a generic term for AI assistants, like “Googling” or “Xeroxing.”

The Company Story

OpenAI started in 2015 as a nonprofit with a mission to ensure AI benefits humanity. Elon Musk was one of the co-founders (he’s since left to start his own venture—more on that later). Over time, they restructured into a “capped-profit” company, took $14+ billion from Microsoft, and became the default AI company in most people’s minds.

The November 2023 drama—when the board briefly fired CEO Sam Altman before a near-universal employee revolt brought him back—highlighted internal tensions about the speed of AI development vs. safety considerations. But the company emerged stronger and more focused.

The GPT-5.2 Family (December 2025)

OpenAI’s latest models come in three flavors:

| Model | Best For | What Makes It Special |

|---|---|---|

| GPT-5.2 Instant | Quick tasks, chat | Fast responses, 256K context |

| GPT-5.2 Thinking | Complex analysis | Extended reasoning time |

| GPT-5.2 Pro | Professional work | Maximum capability |

They also have the o3 reasoning models—these are different from GPT-5.2. Where normal models respond immediately, o3 “thinks” before answering, taking more time to work through complex problems. Think of it like the difference between answering a trivia question immediately vs. working through a math problem step by step.

Beyond Chat: The OpenAI Ecosystem

What many people don’t realize is that OpenAI is far more than ChatGPT:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart TB

OpenAI["OpenAI"] --> Language["Language Models"]

OpenAI --> Multi["Multimodal"]

OpenAI --> Platform["Platforms"]

Language --> GPT["GPT-5.2 Family"]

Language --> O3["o3 Reasoning"]

Multi --> DALLE["DALL-E 3 (Images)"]

Multi --> Whisper["Whisper (Speech)"]

Multi --> Sora["Sora (Video)"]

Platform --> ChatGPT["ChatGPT"]

Platform --> API["Developer API"]

Platform --> Enterprise["Enterprise"]

- DALL-E 3 generates images directly in ChatGPT

- Whisper powers speech recognition

- Sora creates videos from text (still limited access)

- Custom GPTs let you build specialized assistants

When to Choose OpenAI

✅ Choose OpenAI when you want:

- The largest ecosystem and most third-party integrations

- The most mature consumer product

- Cutting-edge reasoning (o3/o3-Pro)

- Voice and vision capabilities

- GPTs/plugins for extended functionality

❌ Consider alternatives when:

- You need the longest context window (Gemini wins here)

- Coding is your primary use case (Claude edges ahead)

- You want to run models locally (LLaMA)

- Privacy is paramount (closed source, data may be used for training)

Anthropic: The Safety-First Challenger

If OpenAI is the mainstream pop star, Anthropic is the critically acclaimed indie band. Smaller audience, but fiercely loyal fans, especially among developers and researchers.

The Company Story

Anthropic was founded in 2021 by Dario and Daniela Amodei, siblings who previously worked at OpenAI. They left because they believed safety research wasn’t being prioritized enough. Their approach, called Constitutional AI, trains models to follow written principles rather than just human ratings.

The company has raised $7.6 billion—$4 billion from Amazon, $2 billion from Google, plus others. Yes, both Amazon and Google invested heavily in an OpenAI competitor. Welcome to the strange economics of AI.

The Claude Family (December 2025)

| Model | Best For | Key Feature |

|---|---|---|

| Claude Opus 4.5 | Coding, complex tasks | Best coding model, period |

| Claude Sonnet 4.5 | Daily use | Balance of speed and intelligence |

| Claude Haiku 4.5 | Quick tasks | Fastest, most affordable |

All Claude models share a 200K token context window—enough to paste in a medium-sized book or an entire codebase.
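To get a rough sense of whether a document fits in a given window, a sketch like the one below can help. It assumes the common rule of thumb of about four characters per English token, which is only an approximation since real tokenizers vary by model and language. The function names and the reply budget are illustrative assumptions; the window sizes are the ones quoted in this guide.

```python
# Rough check of whether a text fits in a model's context window.
# ASSUMPTION: ~4 characters per token, a common rule of thumb for English.
# Real tokenizers differ by model, so treat the result as an estimate.

CHARS_PER_TOKEN = 4  # heuristic, not an exact tokenizer

# Context window sizes as quoted in this guide (in tokens).
CONTEXT_WINDOWS = {
    "claude": 200_000,
    "gpt-5.2-instant": 256_000,
    "gemini": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate token count from character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits(text: str, model: str, reserve_for_reply: int = 4_000) -> bool:
    """Return True if the text plus a reply budget fits in the window."""
    return estimate_tokens(text) + reserve_for_reply <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    book = "x" * 600_000  # stand-in for a ~600K-character manuscript
    print(estimate_tokens(book))  # 150000
    print(fits(book, "claude"))   # True
```

For anything close to the limit, count tokens with the provider's own tooling rather than relying on this estimate.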

What Makes Claude Different

Here’s what makes Claude special, based on my daily use:

The coding is exceptional. I’ve tried every major AI for coding, and Claude Opus 4.5 consistently produces the cleanest, most thoughtful code. It catches edge cases other models miss. It explains its reasoning. When I’m debugging something tricky, Claude is my first stop.

The responses feel more… thoughtful. This is subjective, but Claude’s outputs feel more nuanced. It acknowledges complexity instead of giving oversimplified answers. It expresses uncertainty when appropriate.

Artifacts are game-changing. Claude can create interactive artifacts—code you can run, documents you can edit, visualizations you can interact with—right in the chat. It sounds minor until you use it.

Computer Use. Claude can actually control desktop applications and browsers. This is still early, but it’s a glimpse of where agents are heading. For more on autonomous AI, see the AI Agents guide.

When to Choose Claude

✅ Choose Claude when you want:

- The best coding assistant (Opus 4.5)

- Long document analysis (200K context)

- Nuanced, thoughtful responses

- Safety-conscious outputs

- Agentic tasks (computer use, multi-step workflows)

❌ Consider alternatives when:

- You need image generation (Claude can only view images, not create them)

- You want the largest ecosystem (OpenAI has more integrations)

- Ultra-long context is crucial (Gemini’s 1M+ tokens)

- You need real-time web search built-in

Google DeepMind: The Multimodal Powerhouse

Google’s AI story is fascinating because they’ve always been an AI company—they just got outmaneuvered on the product front.

The Company Story

DeepMind, founded in 2010, was acquired by Google in 2014 for $500 million and produced some of the most iconic AI achievements: AlphaGo (beating the world Go champion), AlphaFold (predicting protein structures—Nobel-worthy work), and more.

Google Brain was Google’s internal AI research division. In 2023, the two groups merged to form Google DeepMind.

Here’s the irony: Google invented the Transformer architecture that powers all modern LLMs. The famous “Attention Is All You Need” paper came from Google researchers. Yet somehow OpenAI shipped ChatGPT first and captured the public imagination.

Google’s been playing catch-up on products while maintaining a research lead.

The Gemini Family (December 2025)

| Model | Best For | Context Window |

|---|---|---|

| Gemini 3 Pro | Everything, research | 1M+ tokens |

| Gemini 2.5 Pro | Stable daily use | 1M+ tokens |

| Gemini 2.5 Flash | Fast tasks | 1M+ tokens |

| Gemma 2 | Open source | 8K-128K |

Google’s Unfair Advantages

The 1M+ context window. This is genuinely game-changing. While others process books, Gemini can process entire book series. I’ve uploaded massive codebases and documentation sets that would choke other models.

Native multimodal. Gemini was built from the ground up to handle text, images, audio, and video together. It’s not bolted on—it’s fundamental. You can upload a video and ask questions about specific moments.

Deep Research Agent (December 2025). This new capability is remarkable. You can give it a research question, and it will autonomously browse the web, synthesize information, and produce a comprehensive report. It’s like having a research assistant.

Google integration. If you live in Google’s ecosystem (Gmail, Drive, Docs, Search), Gemini integrates seamlessly. Ask it about your emails and calendar. Have it summarize Google Docs. This integration is its superpower for Google users.

When to Choose Gemini

✅ Choose Gemini when you want:

- The largest context window (1M+ tokens)

- True multimodal capabilities (video, audio)

- Google ecosystem integration

- Autonomous research capabilities

- Real-time information access

❌ Consider alternatives when:

- You want the most polished chat experience

- Coding is your primary focus

- You need to run models locally

- You’re privacy-conscious about Google’s data practices

Meta: The Open Source Champion

Meta (formerly Facebook) took a different path than everyone else. Instead of building a closed product, they released their models for anyone to use.

The Company Story

Why would a trillion-dollar company give away its AI models for free? Meta’s bet is strategic:

- Commoditize the complement. If powerful AI is free, Meta’s platforms (Instagram, WhatsApp, Facebook) become more valuable.

- Attract talent. Researchers want to work on open projects.

- Ecosystem lock-in. Apps built on LLaMA stay in Meta’s orbit.

- Can’t beat them alone. Meta can’t outspend Google on AI. But they can enable thousands of others to build on LLaMA.

Led by Yann LeCun, one of the “Godfathers of AI” and a Turing Award winner, Meta AI has become a genuine force.

The LLaMA Family (December 2025)

| Model | Parameters | Best For |

|---|---|---|

| LLaMA 4 Scout | ~100B (MoE) | Efficient, edge deployment |

| LLaMA 4 Maverick | ~400B (MoE) | Maximum open-source power |

| LLaMA 3.3 | 70B | Optimized inference |

| LLaMA 3.1 | 8B-405B | Range of sizes |

MoE = Mixture of Experts, an efficient architecture that activates only part of the model for each query
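To make "activates only part of the model" concrete, here is a toy sketch of top-k expert routing in plain Python. It is a generic illustration of the technique, not Meta's actual router: the experts are stand-in functions, the gate scores are made up, and all names are hypothetical.

```python
# Toy Mixture-of-Experts routing: each input goes to only the top-k experts.
# ASSUMPTION: a generic top-k gating sketch, not LLaMA 4's actual router.
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    weights = route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


if __name__ == "__main__":
    # Four hypothetical "experts"; real ones are neural network layers.
    experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
    scores = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token
    print(sorted(route(scores)))    # [1, 3]: only two of four experts run
    print(moe_forward(3.0, experts, scores))
```

The unselected experts are never called, which is where the compute savings come from.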

The LLaMA Ecosystem

What makes LLaMA special is the ecosystem that’s grown around it:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart LR

A["Meta Releases LLaMA"] --> B["Hugging Face Hosts"]

B --> C["Community Fine-tunes"]

C --> D["Thousands of Variants"]

D --> E["Users Run Locally"]

E --> F["Feedback to Meta"]

F --> A

- Hugging Face hosts the models for easy download

- Ollama lets you run models with one command

- LM Studio provides a beautiful GUI

- Thousands of community fine-tunes for specific purposes

- Services like Together.ai and Fireworks offer cheap hosted inference
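Once a model is running locally under Ollama, you can also call it from code. The sketch below uses only the Python standard library and assumes Ollama is serving on its default local port with a model tagged `llama3.1` already pulled; the helper names are hypothetical and the model tag is a placeholder for whichever one you use.

```python
# Minimal call to a locally running Ollama server using only the standard library.
# ASSUMPTIONS: Ollama is installed and serving on its default port (11434), and a
# model tagged "llama3.1" has already been pulled; swap in whatever tag you use.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask_local("Explain mixture of experts in one sentence.")` returns the model's reply as a string, and the prompt never leaves your machine.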

When to Choose LLaMA

✅ Choose LLaMA when you want:

- Complete privacy (data never leaves your machine)

- Zero API costs (just your own compute)

- Full customization and fine-tuning

- Offline access

- To avoid vendor lock-in

❌ Consider alternatives when:

- You want plug-and-play simplicity

- You don’t have sufficient hardware

- You need cutting-edge capabilities (closed models are usually ahead)

- You want official enterprise support

The Challengers: A Deep Dive into Rising AI Powers

The AI landscape in December 2025 extends far beyond the Big Four. A constellation of ambitious challengers is pushing boundaries, often with unique approaches that complement or challenge the incumbents. Let’s explore each one in detail.

Mistral AI (Europe’s Open Source Champion)

Founded in Paris in 2023 by ex-DeepMind and Meta researchers (including Arthur Mensch, who worked on Chinchilla), Mistral has become Europe’s answer to American AI dominance. Their philosophy: smaller, more efficient models that punch above their weight.

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart LR

Mistral["Mistral AI"] --> Large3["Mistral Large 3<br/>675B total / 41B active"]

Mistral --> Ministral["Ministral 3<br/>3B, 8B, 14B"]

Mistral --> Devstral["Devstral 2<br/>Code specialists"]

Mistral --> Pixtral["Pixtral Large<br/>Multimodal"]

The Mistral 3 Family (December 2025)

| Model | Parameters | Context | Best For |

|---|---|---|---|

| Mistral Large 3 | 675B (41B active) | 256K | Frontier tasks, multimodal |

| Ministral 3 | 3B, 8B, 14B | 128K | Edge devices, mobile, offline |

| Devstral 2 | 123B | 256K | Software engineering agents |

| Pixtral Large | 124B | 128K | Vision + text reasoning |

| Codestral 25.01 | 22B | 32K | Code generation (80+ languages) |

Why Mistral Matters:

- European AI sovereignty - Building strategic alternatives to US/China dominance

- Apache 2.0 licensing - Fully open weights, commercial use allowed

- Efficiency-first - Mixture-of-Experts means only 41B of 675B parameters activate per query

- Edge deployment - Ministral runs on laptops, phones, even robots

When to Choose Mistral:

- You need EU data sovereignty compliance

- Edge deployment is critical

- You want open-weight models with commercial licensing

- Efficiency matters more than absolute frontier performance

xAI (Elon Musk’s Moonshot)

Founded in 2023, xAI has rapidly become a serious contender. With access to X (Twitter) data, the Colossus supercomputer (100K+ GPUs), and Elon Musk’s capital, they’re moving at a pace that’s alarming competitors.

The Grok Evolution

| Version | Released | Key Feature |

|---|---|---|

| Grok 1 | Nov 2023 | Initial launch, X integration |

| Grok 2 | Aug 2024 | Vision, image generation (Aurora) |

| Grok 3 | Feb 2025 | Scientific reasoning |

| Grok 4.1 | Nov 2025 | Multimodal, voice, DeepSearch |

| Grok 4.20 | Dec 2025 | Latest generation (announced) |

What Makes Grok Different:

- Real-time X data - Access to live social media conversations

- “Less filtered” - Fewer content restrictions than competitors

- Voice and vision - Native multimodal processing

- DeepSearch - Autonomous web research capability

- Colossus - One of the world’s largest GPU clusters powers training

December 2025 Updates:

- Grok integration in Tesla vehicles (“Grok with Navigation Commands”)

- Partnership with El Salvador for AI-powered education (1M+ students)

- Grok 2 announced to be open-sourced

When to Choose Grok:

- You use X Premium/Premium+

- You want real-time social data insights

- You prefer less restrictive content policies

- You’re in the Tesla ecosystem

DeepSeek (China’s Cost-Efficiency Pioneer)

DeepSeek has been the biggest surprise of 2025. This Chinese company achieved GPT-5 level performance at a fraction of the training cost, sending shockwaves through the industry. Their message: frontier AI doesn’t require Big Tech budgets.

DeepSeek V3.2 (December 2025)

| Capability | Performance |

|---|---|

| Reasoning | GPT-5 level for everyday tasks |

| Mathematics | 118/120 on Putnam Competition (beats top humans) |

| IMO 2025 | Gold medal results |

| Tool use | 1,800+ environments, 85,000+ complex instructions |

| Inference costs | 50-75% lower than competitors |

Key Innovations:

- DeepSeek Sparse Attention (DSA) - Dramatically reduces inference costs

- Mixture of Experts (MoE) - 685B total parameters, efficient activation

- Self-verifiable reasoning - Mathematical proofs with built-in verification

- Thinking in tool-use - First to integrate reasoning directly into tool utilization

The DeepSeek Family:

- DeepSeek V3.2 - Flagship, all platforms (web, mobile, API)

- DeepSeek V3.2-Speciale - Maximum reasoning (API-only, limited availability)

- DeepSeek-Coder - Specialized programming assistant

- DeepSeek-VL2 - Vision-language multimodal model

- DeepSeekMath-V2 - Mathematical reasoning specialist

Why DeepSeek Matters:

- Cost democratization - Proves frontier AI can be affordable

- Open weights - Available for download and local running

- Mathematical breakthrough - Superhuman performance on competition math

- Long context - Handles large documents efficiently

When to Choose DeepSeek:

- Cost is a primary concern

- Mathematical/scientific reasoning is your use case

- You want open-weight models

- You need efficient inference

Alibaba Qwen (China’s Comprehensive Ecosystem)

Alibaba Cloud’s Qwen family represents one of the most comprehensive open-source AI ecosystems available. By December 2025, they’ve progressed to Qwen3, trained on 36 trillion tokens across 119 languages.

The Qwen Ecosystem (December 2025)

| Model Family | Focus | Context |

|---|---|---|

| Qwen3-Max | Flagship API model | 1M tokens |

| Qwen3-Omni | Native multimodal (text, image, audio, video) | 256K |

| QwQ-32B | Open-source reasoning | 131K |

| Qwen 2.5-Coder | Code (92 languages) | 128K |

| Qwen 2.5-VL | Vision-language | 128K |

| Qwen 2.5-Math | Mathematical reasoning | 128K |

Key Achievements:

- 36 trillion tokens of training data for Qwen3 (up from roughly 18 trillion for Qwen 2.5)

- 119 languages in Qwen3 (up from 29+ in Qwen 2.5), with strong multilingual performance

- Competitive benchmarks - Matches or exceeds GPT-4o on many tasks

- True open source - Fully downloadable, commercial-friendly

QwQ-32B (The Reasoning Model):

- Compact 32B parameters for reasoning tasks

- Multi-step reasoning with self-reflection

- Excels at complex math and programming

- Open-source alternative to o1/o3

When to Choose Qwen:

- Open-source with commercial licensing is essential

- Multilingual support (especially Chinese) is needed

- You want a complete ecosystem (code, math, vision, reasoning)

- Cost-effective alternative to Western closed models

Moonshot AI Kimi K2 (The Agentic Powerhouse)

Moonshot AI, the Chinese company that pioneered ultra-long context windows, released Kimi K2 as an open-source rival to frontier models. Its specialty? Agentic AI that can execute complex multi-step tasks autonomously.

Kimi K2 Specifications

| Spec | Value |

|---|---|

| Total Parameters | 1 trillion |

| Active Parameters | 32B per token |

| Context Window | 256K tokens |

| Architecture | Mixture of Experts |

| Training Data | 15.5 trillion tokens |

| License | Modified MIT (open source) |

What Makes Kimi K2 Special:

- Agentic mastery - Sustains 200-300 sequential tool calls without human intervention

- State-of-the-art coding - Competes with GPT-4.1 and Claude Sonnet 4.5

- Thinking variant - Kimi K2 Thinking adds extended reasoning

- INT4 quantization - Efficient inference, lower GPU requirements
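A quick back-of-the-envelope calculation shows why quantization matters for a model this size. The sketch below assumes weight memory is roughly parameter count times bits per parameter, and it ignores activations, the KV cache, and quantization overhead, so the real numbers will be somewhat higher. The function names are hypothetical; the parameter counts come from the specification table above.

```python
# Back-of-the-envelope memory needed just to hold model weights.
# ASSUMPTION: memory ≈ parameter count × bits per parameter / 8, ignoring
# activations, KV cache, and quantization overhead such as scale factors.

def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of parameters used per token in a Mixture-of-Experts model."""
    return active_params / total_params


if __name__ == "__main__":
    total, active = 1e12, 32e9  # Kimi K2 figures from the table above
    print(weight_memory_gb(total, 16))  # 2000.0 (GB at 16-bit)
    print(weight_memory_gb(total, 4))   # 500.0 (GB at INT4)
    print(f"{active_fraction(active, total):.1%}")  # 3.2%
```

Note that only about 3% of the parameters are used per token, but all experts typically still need to be held in memory, so the storage requirement is driven by the total parameter count.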

Variants:

- Kimi-K2-Base - Foundation model for fine-tuning

- Kimi-K2-Instruct - General-purpose chat and agents

- Kimi K2 Thinking - Enhanced reasoning (November 2025)

When to Choose Kimi:

- Agentic AI is your primary use case

- You need autonomous multi-step task execution

- Open-source with frontier performance is required

- Coding and tool-use are critical

MiniMax (The Multimodal Creative Studio)

MiniMax, one of China’s top AI unicorns, has carved a unique niche with Hailuo—their multimodal platform that generates text, images, video, and audio. They’re leaders in AI-generated video.

MiniMax Models (December 2025)

| Product | Capability |

|---|---|

| MiniMax-M2 | Open-source LLM for coding and reasoning |

| Hailuo 2.3 | Text-to-video with enhanced realism |

| Hailuo Video-01 | Flagship video generation |

| MiniMax Voice | Text-to-speech synthesis |

Hailuo Video Capabilities:

- Text-to-video - Generate videos from prompts

- Camera control - Pan, zoom, tracking shots via natural language

- Style variety - Anime, illustration, realistic, game CG

- Audio integration - Videos with ambient sound, dialogue, and music

- 720p @ 25fps - Up to 6+ second clips

MiniMax-M2 (LLM):

- Open-source large language model

- Optimized for coding, reasoning, and agentic workflows

- Competitive with Western models

When to Choose MiniMax:

- Video generation is your primary need

- Multimodal creative content is the goal

- You want AI-generated video with audio

- Chinese market applications

Amazon Nova 2 (The Enterprise Contender)

At re:Invent 2025, Amazon unveiled Nova 2—their most ambitious AI play yet. Integrated into AWS Bedrock, these models target enterprise customers with industry-leading price-performance.

The Nova 2 Family

| Model | Best For | Key Feature |

|---|---|---|

| Nova 2 Lite | Everyday tasks, chatbots | Cost-effective reasoning, 1M context |

| Nova 2 Pro | Complex multi-step tasks | Most intelligent, teacher for distillation |

| Nova 2 Sonic | Voice AI | Speech-to-speech, real-time conversation |

| Nova Multimodal Embedding | Search, RAG | Text, image, video, audio embeddings |

| Nova Act | Browser automation | 90% reliability on UI workflows |

| Nova Forge | Custom models | Train variants with your data |

Key Advantages:

- 75% cost reduction compared to comparable models

- 1M token context on Lite and Sonic

- Native Bedrock integration - Load balancing, scaling, model routing

- AgentCore - Policy controls, evaluations, episodic memory for agents

When to Choose Amazon Nova:

- You’re already on AWS

- Enterprise compliance and security are required

- Cost-performance ratio is critical

- Agentic AI with enterprise controls is needed

Perplexity (AI-Native Search)

Perplexity isn’t building foundation models—they’re building what search should be in the AI age. Every answer comes with citations. Every source is clickable. It’s the anti-hallucination approach.

What Makes Perplexity Different:

- Citation-first - Every claim has a source

- Real-time - Searches the live web

- Conversational - Follow-up questions refine results

- Pro Search - Deeper research with multiple source synthesis

When to Choose Perplexity:

- Research with verifiable sources

- Current events and real-time information

- Academic or professional research

- When factual accuracy is paramount

Hugging Face (The GitHub of AI)

Not a model creator, but the infrastructure that enables everything else. Hugging Face hosts 700K+ models, millions of datasets, and provides the tools that power open-source AI development.

What They Provide:

- Model Hub - Download any open model with one click

- Transformers - The de facto library for working with LLMs

- Spaces - Host AI demos and applications

- Inference API - Serverless model serving

- Enterprise Hub - Private model hosting for companies

Why They Matter: The entire open AI ecosystem runs on Hugging Face infrastructure.

The Challenger Comparison

| Company | Flagship Model | Best For | Open Source? | Context |

|---|---|---|---|---|

| Mistral | Large 3 | EU sovereignty, efficiency | ✅ Apache 2.0 | 256K |

| xAI | Grok 4.1 | X integration, real-time | ❌ (Grok 2 soon) | 128K |

| DeepSeek | V3.2 | Math, cost-efficiency | ✅ Open weights | 128K |

| Alibaba | Qwen3-Max | Comprehensive ecosystem | ✅ (most models) | 1M |

| Moonshot | Kimi K2 | Agentic AI, tool use | ✅ Modified MIT | 256K |

| MiniMax | Hailuo 2.3 | Video generation | Partial | N/A |

| Amazon | Nova 2 Pro | Enterprise, AWS | ❌ | 1M |

| Perplexity | N/A | Search with sources | ❌ | N/A |

Open Source vs Closed Source: The Great Debate

One of the most important decisions in AI is whether to use open or closed models. Let me break down the tradeoffs.

Understanding the Terms

Closed Source (Proprietary)

- Model weights are secret

- Access only via API

- Examples: GPT-5.2, Claude, Gemini Pro

Open Weights

- Model parameters downloadable

- Can run locally

- Examples: LLaMA 4, Mistral, Qwen

Fully Open Source

- Code, data, AND weights available

- Rare in frontier AI

- Examples: OLMo, BLOOM

The Case for Closed Source

| Advantage | Why It Matters |

|---|---|

| Cutting-edge capability | Usually 6-12 months ahead |

| Polished experience | Better UX, continuous updates |

| Enterprise support | SLAs, security certifications |

| Safety controls | Company manages guardrails |

| No hardware needed | Someone else runs the GPUs |

The Case for Open Source

| Advantage | Why It Matters |

|---|---|

| Privacy | Data never leaves your machine |

| Cost | No per-token pricing |

| Customization | Fine-tune for your use case |

| Transparency | Inspect model behavior |

| Offline access | Works without internet |

| No vendor lock-in | You control your destiny |

The Decision Framework

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart TB

A["Choose AI Model"] --> B{Privacy Critical?}

B -->|Yes| C["Open Source / Local"]

B -->|No| D{Need Latest Features?}

D -->|Yes| E["Closed Source API"]

D -->|No| F{High Volume?}

F -->|Yes| C

F -->|No| G["Either Works"]

My recommendation: Use a mix. I use Claude for coding, ChatGPT for general tasks, Perplexity for research, and local LLaMA for anything sensitive. The “right” choice depends entirely on what you’re doing.
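The decision flowchart in this section can also be read as a small function. This is a minimal sketch that mirrors the branches exactly as drawn; the function and parameter names are hypothetical, and reducing each question to a yes/no flag is a simplification.

```python
# The decision flowchart above, written as a plain function.
# ASSUMPTION: the three yes/no inputs are simplifications for illustration;
# real decisions usually weigh several factors at once.

def choose_model_type(privacy_critical: bool,
                      need_latest_features: bool,
                      high_volume: bool) -> str:
    """Walk the same branches as the flowchart, top to bottom."""
    if privacy_critical:
        return "Open Source / Local"
    if need_latest_features:
        return "Closed Source API"
    if high_volume:
        return "Open Source / Local"
    return "Either Works"


if __name__ == "__main__":
    print(choose_model_type(False, False, True))  # Open Source / Local
    print(choose_model_type(False, True, True))   # Closed Source API
```

The order of the checks matters: privacy is tested first, so a privacy-critical workload goes local even if it would also benefit from the latest features.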

The Investment Landscape: Follow the Money

Understanding who’s funding AI tells you where the industry is heading. The capital flowing into AI in 2025 is unprecedented.

The Numbers Are Staggering

- 2024/2025 AI Investment: $150+ billion globally

- Training GPT-5: $500+ million estimated

- Microsoft’s OpenAI stake: $14+ billion

- xAI’s Colossus cluster: $10+ billion in hardware (100K+ H100 GPUs)

- Nvidia’s market cap: Surpassed $3.5 trillion

- Amazon’s Anthropic investment: $4 billion

- Google’s combined AI spend: $75+ billion annually

Who’s Betting on Whom

| Investor | Key Investments | Strategy |

|---|---|---|

| Microsoft | OpenAI ($14B+) | Exclusive Azure integration, Copilot ecosystem |

| Amazon | Anthropic ($4B), AWS Bedrock | Nova models + third-party hosting |

| Google | Anthropic ($2B), DeepMind, internal | Gemini + hedge on Anthropic |

| Nvidia | Many AI startups, inference | Infrastructure + ecosystem |

| Alibaba | Qwen (internal) | Open ecosystem strategy |

| Tencent | Various Chinese AI | Ecosystem integration |

Company Valuations (December 2025)

| Company | Valuation | Key Investor | Country |

|---|---|---|---|

| OpenAI | $157 billion | Microsoft | USA |

| Anthropic | $60 billion | Amazon/Google | USA |

| xAI | $50+ billion | Private/Musk | USA |

| Moonshot AI | $3+ billion | Various | China |

| Mistral | $6 billion | Various | France |

| Hugging Face | $4.5 billion | Various | USA/France |

| MiniMax | $2.5+ billion | Alibaba, Tencent | China |

| DeepSeek | Private | High-Flyer (hedge fund) | China |

| Perplexity | $3 billion | Various | USA |

Figure: AI Company Valuations (December 2025), billions of dollars raised by frontier AI labs.

- 2024 AI investment: $100B+

- Nvidia market cap: $3T+

- Annual infrastructure spend: $50B+

Sources: TechCrunch • Reuters • CNBC

The Compute Arms Race

Beyond funding, there’s a hardware war underway:

| Company | GPU Cluster | Status |

|---|---|---|

| xAI (Colossus) | 100K+ H100s | Operational |

| Microsoft/OpenAI | 100K+ H100s | Azure infrastructure |

| Meta | 600K+ H100s (planned) | By 2025 |

| Google | TPU v5p clusters | Custom silicon |

| Amazon | Trainium3 UltraServers | AWS infrastructure |

What This Means for You

- Well-funded companies sustain free tiers longer—they can absorb losses to build market share

- Price competition is intensifying—DeepSeek proves efficiency can beat brute-force spending

- Infrastructure investments mean better models coming—the hundreds of billions being spent will produce results

- Chinese challengers are real—Qwen, DeepSeek, and Kimi compete at frontier levels

- Open source is thriving—Meta, Mistral, and Alibaba are funding alternatives to closed models

How to Choose the Right AI for Your Needs

After all that background, here’s the practical section you’ve been waiting for: which AI should you actually use?

The Use Case Matrix

| What You Need | Best Choice | Why |

|---|---|---|

| General chat, daily use | ChatGPT, Claude, Gemini | Polished consumer experience |

| Coding assistance | Claude Opus 4.5, Kimi K2 | Best code quality, agentic coding |

| Long document analysis | Gemini (1M+), Qwen3 (1M) | Massive context windows |

| Research with citations | Perplexity | Built-in source attribution |

| Image generation | ChatGPT (DALL-E 3), Grok (Aurora) | Quality and ease of use |

| Video generation | MiniMax Hailuo, Runway | Text-to-video capabilities |

| Privacy-critical work | LLaMA 4, Kimi K2 (local) | Data never leaves your machine |

| Complex reasoning | o3-Pro, DeepSeek V3.2-Speciale | Dedicated reasoning models |

| Mathematical/scientific | DeepSeek V3.2, QwQ-32B | Superhuman math performance |

| Cost-conscious (high volume) | DeepSeek, open source | 50-75% lower inference costs |

| EU data sovereignty | Mistral Large 3 | European company, Apache 2.0 |

| Enterprise/AWS | Amazon Nova 2 | 75% lower costs, Bedrock integration |

| Agentic workflows | Kimi K2, Claude, Nova 2 | Multi-step autonomous tasks |

| Real-time social data | Grok | X (Twitter) integration |
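If you route requests programmatically, the matrix above reduces to a lookup table. The sketch below is one hedged way to encode it: the task keys are hypothetical labels, each entry keeps only the first option listed in the matrix, and the default is an assumption based on the general-use row.

```python
# A tiny task router based on the matrix above.
# ASSUMPTION: the task keys are hypothetical labels, and each entry keeps only
# the first option listed in the matrix; adjust to your own preferences.

FIRST_CHOICE = {
    "coding": "Claude Opus 4.5",
    "long_documents": "Gemini",
    "research": "Perplexity",
    "privacy": "LLaMA 4 (local)",
    "math": "DeepSeek V3.2",
    "agents": "Kimi K2",
}

DEFAULT = "ChatGPT"  # the matrix lists it first for general daily use


def pick_tool(task: str) -> str:
    """Return the first-choice tool for a task, or the general default."""
    return FIRST_CHOICE.get(task, DEFAULT)


if __name__ == "__main__":
    print(pick_tool("coding"))    # Claude Opus 4.5
    print(pick_tool("birthday"))  # ChatGPT
```

A plain dictionary keeps the mapping easy to update as models change, which in this field is often.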

Pricing Comparison (December 2025)

| Service | Free Tier | Pro Tier | Best For |
|---|---|---|---|
| ChatGPT | Limited GPT-4o | $20/mo (Plus), $200/mo (Pro) | General use |
| Claude | Limited access | $20/mo (Pro) | Coding, writing |
| Gemini | Generous free | $20/mo (Advanced) | Google users |
| Grok | X Premium | X Premium+ | X power users |
| Perplexity | 5 Pro searches/day | $20/mo (Pro) | Research |
| DeepSeek | Generous free | API (cheap) | Cost-conscious |
| Qwen | Free (open source) | Alibaba Cloud API | Open source |
| Kimi K2 | Free (open source) | Moonshot API | Agentic AI |
| Local (Ollama) | Free | Free | Privacy, control |

My Personal Setup (December 2025)

I use different tools for different things:

- Claude: Coding, long documents, nuanced writing

- ChatGPT: General tasks, voice mode, image generation

- Perplexity: Research with sources, current events

- Gemini: Google-integrated tasks, ultra-long documents

- DeepSeek: Math and scientific reasoning, cost-effective API calls

- Kimi K2: Agentic tasks, complex multi-step workflows

- Local LLaMA/Mistral: Sensitive content, offline work

This might seem complicated, but once you know each tool’s strengths, it becomes second nature. It is much like using different apps for email, notes, and calendar: each is optimized for its purpose.

Practical Recommendations by User Type

Individual User

- Start with free tiers on ChatGPT, Claude, and Gemini

- Try DeepSeek for a cost-effective alternative

- Use Perplexity for research with citations

- Upgrade one service to Pro based on your primary use case

Developer

- Claude API for coding (Opus 4.5 is worth the cost)

- Kimi K2 or DeepSeek for agentic workflows

- Local LLaMA/Mistral for testing and experimentation

- OpenAI API for access to o3 reasoning models

- DeepSeek for cost-effective high-volume inference
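For the local-model option in the list above, here is a minimal sketch of calling a model served by Ollama from Python using only the standard library. It assumes Ollama is running on its default local port (11434) and that you have already pulled a model; the `mistral` tag and the helper names `build_request` and `ask_local` are placeholders I chose for illustration, so substitute whatever model you actually have installed.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally served model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Requires a running Ollama server with the chosen model pulled.
    print(ask_local("Explain context windows in one sentence."))
```

Because the request never leaves `localhost`, this is the pattern that backs the "data never leaves your machine" claim for sensitive content.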

Enterprise

- Enterprise tiers for compliance and security (Azure OpenAI, AWS Bedrock)

- Amazon Nova 2 for AWS-integrated workflows at lower cost

- Consider Mistral for EU data sovereignty requirements

- Evaluate Chinese models (Qwen, DeepSeek) for specific cost/capability needs

Staying Current: Navigating Rapid Change

The AI landscape moves faster than any industry I’ve seen. Models that were state-of-the-art three months ago are already outdated. How do you keep up without drowning?

Model Release Velocity

New models are released every few weeks. Here’s how the pace of major releases has accelerated:

- 2022: ChatGPT launches, AI becomes mainstream

- 2023: GPT-4, Claude 2, Gemini—major annual releases

- 2024: Monthly model updates become normal

- 2025: Multiple significant releases per month

How I Stay Updated (Without Losing My Mind)

| Source | What You Get | Frequency |
|---|---|---|
| Company blogs | Official announcements | Weekly |
| AI Twitter/X | @sama, @karpathy, @ylecun | Daily |
| Newsletters | The Batch, The Neuron | Weekly |
| Reddit | r/LocalLLaMA, r/ChatGPT | Daily |
| YouTube | AI Explained, Two Minute Papers | Weekly |
| lmarena.ai | Head-to-head comparisons | Live |

My Strategy

- Pick 2-3 sources you’ll actually read consistently

- Try new models when they release—most have free tiers

- Focus on your use cases—not every release matters to you

- Quarterly review: Am I using the right tools? Has something better emerged?

Understanding Version Numbers

- Major versions (GPT-3 → 4 → 5): Huge capability jumps

- Minor versions (GPT-4 → 4o → 4.5): Meaningful improvements

- Point releases (Claude 3.5 Sonnet → updated): Bug fixes, refinements

- Codenames (o1, o3): Different model families or approaches

Red Flags and Hype

Some skepticism is healthy:

- “This will change everything” claims are usually overstated

- Benchmarks can be gamed—real-world testing matters more

- “AGI by next year” predictions are almost always wrong

- Wait for independent testing before switching tools

The Landscape in One View

Let me give you a mental model that ties everything together:

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#4f46e5', 'primaryTextColor': '#ffffff', 'primaryBorderColor': '#3730a3', 'lineColor': '#6366f1', 'fontSize': '16px' }}}%%

flowchart TB

subgraph Closed["Closed Source (API)"]

OpenAI["OpenAI<br/>GPT-5.2, o3"]

Anthropic["Anthropic<br/>Claude 4.5"]

Google["Google<br/>Gemini 3"]

xAI["xAI<br/>Grok 4"]

Amazon["Amazon<br/>Nova 2"]

end

subgraph Open["Open Source"]

Meta["Meta<br/>LLaMA 4"]

Mistral["Mistral<br/>Large 3"]

DeepSeek["DeepSeek<br/>V3.2"]

Qwen["Alibaba<br/>Qwen3"]

Kimi["Moonshot<br/>Kimi K2"]

end

subgraph Specialized["Specialized"]

Perplexity["Perplexity<br/>AI Search"]

MiniMax["MiniMax<br/>Video AI"]

HF["Hugging Face<br/>Infrastructure"]

end

User["You"] --> Closed

User --> Open

User --> Specialized

The Big Four (OpenAI, Anthropic, Google, Meta) set the pace. Western Challengers (Mistral, xAI, Amazon) add diversity and competition. Chinese Powerhouses (DeepSeek, Qwen, Kimi, MiniMax) prove frontier AI is global. Specialists (Perplexity, Hugging Face) fill critical niches.

Key Takeaways

Let’s distill this down to what you need to remember:

- The Big Four lead, but challengers are serious: OpenAI, Anthropic, Google, Meta—plus Mistral, xAI, DeepSeek, Qwen, and Kimi K2

- Each company has real strengths:

  - Claude for coding

  - Gemini for massive context (1M+ tokens)

  - ChatGPT for ecosystem breadth

  - LLaMA/Mistral for privacy and control

  - DeepSeek for cost-efficiency

  - Kimi K2 for agentic workflows

  - Grok for real-time data

  - Nova 2 for AWS integration

- Open source is thriving: Meta, Mistral, Alibaba, Moonshot, and DeepSeek provide frontier-level open models

- Use multiple tools: Different AI for different tasks—that’s the smart approach

- The landscape is now global: Chinese models compete at frontier levels

- Investment is massive: $150B+ annually, and hardware matters as much as algorithms

- Stay current, but don’t panic: Focus on your use cases, not every announcement

Your Action Plan

Here’s what to do next:

- Create free accounts on ChatGPT, Claude, and Gemini if you haven’t already

- Try DeepSeek at chat.deepseek.com for math and cost-effective generation

- Explore Perplexity at perplexity.ai for research with citations

- Check out Qwen at chat.qwenlm.ai for an open-source alternative

- If you’re a developer, try Kimi K2 for agentic workflows

- If you’re technical, install Ollama and run LLaMA 4 or Mistral locally

- Try the same complex prompt on 3 different models—experience the differences firsthand
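If you would rather script the last step than copy and paste between browser tabs, the comparison can be sketched as a tiny harness. This is a rough outline under stated assumptions: each model is represented by a plain function that takes a prompt and returns text, and the two stub functions below are hypothetical stand-ins where you would plug in real API clients.

```python
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send one prompt to every model and collect the answers by name.

    A failure in one model is recorded as text so the others still run.
    """
    results: Dict[str, str] = {}
    for name, ask in models.items():
        try:
            results[name] = ask(prompt)
        except Exception as exc:  # keep going if one provider fails
            results[name] = f"[error: {exc}]"
    return results


# Hypothetical stand-ins; replace with calls to the real services you use.
def stub_model_a(prompt: str) -> str:
    return f"Model A answer to: {prompt}"


def stub_model_b(prompt: str) -> str:
    return f"Model B answer to: {prompt}"


if __name__ == "__main__":
    answers = compare_models(
        "Summarize the trade-offs of open vs closed models.",
        {"model-a": stub_model_a, "model-b": stub_model_b},
    )
    for name, text in answers.items():
        print(f"--- {name} ---\n{text}\n")
```

Reading the answers side by side, on a prompt from your own work, tells you more than any leaderboard.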

What’s Next?

Now that you understand who the players are and how to choose between them, the next article dives into the technical fundamentals: Tokens, Context Windows & Parameters Demystified. You’ll learn the vocabulary that helps you use these tools more effectively.

Your learning path:

- ✅ What Are Large Language Models?

- ✅ The Evolution of AI

- ✅ How LLMs Are Trained

- ✅ You are here: Understanding the AI Landscape

- 📖 Next: Tokens, Context Windows & Parameters Demystified

- 📖 Prompt Engineering Fundamentals

Related Articles: