2025 wasn’t just another year in artificial intelligence—it was the year the industry’s center of gravity shifted. The dominance of a few Silicon Valley giants gave way to a multi-polar world where Chinese labs released frontier models under open licenses, reasoning became a standard capability, and AI agents moved from impressive demos to production deployments executing real tasks on real computers.

This comprehensive timeline documents what happened, when it happened, and why it mattered. Whether you’re a developer making build-vs-buy decisions, a business leader navigating AI strategy, or simply someone trying to understand the most transformative technology of our era, this guide will help you make sense of a year that moved faster than any before it.

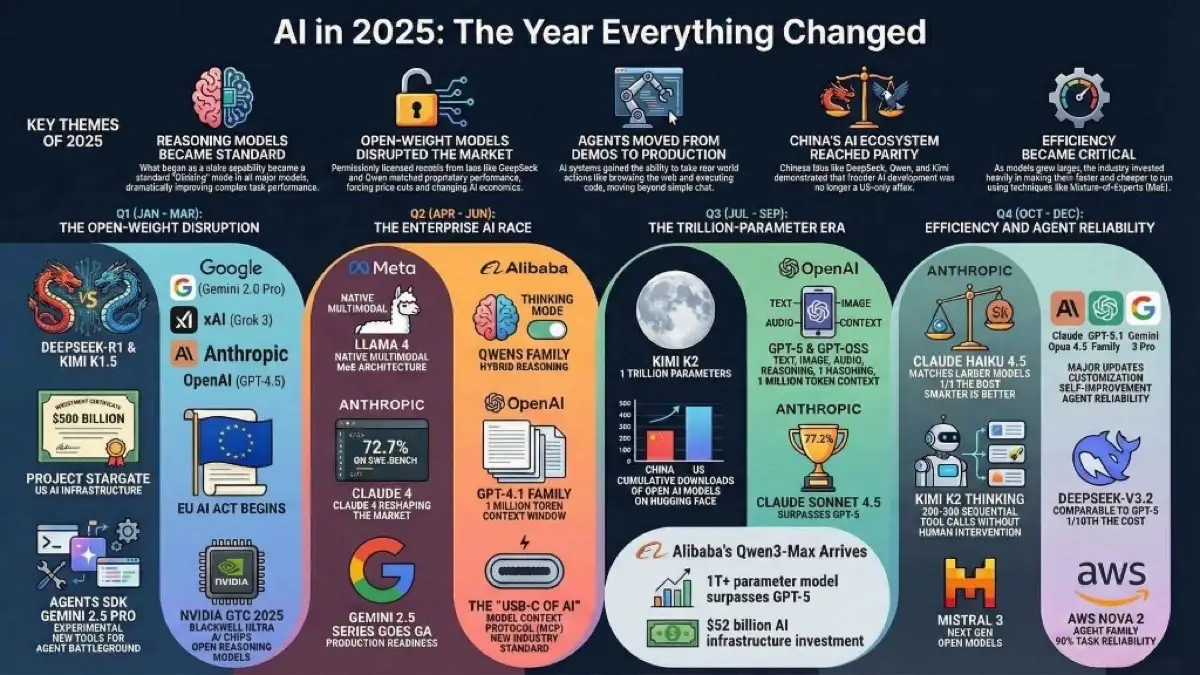

Five Themes That Defined AI in 2025

Before diving into the monthly breakdown, let’s establish the major themes that thread through this year:

- Reasoning Models Became Standard: What began as a specialized capability (OpenAI’s o1 in late 2024) became table stakes. By mid-2025, every major provider offered “thinking” or “reasoning” modes that dramatically improved performance on complex tasks.

- Open-Weight Models Disrupted the Market: DeepSeek, Qwen, and Kimi released models under permissive licenses that matched or exceeded proprietary alternatives. This forced dramatic price cuts and fundamentally changed the economics of AI deployment. Learn more about running open-weight models locally.

- Agents Moved from Demos to Production: The launch of OpenAI’s Operator, Anthropic’s Claude Computer Use, and standardized protocols like MCP meant AI systems could finally take actions in the real world: browsing the web, executing code, and managing files.

- China’s AI Ecosystem Reached Parity: Chinese labs didn’t just catch up; in some domains, they led. DeepSeek-R1, Qwen3-Max, and Kimi K2 demonstrated that frontier AI development was no longer a US-only affair. See our AI landscape overview for context.

- Efficiency and Inference Optimization Became Critical: As models grew larger, the industry invested heavily in making them faster and cheaper to run. Mixture-of-Experts architectures, speculative decoding, and inference optimization became as important as raw capability.


📅 January 2025

January set the tone for the entire year with seismic announcements that reshaped competitive dynamics in AI—from China’s open-weight disruption to a historic $500 billion infrastructure commitment.

🔹 Major Model Launches

DeepSeek-R1 (January 20, 2025)

- Organization: DeepSeek (China)

- Model Type: Open-weight reasoning model (671B total / 37B active parameters)

- What Made It Notable: Released under the MIT license, DeepSeek-R1 matched OpenAI’s o1 on mathematical reasoning and coding benchmarks—but it was fully open and commercially usable. Key highlights:

- Achieved 79.8% Pass@1 on AIME 2024 and 97.3% on MATH-500

- Used Mixture-of-Experts architecture for cost-effective inference

- Built-in explainability with step-by-step reasoning output

- Trained for approximately $6 million—a fraction of competitors’ costs

- Distilled versions (1.5B to 70B parameters) made reasoning accessible on consumer hardware

- The DeepSeek chatbot app launched on iOS/Android on January 10, 2025

Kimi K1.5 (January 20, 2025)

- Organization: Moonshot AI (China)

- Model Type: Multimodal reasoning model

- What Made It Notable: Released the same day as DeepSeek-R1, Kimi K1.5 underscored that several Chinese labs were challenging Western AI dominance at once. It matched o1 in mathematics and coding while adding multimodal capabilities (text, images, and video processing) that o1 lacked.

OpenAI o3-mini (January 31, 2025)

- Organization: OpenAI

- Model Type: Cost-efficient reasoning model

- What Made It Notable: OpenAI’s answer to the efficiency challenge, o3-mini offered strong STEM performance (math, coding, science) with three reasoning effort levels (low, medium, high). Made available to:

- ChatGPT Free users (via “Reason” option)

- Plus/Team users (150 messages/day, up from 50)

- API developers with function calling and structured outputs

Gemini 2.0 Flash (January 30, 2025)

- Organization: Google DeepMind

- Model Type: Frontier multimodal model

- What Made It Notable: Became Google’s new default model in the Gemini app, offering improved speed and efficiency over Gemini 1.5 while maintaining strong performance across text, image, and code tasks.

Gemini 2.0 Flash Thinking (January 21, 2025)

- Organization: Google DeepMind

- Model Type: Experimental reasoning model

- What Made It Notable: Google’s experimental entry into explicit reasoning models, showing the model’s chain of thought during inference.

🔹 Product & Platform Updates

- OpenAI Operator (January 23, 2025): Launched as a research preview for ChatGPT Pro users in the US. It was OpenAI’s first publicly released AI agent, capable of navigating websites and completing multi-step tasks like booking travel, filling forms, and managing workflows without needing custom APIs.

- Stargate Project Announced (January 21, 2025): OpenAI, SoftBank, Oracle, and MGX announced a joint venture to invest up to $500 billion in AI infrastructure in the United States by 2029. Announced at the White House with President Trump, this was called “the largest AI infrastructure project in history.” Construction began immediately on data centers in Texas.

- NVIDIA Llama Nemotron Family: Announced at CES (January 6), these models (Nano 4B, Super 49B, Ultra 253B) were optimized for agentic tasks on NVIDIA hardware, available as NIM microservices.

- Gemini Live Expansion: Google expanded Gemini Live to incorporate images, files, and YouTube videos into conversations.

🔹 Technical & Research Breakthroughs

- Reinforcement Learning for Reasoning: DeepSeek’s R1 work demonstrated that pure reinforcement learning (using Group Relative Policy Optimization, or GRPO), applied in the R1-Zero variant without supervised fine-tuning on chain-of-thought data, could produce strong reasoning behavior.

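- Cost-Efficient Training: DeepSeek’s reported training cost of roughly $6 million, a figure that covered the final training run of its V3 base model rather than total research spending, challenged the assumption that frontier AI required billions in compute investment.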

- MCP Adoption Begins: The Model Context Protocol, initially released by Anthropic in late 2024, started gaining traction as a standard for connecting AI models to external tools and data sources.
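The group-relative idea behind GRPO, named in the reinforcement learning item above, can be sketched in a few lines. This is a minimal illustration, not DeepSeek's training code: it assumes the commonly described form in which several answers are sampled for one prompt and each answer's reward is normalized against the mean and standard deviation of its own group. The function name, the binary rewards, and the choice of population standard deviation are assumptions made for the example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to the other answers in its group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std over the group
    # eps avoids division by zero when every answer gets the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one prompt, rewarded 1.0 if correct and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Correct answers end up with positive advantages and incorrect ones with negative advantages, so no separately trained value model is needed to provide a baseline.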

🔹 Why It Mattered

January 2025 shattered the assumption that frontier AI required Western-scale budgets and proprietary development. DeepSeek-R1’s MIT license meant any developer could run, modify, and deploy a reasoning model without API costs or geographic restrictions. The Stargate announcement signaled that the US was treating AI infrastructure as a national priority, while Chinese labs demonstrated they could compete at a fraction of the cost.

🔹 Looking Ahead

January’s open-weight releases set the stage for the “Sputnik moment” narrative that would dominate AI discourse throughout 2025, as policymakers and executives grappled with China’s rapid advancement.

📅 February 2025

February saw Western labs respond to January’s open-weight disruption with their own major releases.

🔹 Major Model Launches

Gemini 2.0 Pro (February 5, 2025)

- Organization: Google DeepMind

- Model Type: Frontier multimodal model

- What Made It Notable: Google’s most capable model at release, featuring:

- Massive 2 million token context window

- Enhanced performance for coding and complex prompts

- Integration with Google Search and code execution tools

- Available via Google AI Studio, Vertex AI, and Gemini Advanced

xAI Grok 3 (February 17, 2025)

- Organization: xAI

- Model Type: Frontier multimodal model with reasoning modes

- What Made It Notable: Elon Musk called it “the smartest AI on Earth” and “an order of magnitude more powerful” than Grok 2. Trained on 200,000 NVIDIA H100 GPUs via the Colossus supercomputer, Grok 3 introduced:

- DeepSearch: Real-time internet analysis with reasoning about conflicting information

- Big Brain Mode: Enhanced processing for complex analytical tasks

- Think Mode: Multi-step logical reasoning

- 128K+ token context window (up to 1M in advanced versions)

- Made free for all users on February 20 (initially for a “short time” but never disabled)

Claude 3.7 Sonnet & Claude Code (February 24, 2025)

- Organization: Anthropic

- Model Type: Hybrid reasoning model + coding agent

- What Made It Notable: Claude 3.7 Sonnet was the first hybrid reasoning model on the market, offering both rapid responses and extended step-by-step thinking. Claude Code launched as a terminal-based tool enabling developers to delegate engineering tasks, edit files, run bash commands, and commit to GitHub directly from the command line.

GPT-4.5 (February 27, 2025)

- Organization: OpenAI

- Model Type: Frontier chat/general-purpose LLM (research preview, codename “Orion”)

- What Made It Notable: OpenAI’s largest GPT-4 series model, offering:

- Improved pattern recognition and creative insights via scaled unsupervised learning

- Greater “emotional intelligence” (EQ) and conversational nuance

- Significantly reduced hallucination rate compared to previous models

- Sam Altman described it as the first model that “feels like talking to a thoughtful person”

- Initially for Pro users, then Plus/Team/Enterprise

- Note: Compute-intensive and expensive—not intended to replace GPT-4o

🔹 Product & Platform Updates

- Grok Free Access (February 20): xAI made Grok 3 free for all X users, challenging OpenAI’s freemium model.

- Qwen 2.5-Max Announcement: Alibaba teased its most powerful model yet, with full release coming later.

- Gemini 2.0 Flash GA: Google announced general availability of Gemini 2.0 Flash alongside the Pro experimental release.

🔹 Technical & Research Breakthroughs

- EU AI Act First Provisions (February 2, 2025): The first provisions of the EU AI Act came into force, including prohibitions on certain AI practices (like social scoring and emotion recognition in workplaces) and requirements for AI literacy among employees. This marked the beginning of comprehensive AI regulation in Europe.

- Hybrid Reasoning Models: Claude 3.7 Sonnet introduced the concept of “extended thinking” that could be toggled on and off—a pattern that would spread across the industry.

🔹 Why It Mattered

February demonstrated that competition in AI was now multi-dimensional: OpenAI competed on conversational quality and EQ, Google on multimodality and context length, xAI on speed and real-time information, and Anthropic on developer tools and coding. No single company could claim clear leadership across all dimensions.

🔹 Looking Ahead

Grok 3’s emphasis on real-time information and internet connectivity foreshadowed the agent-focused developments that would accelerate throughout the year. Claude 3.7 Sonnet’s hybrid reasoning hinted at what Claude 4 would bring.

📅 March 2025

March was packed with releases across the industry—from Google’s reasoning breakthrough to OpenAI’s agent-building tools and NVIDIA’s hardware announcements at GTC.

🔹 Major Model Launches

Gemini 2.5 Pro Experimental (March 25, 2025)

- Organization: Google DeepMind

- Model Type: Frontier reasoning (“thinking”) model

- What Made It Notable: Google’s most intelligent model at release, featuring:

- Enhanced reasoning that works through problems step by step before responding

- 1 million token context window

- Strong improvements in coding and complex prompt handling

- Designed to compete directly with OpenAI o1 and DeepSeek-R1

Gemma 3 (March 2025)

- Organization: Google DeepMind

- Model Type: Open-weight lightweight model family

- What Made It Notable: A major open-weight release featuring:

- Sizes from 1B to 27B parameters

- 128K token context window

- Multimodal capabilities (image analysis)

- Support for 140 languages

- Improved math, reasoning, and chat performance

DeepSeek-V3-0324 (March 24, 2025)

- Organization: DeepSeek

- Model Type: Open-weight general-purpose LLM

- What Made It Notable: A mid-cycle update to DeepSeek’s flagship model, improving on already-strong coding and reasoning benchmarks while maintaining MIT licensing. Continued to challenge the assumption that frontier AI required massive funding.

Qwen2.5-VL-32B-Instruct (March 24, 2025)

- Organization: Alibaba Cloud

- Model Type: Open-weight vision-language model

- What Made It Notable: A powerful mid-sized vision-language model that demonstrated strong visual understanding at a fraction of the compute required by larger competitors.

Qwen2.5-Omni-7B (March 26, 2025)

- Organization: Alibaba Cloud

- Model Type: Open-weight omni-modal model

- What Made It Notable: A truly multimodal model capable of processing text, images, videos, and audio inputs while generating both text and audio outputs—optimized for smartphones and laptops, enabling real-time voice conversations.

🔹 Product & Platform Updates

- OpenAI 4o Image Generation: Unveiled improved image generation with better text rendering, follow-up prompt refinement, and linked knowledge between text and images.

- OpenAI Agents SDK & Responses API: New tools for building AI agents, simplifying creation and management of complex multi-step task automation.

- OpenAI Audio Models for API: New audio models designed for voice agents with improved performance in noisy environments and with accents.

- xAI Image Generation API: Elon Musk’s xAI entered visual AI with the “grok-2-image-1212” model and Image Generation API.

- Manus AI Agent (Monica): Chinese startup Monica introduced Manus, an advanced AI agent capable of executing complex tasks autonomously—foreshadowing the year’s agent focus.

- Microsoft Reasoning Agents: New reasoning agents introduced within Microsoft 365 Copilot.

🔹 Technical & Research Breakthroughs

- NVIDIA GTC 2025 Highlights:

- Blackwell Ultra AI chips unveiled for next-gen AI training

- Llama Nemotron: Family of open reasoning AI models based on Meta’s Llama

- GR00T N1: AI foundation model for humanoid robotics

- NVIDIA Dynamo: AI factory operating system

- Gemini Robotics: Google DeepMind announced robotics capabilities integrating language, vision, and action.

- Mixture-of-Experts Maturation: Multiple releases demonstrated that MoE architectures could achieve frontier performance at dramatically lower inference costs.

🔹 Why It Mattered

March established AI agents as the central competitive battleground. OpenAI, Google, Microsoft, and Chinese startups all released agent-building tools. Meanwhile, NVIDIA’s GTC showed that hardware innovation was accelerating to match software advancement. Multimodality became expected, not exceptional.

🔹 Looking Ahead

The release of Gemini 2.5 Pro Experimental and OpenAI’s agent tools signaled that reasoning and agentic AI would define the year’s second half.

📅 April 2025

April was dominated by Meta’s re-entry into the frontier model race with Llama 4, alongside significant releases from China and OpenAI’s o-series going GA.

🔹 Major Model Launches

Meta Llama 4 (Scout & Maverick) (April 5, 2025)

- Organization: Meta

- Model Type: Open-weight multimodal MoE models

- What Made It Notable: Meta’s first native multimodal models, accepting both text and image inputs. The mixture-of-experts architecture dramatically improved efficiency:

- Llama 4 Scout: Designed for faster inference with extensive context window

- Llama 4 Maverick: Larger, more capable variant for demanding applications

- Llama 4 Behemoth: The largest model, still in training as a “teacher model”

The release maintained Meta’s “open” licensing, though community feedback was mixed, with some disappointment that real-world performance fell short of the benchmark results Meta had reported.

Kimi-VL (April 11, 2025)

- Organization: Moonshot AI

- Model Type: Open-weight vision-language MoE model

- What Made It Notable: A remarkably efficient VLM that demonstrated the rapid maturation of Chinese multimodal capabilities:

- 16B total parameters with only 2.8B active during inference

- 128K token context window for processing extensive documents

- MoonViT: Native-resolution visual encoder for high-resolution processing

- Strong performance in OCR, mathematical reasoning, and multi-image understanding

- Variants include Instruct and Thinking versions

OpenAI o3 & o4-mini GA (April 16, 2025)

- Organization: OpenAI

- Model Type: Reasoning models (General Availability)

- What Made It Notable: OpenAI’s o3 and o4-mini reasoning models moved from preview to general availability, featuring tool calling, structured outputs, and improved performance on complex reasoning tasks.

Qwen3 Family (April 29, 2025)

- Organization: Alibaba Cloud

- Model Type: Open-weight LLM family (dense and sparse models)

- What Made It Notable: A comprehensive release featuring hybrid reasoning:

- Thinking Mode: Step-by-step reasoning for complex logic, math, and coding

- Non-Thinking Mode: Near-instant responses for simpler queries

- Toggle-able thinking duration for user control

- Dense models from 0.6B to 32B parameters

- Sparse MoE models: 30B-A3B and 235B-A22B (flagship)

- Training on 36 trillion tokens across 119 languages

- Apache 2.0 license and MCP support for tool integration

- 32K native context, extendable to 131K tokens via YaRN

Qwen3 established Alibaba as a peer to Meta in the open-weight ecosystem.
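The jump from 32K native context to roughly 131K tokens in the Qwen3 notes above is simple arithmetic once a scaling factor is fixed. The sketch below assumes a YaRN factor of 4.0 and a config fragment shaped like a Hugging Face style `rope_scaling` entry; both are illustrative assumptions, so check the model card before relying on the exact keys.

```python
NATIVE_CONTEXT = 32_768  # "32K native context" from the release notes
YARN_FACTOR = 4.0        # assumed scaling factor for this example


def extended_context(native_tokens: int, factor: float) -> int:
    """Context length after stretching the position range by `factor`."""
    return int(native_tokens * factor)


# Hypothetical config fragment; key names are assumptions for illustration.
rope_scaling = {
    "rope_type": "yarn",
    "factor": YARN_FACTOR,
    "original_max_position_embeddings": NATIVE_CONTEXT,
}

print(extended_context(NATIVE_CONTEXT, YARN_FACTOR))  # 131072
```

A factor of 4 applied to 32,768 positions gives 131,072, which is where the "131K" figure comes from.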

🔹 Product & Platform Updates

- Llama 3.2 on ISS: Meta deployed Llama 3.2 aboard the International Space Station in partnership with Booz Allen Hamilton and HPE—symbolizing how pervasive LLM deployment had become.

- Grok API Launch: xAI released its API in April, enabling developers to integrate Grok 3 into their applications.

- US AI Bills Introduced: Congress introduced several AI-related bills including the COPIED Act (content provenance standards) and the NO FAKES Act (protections against AI-generated imitations).

🔹 Technical & Research Breakthroughs

- Native Multimodality: Llama 4 demonstrated an architecture trained from the start on multimodal data, resulting in more seamless integration between modalities.

- Hybrid Thinking Architecture: Qwen3’s toggle-able thinking modes introduced a new paradigm where users could choose between speed and reasoning depth at inference time.

🔹 Why It Mattered

April’s releases meant developers now had multiple tier-1 open-weight options for multimodal AI. Qwen3’s hybrid reasoning architecture offered flexibility that proprietary models didn’t yet match. Combined with o3/o4-mini going GA, the gap between open and closed models continued to narrow.

🔹 Looking Ahead

The Llama 4 release set expectations for Meta’s continued open-weight leadership, while Qwen3’s breadth signaled Alibaba’s ambition to own the full spectrum from edge to cloud.

📅 May 2025

May brought what many consider the most significant commercial AI release of 2025: Claude 4, alongside major announcements at Google I/O and Apple’s WWDC.

🔹 Major Model Launches

Claude Opus 4 & Claude Sonnet 4 (May 22, 2025)

- Organization: Anthropic

- Model Type: Frontier hybrid reasoning models

- What Made It Notable: Claude 4 represented Anthropic’s most significant leap in reasoning and agentic capabilities:

- 200K token context window for both Opus 4 and Sonnet 4

- Hybrid reasoning modes: Toggle between instant responses and extended thinking

- Extended Thinking with Tool Use (beta): Seamless integration of reasoning with web search and APIs

- Parallel tool usage and improved memory management

- Claude Code moved from research preview to general availability with 32K output tokens

- SWE-bench scores: Opus 4 (72.5%), Sonnet 4 (72.7%)—industry-leading coding performance

- Safety levels: Opus 4 (ASL-3), Sonnet 4 (ASL-2)

- Pricing: Opus 4 ($15/M input, $75/M output), Sonnet 4 ($3/M input, $15/M output)

Available via Anthropic API, Claude.ai (free tier for Sonnet 4), Amazon Bedrock, Google Vertex AI, and GitHub Copilot.

GPT-4.1 Family (API: April 14, 2025 | ChatGPT: May 14, 2025)

- Organization: OpenAI

- Model Type: Developer-focused multimodal model family

- What Made It Notable: A complete family designed for coding and complex instructions:

- GPT-4.1: Flagship with 1 million token context and a 21.4 percentage-point improvement over GPT-4o on SWE-bench Verified

- GPT-4.1 mini: 50% lower latency, comparable performance to GPT-4o ($0.40/M input, $1.60/M output)

- GPT-4.1 nano: Fastest and cheapest, optimized for autocomplete and classification ($0.10/M input, $0.40/M output)

- 49% on instruction-following benchmark (vs GPT-4o’s 29%)

- Full multimodal support (text + image)

- Replaced GPT-4.5 Preview, which was deprecated

Grok 3 on Azure (May 2025)

- Organization: xAI / Microsoft

- Model Type: Cloud deployment

- What Made It Notable: xAI’s first major cloud partnership, making Grok 3 available on Microsoft Azure and signaling xAI’s enterprise ambitions.

🔹 Product & Platform Updates

- Google I/O 2025 Highlights:

- AI Mode for Google Search: Generative AI embedded directly into search

- Google AI Ultra subscription plan for premium features

- Flow: AI filmmaking tool

- Veo 3: State-of-the-art video generation model

- Apple WWDC - Apple Intelligence: Apple announced “Apple Intelligence” with on-device AI processing, Genmoji (AI-generated emojis), Visual Intelligence, and advanced writing tools across iOS, iPadOS, and macOS.

- Midjourney V7: Released with 40% faster rendering speed.

- OpenAI Responses API Upgrade: Streaming, multi-round editing, and MCP tool integration.

- Claude Computer Use Expansion: Anthropic expanded Claude’s ability to interact with computer interfaces.

- TAKE IT DOWN Act: The US enacted legislation criminalizing the publication of nonconsensual intimate imagery, including AI-generated deepfakes.

🔹 Technical & Research Breakthroughs

- Extended Thinking with Tool Use: Claude 4’s ability to reason continuously while calling external tools represented a significant advancement in agentic capability.

- On-Device AI: Apple Intelligence demonstrated that significant AI processing could occur locally on consumer devices without cloud dependency.

🔹 Why It Mattered

Claude 4’s release reshaped the competitive landscape for enterprise AI. Anthropic’s focus on safety, extended thinking, and developer experience attracted enterprises that had previously defaulted to OpenAI. The combination of strong coding performance (72%+ on SWE-bench) and computer use capabilities made Claude the preferred choice for many agent-building teams. Meanwhile, GPT-4.1’s 1M token context opened new possibilities for document-heavy workflows.

🔹 Looking Ahead

May set the stage for OpenAI’s GPT-5 reveal, as the pressure to respond to Claude 4’s agentic capabilities and the open-weight models intensified.

📅 June 2025

June focused on filling out product lines and expanding enterprise capabilities, with major releases from Google and OpenAI plus significant developer tools.

🔹 Major Model Launches

OpenAI o3-pro (June 10, 2025)

- Organization: OpenAI

- Model Type: Premium reasoning model

- What Made It Notable: OpenAI’s most capable reasoning model, designed for complex problem-solving:

- Thinks longer for more reliable, comprehensive responses

- Tool integration: Web browsing, file analysis, Python execution, visual inputs, and memory

- Strong performance on AIME 2025 and GPQA Diamond benchmarks

- Pricing: $20/M input, $80/M output

- Launched alongside an 80% price cut for base o3 model

- Available to ChatGPT Pro, Team, and API users
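To make the per-million-token prices quoted in this timeline concrete, here is a small cost estimator. The prices are copied from the entries above; the dictionary keys are informal labels chosen for this example rather than official API model identifiers, and the 50,000-in / 2,000-out request size is an arbitrary assumption.

```python
# USD per million tokens as (input, output), taken from the figures quoted above.
PRICES = {
    "claude-opus-4": (15.00, 75.00),
    "claude-sonnet-4": (3.00, 15.00),
    "gpt-4.1-mini": (0.40, 1.60),
    "gpt-4.1-nano": (0.10, 0.40),
    "o3-pro": (20.00, 80.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request at list price."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# A document-heavy request: 50,000 tokens in, 2,000 tokens out.
for model in PRICES:
    print(f"{model:16s} ${request_cost(model, 50_000, 2_000):.4f}")
```

At this request size the premium reasoning tier costs roughly 200 times as much as the cheapest model, which is why tier selection matters for high-volume workloads.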

Gemini 2.5 Pro & Gemini 2.5 Flash GA (June 17, 2025)

- Organization: Google DeepMind

- Model Type: Production-ready frontier models

- What Made It Notable: Google moved its 2.5 series from experimental to general availability:

- Gemini 2.5 Pro: Described as Google’s “most advanced reasoning model,” capable of solving complex problems across text, audio, images, video, and code

- Gemini 2.5 Flash: Optimized for speed and low cost, ideal for summarization and responsive chat

- Gemini 2.5 Flash-Lite: Even faster/cheaper tier for high-volume applications

- Native audio output and enhanced security across all models

Mistral Devstral (June 2025)

- Organization: Mistral AI (with All Hands AI)

- Model Type: Open-source agentic coding LLM

- What Made It Notable: Purpose-built for software engineering:

- Navigates entire codebases

- Performs iterative multi-file edits

- Resolves real-world GitHub issues through code agent frameworks

- Open-source release signaling Mistral’s push into developer tools

Kimi-Dev (June 2025)

- Organization: Moonshot AI

- Model Type: Open-weight coding model (72B parameters)

- What Made It Notable: A large coding-focused model demonstrating Moonshot AI’s expansion into specialized domains.

🔹 Product & Platform Updates

- Google AlphaEvolve: DeepMind introduced AlphaEvolve, an AI coding agent using LLMs and evolutionary algorithms to discover and optimize novel algorithms. Google reported early results in data center management and chip design.

- Gemini CLI: Open-source AI agent for developers, bringing Gemini capabilities to the command line. See our guide to CLI tools for AI.

- Midjourney V1 (Video): Midjourney released its first image-to-video generation model.

- Meta’s Scale AI Investment: Meta invested $14 billion to acquire 49% of Scale AI, signaling major commitment to ML operations.

- MCP Goes Mainstream: The Model Context Protocol saw accelerating adoption as the standard for connecting AI models to external tools, earning the nickname “USB-C of AI.”

- Enterprise AI Integrations: All major providers expanded enterprise offerings with enhanced security, compliance, and deployment options.
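For readers who have not seen MCP on the wire, the sketch below builds the kind of message a client sends when a model wants to invoke a tool. It assumes MCP's JSON-RPC 2.0 framing and the `tools/call` method; the tool name `search_files` and its arguments are hypothetical, and the transport (stdio or HTTP) is left out entirely.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id


def tool_call_request(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool exposed by some MCP server.
req = tool_call_request("search_files", {"query": "quarterly report"})
print(json.dumps(req, indent=2))
```

Because every server speaks the same message shape, a client written once can talk to any compliant tool server, which is the point of the "USB-C" comparison.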

🔹 Technical & Research Breakthroughs

- Inference Cost Optimization: Industry focus shifted from training to inference costs, with speculative decoding, quantization, and batching optimization becoming critical differentiators.

- AlphaEvolve’s Evolutionary Approach: Google’s combination of LLMs with evolutionary algorithms for code generation represented a novel approach to AI-assisted programming.
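Speculative decoding, one of the inference optimizations named above, is easier to follow with a toy example. The sketch below shows the greedy variant only: a cheap draft model proposes a few tokens, the expensive target model checks them, and everything after the first disagreement is discarded. The two "models" are hypothetical integer functions standing in for real networks, and production systems verify all proposed positions in a single batched pass and use a sampling-based acceptance rule rather than exact matching.

```python
def greedy_decode(next_token, prompt, n_new):
    """Reference decoder: ask the model for one token at a time."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(next_token(seq))
    return seq


def speculative_decode(target_next, draft_next, prompt, n_new, k=4):
    """Greedy speculative decoding: the draft proposes, the target verifies."""
    seq = list(prompt)
    goal = len(prompt) + n_new
    while len(seq) < goal:
        base = list(seq)
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal = []
        for _ in range(min(k, goal - len(base))):
            proposal.append(draft_next(base + proposal))
        # 2. The target checks each proposed position (looped here for clarity).
        for i, token in enumerate(proposal):
            expected = target_next(base + proposal[:i])
            seq.append(expected)
            if token != expected:
                break  # drop the rest of the draft after the first mismatch
    return seq


# Toy "models" over integer tokens (hypothetical stand-ins).
def target_next(seq):
    return (sum(seq) + len(seq)) % 10


def draft_next(seq):
    guess = target_next(seq)
    # The draft is deliberately wrong whenever the prefix length is a multiple of 3.
    return guess if len(seq) % 3 else (guess + 1) % 10


prompt = [1, 2]
fast = speculative_decode(target_next, draft_next, prompt, n_new=12)
slow = greedy_decode(target_next, prompt, n_new=12)
print(fast == slow)
```

The key property is that the output is identical to what the target model would have produced on its own; the speedup comes from accepting several draft tokens per target pass when the draft is usually right.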

🔹 Why It Mattered

June’s releases showed the industry consolidating around proven architectures while competing aggressively on price and performance. The GA releases from Google indicated experimental capabilities were now production-ready. OpenAI’s 80% price cut on o3 signaled aggressive competition on pricing, while the o3-pro release maintained a premium tier for high-stakes reasoning tasks.

🔹 Looking Ahead

The stage was set for the summer’s major announcements, with all eyes on OpenAI’s GPT-5 and the next generation of Chinese open-weight models.

📅 July 2025

July brought one of the year’s most impactful open-weight releases (Kimi K2) alongside xAI’s Grok 4 and the emergence of true agent capabilities in consumer products.

🔹 Major Model Launches

Kimi K2 (July 2025)

- Organization: Moonshot AI

- Model Type: Open-weight MoE reasoning model (1 trillion parameters)

- What Made It Notable: The most ambitious open-weight release yet:

- 1 trillion total parameters with 32 billion active during inference

- Trained on 15.5 trillion tokens of data

- MuonClip optimizer for stable large-scale pre-training

- Released under modified MIT license for commercial use

- Two variants: Kimi-K2-Base (for fine-tuning) and Kimi-K2-Instruct (chat/agents)

- State-of-the-art performance on coding benchmarks, matching closed models

- Strong capabilities in knowledge, math, and agentic tasks

Kimi K2 represented a new tier of open-weight capability, with later updates expanding context to 256K tokens.
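The gap between total and active parameters is what makes these Mixture-of-Experts releases practical to serve. The short calculation below uses the parameter counts quoted in this timeline (in billions); the Qwen3 active count is read from the "A22B" suffix in the model name, which is an assumption about the naming convention.

```python
# (total parameters, active parameters per token), in billions.
MODELS = {
    "DeepSeek-R1": (671, 37),
    "Kimi-VL": (16, 2.8),
    "Qwen3-235B-A22B": (235, 22),  # active count inferred from the name
    "Kimi K2": (1000, 32),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's weights used to process each token."""
    return active_b / total_b


for name, (total, active) in MODELS.items():
    share = active_fraction(total, active)
    print(f"{name:16s} {share:.1%} of weights active per token")
```

Kimi K2 touches only about 3% of its weights for any given token, so its per-token compute is closer to that of a 32B dense model even though all one trillion parameters must still be held in memory.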

xAI Grok 4 (July 2025)

- Organization: xAI

- Model Type: Frontier reasoning model

- What Made It Notable: Elon Musk’s latest release emphasizing real-time reasoning and live data integration for conversational intelligence. An upgraded version (Grok 4.1) followed in November.

Mistral Voxtral (July 2025)

- Organization: Mistral AI

- Model Type: Open-weight speech understanding model

- What Made It Notable: Mistral’s entry into audio AI, offering state-of-the-art accuracy and native semantic understanding for speech-to-text applications.

Mistral Magistral Models (July 2025)

- Organization: Mistral AI

- Model Type: Dedicated reasoning models

- What Made It Notable: Magistral Small and Magistral Medium offered transparent, step-by-step logic for complex tasks with multilingual fluency.

MiniMax-M1 (July 2025)

- Organization: MiniMax

- Model Type: Open-weight hybrid-attention reasoning model

- What Made It Notable: A competitive open-weight alternative with hybrid attention mechanisms for improved reasoning.

🔹 Product & Platform Updates

- ChatGPT Agent Mode: OpenAI’s ChatGPT gained an agent mode, allowing autonomous handling of complex requests via Operator and deep research capabilities.

- GPT-4.5 Deprecated: OpenAI officially deprecated GPT-4.5 Preview as GPT-5 neared release.

- Claude Code Analytics Dashboard: Anthropic unveiled a new analytics dashboard for Claude Code, offering team usage insights.

- China Model Downloads Milestone: By July 2025, China surpassed the US in cumulative open model downloads on Hugging Face.

🔹 Technical & Research Breakthroughs

- Sakana AI Darwin Gödel Machine: A self-improving AI coding agent, representing novel approaches to autonomous code improvement.

- ICML 2025 (July 13-19, Vancouver): Major research presentations on scaling, reasoning, and agent architectures.

- World AI Conference (WAIC) (July 26-29): Showcased global AI developments with emphasis on Chinese lab advancements.

🔹 Why It Mattered

Kimi K2’s release demonstrated that trillion-parameter models were no longer exclusive to well-funded Western labs. The open licensing meant any organization could deploy frontier-class reasoning on their own infrastructure. Grok 4’s emphasis on real-time data showed xAI’s differentiated approach.

🔹 Looking Ahead

July’s releases intensified pressure on OpenAI to deliver GPT-5, while demonstrating that the open-weight ecosystem was now firmly at the frontier.

📅 August 2025

August was the month OpenAI unveiled GPT-5, marking a new chapter in the company’s history alongside strong responses from Anthropic and DeepSeek.

🔹 Major Model Launches

GPT-5 (August 7, 2025)

- Organization: OpenAI

- Model Type: Unified multimodal intelligent system

- What Made It Notable: OpenAI’s most ambitious release—a “super assistant” unifying previously separate capabilities:

- Seamless multimodal integration: Text, images, audio, and video in a single conversation

- Integrated o3 reasoning: Advanced multi-step logic and planning

- Large context: In the API, up to 272K input tokens and 128K output tokens (400K total)

- System of models: Real-time “router” selects the best model for each task

- Persistent memory: Remembers user preferences and writing style across sessions

- Reduced hallucinations: Below 10%, improved reliability for law/medicine

- “Strongest coding model to date”: Significant code generation improvements

- Three-tier access: Free (standard intelligence), Plus, and Pro (maximum capability)

- Simultaneous launch for ChatGPT, Microsoft Copilot, and OpenAI API

GPT-OSS (August 5, 2025)

- Organization: OpenAI

- Model Type: Open-weight reasoning models

- What Made It Notable: OpenAI’s first open-weight models with reasoning capabilities—a significant strategic shift in response to competitive pressure from DeepSeek, Qwen, and Kimi K2.

Claude Opus 4.1 (August 5, 2025)

- Organization: Anthropic

- Model Type: Frontier reasoning model

- What Made It Notable: Incremental but meaningful upgrade to Opus 4:

- SWE-bench Verified: 74.5% (up from 72.5%)

- GPQA Diamond: 80.9% graduate-level reasoning

- Enhanced agentic capabilities with 64K thinking tokens for complex tasks

- 200K context window with 32K output support

- Same pricing as Opus 4 ($15/$75 per M tokens)

- Available via Amazon Bedrock, Google Vertex AI, and GitHub Copilot

DeepSeek-V3.1 (August 21, 2025)

- Organization: DeepSeek

- Model Type: Open-weight hybrid reasoning model

- What Made It Notable: DeepSeek’s shift toward the “agent era”:

- Hybrid inference: “Think” mode (deepseek-reasoner) and “Non-Think” mode (deepseek-chat)

- DeepThink button for toggling between modes

- 128K token context for both modes

- 40%+ improvement on agent benchmarks (SWE-bench, Terminal-bench)

- Replaced separate R1 model with unified hybrid architecture

- Anthropic API format support and strict Function Calling

- 840B additional pre-training tokens for long-context capability
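The two modes above map to two model identifiers, so switching between them can be as simple as changing one field in the request. Here is a minimal sketch of that idea. The OpenAI-style chat payload shape and the helper name `build_chat_request` are assumptions made for illustration, and no network call is made.

```python
import json

# Model identifiers as named in the list above.
THINK_MODEL = "deepseek-reasoner"
NON_THINK_MODEL = "deepseek-chat"


def build_chat_request(prompt: str, think: bool) -> dict:
    """Build an OpenAI-style chat payload, picking the model by mode.

    The payload shape is an assumption for illustration; check the
    provider's API reference before sending it anywhere.
    """
    return {
        "model": THINK_MODEL if think else NON_THINK_MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    # Same prompt, two modes: only the "model" field differs.
    for think in (True, False):
        print(json.dumps(build_chat_request("Plan a refactor", think), indent=2))
```

Keeping the mode decision in one helper means an application can flip between fast and deliberate answers per request without touching the rest of its client code.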

Grok Code Fast 1 (August 2025)

- Organization: xAI

- Model Type: Specialized coding model

- What Made It Notable: Designed specifically for agentic coding tasks, reflecting xAI’s expansion into specialized agent models.

🔹 Product & Platform Updates

- OpenAI Agent Mode in ChatGPT: Operator fully integrated as “agent mode,” enabling autonomous task execution.

- EU GPAI Regulations Take Effect: On August 2, rules for General-Purpose AI models came into force, requiring transparency and training data disclosure.

- China AI Plus Formal Release: State Council officially released the comprehensive AI+ initiative.

🔹 Technical & Research Breakthroughs

- Unified Architecture: GPT-5’s integration of multiple modalities and reasoning into a single router-based system marked a shift from specialized model proliferation.

- Hybrid Reasoning Modes: DeepSeek V3.1’s toggle-able thinking depth showed the maturation of this pattern across the industry.
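To make the router idea concrete, here is a toy sketch of how a request might be sent to a fast model or a reasoning model. Everything in it is hypothetical: the backend names, the keyword list, and the length threshold are illustrative assumptions, and a production router would more likely rely on a learned classifier than on surface features.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str       # hypothetical backend name
    reasoning: bool  # whether to spend extra "thinking" tokens


# Hypothetical signal words; a real router would use a learned classifier.
HARD_HINTS = ("prove", "debug", "step by step", "optimize", "plan")


def route(prompt: str, long_prompt_chars: int = 2000) -> Route:
    """Pick a fast or a reasoning backend from cheap surface features."""
    text = prompt.lower()
    looks_hard = any(hint in text for hint in HARD_HINTS)
    if looks_hard or len(prompt) > long_prompt_chars:
        return Route(model="reasoning-model", reasoning=True)
    return Route(model="fast-model", reasoning=False)


if __name__ == "__main__":
    print(route("What is the capital of France?"))
    print(route("Debug this stack trace and plan a fix"))
```

The design point is that the caller sees one entry point while the system decides how much compute each request deserves.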

🔹 Why It Mattered

August established the new competitive landscape: OpenAI with unified multimodal intelligence, Anthropic with enterprise-grade reasoning and coding, and open-weight models matching proprietary capabilities. GPT-5’s freemium availability put frontier AI in the hands of millions, while DeepSeek’s hybrid architecture showed open-weight wasn’t far behind.

🔹 Looking Ahead

The August releases set up an intense fall competition, with all providers racing to refine their flagship models and expand agentic capabilities.

📅 September 2025

September saw major infrastructure announcements, the maturation of the open-weight ecosystem, and Anthropic’s strongest coding model to date.

🔹 Major Model Launches

Claude Sonnet 4.5 (September 29, 2025)

- Organization: Anthropic

- Model Type: Frontier reasoning/coding model

- What Made It Notable: Anthropic’s most capable model to date, setting the new standard for AI coding:

- SWE-bench Verified: 77.2%—leading coding benchmark

- OSWorld: 61.4%—leading real-world computer tasks

- 30+ hours autonomous coding capability with sustained focus

- 200K context window (1M tested internally)

- Claude Agent SDK: New tool for building long-running agents with memory, permissions, and subagent coordination

- Native VS Code extension and improved terminal interface

- File creation (spreadsheets, slides, docs) directly in conversations

- Described by Anthropic as its most aligned frontier model to date, with reduced sycophancy

- Same pricing: $3/M input, $15/M output

Qwen3-Max (Preview: September 5 | Official: September 23, 2025)

- Organization: Alibaba Cloud

- Model Type: Frontier closed-weight model (1+ trillion parameters)

- What Made It Notable: Alibaba’s largest and most capable LLM:

- 1+ trillion parameters, pre-trained on 36T tokens

- Surpassed GPT-5-Chat on LMArena text leaderboard

- SWE-bench Verified: 69.6%

- 1 million token context length

- Both Instruct and Thinking (Qwen3-Max-Thinking) variants

- Qwen3-Max-Thinking achieved 100% on AIME25 and HMMT math benchmarks

- Pricing: $0.90/M input, $3.40/M output (preview)

OpenAI Sora 2 (September 30, 2025)

- Organization: OpenAI

- Model Type: Advanced video/audio generation

- What Made It Notable: OpenAI’s next-generation video model with improved quality, consistency, and audio integration.

GPT-5 Codex (September 2025)

- Organization: OpenAI

- Model Type: Coding-tuned GPT-5 variant

- What Made It Notable: Became the default coding assistant, specifically optimized for software engineering workflows.

Qwen3-Omni (September 22, 2025)

- Organization: Alibaba Cloud

- Model Type: Real-time multimodal streaming model

- What Made It Notable: Open-source real-time multimodal processing with streaming text and speech responses, enabling voice-first AI applications.

Kimi-K2-Instruct-0905 (September 9, 2025)

- Organization: Moonshot AI

- Model Type: Updated instruction-following model

- What Made It Notable: Enhanced coding performance and a context window expanded to 256K tokens (up from 128K in the initial release).

🔹 Product & Platform Updates

- Alibaba Apsara Conference (September 19, 2025): Major announcements:

- RMB 380 billion ($52B) investment in AI and cloud infrastructure over three years

- Vision to position Qwen as an “AI operating system”

- Roadmap toward artificial superintelligence (ASI) by 2032

- Context length expansion goals from 1M to 100M tokens

- China AI Content Labeling Enforcement: Mandatory labeling of AI-generated content began.

- California SB 53: Legislative efforts to establish transparency and safety standards for powerful AI systems.

🔹 Technical & Research Breakthroughs

- Trillion-Parameter Competition: With Qwen3-Max (closed-weight) and Kimi K2 (open-weight), Chinese labs now offered trillion-parameter-class models in both closed and open variants.

- Agent SDK Emergence: Anthropic’s Claude Agent SDK showed the industry moving from basic tool calling to sophisticated agent orchestration frameworks.

🔹 Why It Mattered

September demonstrated that the AI industry had entered a new era of competition on capability, ecosystem, and infrastructure simultaneously. Alibaba’s massive investment signaled China’s commitment to US-hyperscaler-level AI infrastructure. Claude Sonnet 4.5’s dominance in coding benchmarks made it the go-to choice for AI-assisted development.

🔹 Looking Ahead

The announcements set up a dramatic Q4 with new model generations expected from multiple providers.

📅 October 2025

October brought efficiency-focused releases, major video AI advances, and significant platform launches across all major providers.

🔹 Major Model Launches

Claude Haiku 4.5 (October 15, 2025)

- Organization: Anthropic

- Model Type: Fast, cost-efficient reasoning model

- What Made It Notable: Anthropic’s fastest and most cost-efficient model, bringing flagship-level performance:

- 73.3% SWE-bench Verified—matches Claude Sonnet 4’s coding capability

- 50.7% computer use benchmark—surpasses Sonnet 4 in some tasks

- 200K token context with 64K output support

- First Haiku with extended thinking and computer use capabilities

- 4-5x faster than Sonnet 4.5 at 1/3 the cost

- Pricing: $1/M input, $5/M output

- Available via Anthropic API, Amazon Bedrock, and Google Vertex AI

OpenAI Sora 2 Rollout (October 2025, following the September 30 launch)

- Organization: OpenAI

- Model Type: Revolutionary video-audio generation

- What Made It Notable: Major advancement in video AI:

- Generates 60-second video clips with realistic physics and natural lighting

- High-fidelity, context-aware audio generation

- “Cameo” feature: Users can insert their likeness into generated videos

- iOS app achieved 1M+ downloads in first 5 days

GPT-5 Pro API (October 2025)

- Organization: OpenAI

- Model Type: Premium reasoning API

- What Made It Notable: OpenAI’s most powerful API offering:

- 400,000-token context window

- Designed for complex tasks: scientific research, legal analysis

- Available to developers via API

Gemini 2.5 Computer Use (October 2025)

- Organization: Google DeepMind

- Model Type: Agentic computer interaction model

- What Made It Notable: Enables AI agents to:

- Interact directly with user interfaces

- Navigate websites

- Complete complex multi-step tasks autonomously

Google Veo 3.1 (October 2025)

- Organization: Google DeepMind

- Model Type: Advanced video generation

- What Made It Notable: Major update focusing on narrative control:

- Extended clips

- Seamless transitions

- Creator-focused workflow

MiniMax M2 (October 2025)

- Organization: MiniMax

- Model Type: Open-weight MoE model for coding/agents

- What Made It Notable: Record scores on open model intelligence indexes, surpassing Gemini 2.5 Pro in some assessments. Compact, efficient architecture for coding and agents.

🔹 Product & Platform Updates

- OpenAI Atlas Browser: An AI-first web browser that replaces traditional search with a conversational, voice-driven interface and adds an “agent mode” for online tasks.

- Gemini Enterprise: Google’s “front door” for workplace AI, offering advanced Gemini models grounded in company data for building, deploying, and governing AI agents.

- OpenAI-NVIDIA 10GW Partnership: Announced partnership for massive compute infrastructure.

- Windows 11 AI Integration: Microsoft rolled out “Hey Copilot” and “Copilot Vision” features.

- Apple M5 Chip: Integrated into new devices, boosting on-device ML capability.

🔹 Technical & Research Breakthroughs

- Efficiency Over Scale: October’s releases suggested that efficient architecture mattered more than raw parameter count.

- Google AI Research: Breakthroughs in quantum algorithms (13,000x faster than supercomputers), AI for cancer therapy (Cell2Sentence-Scale), and fusion energy acceleration.

🔹 Why It Mattered

October showed that the “bigger is better” era was giving way to “smarter is better.” Haiku 4.5 matched Sonnet 4’s coding performance at a fraction of the cost, evidence that a small, efficient model could catch up with a larger one released about five months earlier. With Sora 2, video AI reached consumer-ready quality.

🔹 Looking Ahead

The focus on efficiency and multimodal integration set up the year-end releases pushing both capability and cost-effectiveness.

📅 November 2025

November brought flagship releases from multiple providers, the most customizable AI models yet, and significant progress in agentic reliability.

🔹 Major Model Launches

Claude Opus 4.5 (November 24, 2025)

- Organization: Anthropic

- Model Type: Flagship frontier model

- What Made It Notable: Anthropic’s most intelligent model, excelling across all dimensions:

- Reaches comparable SWE-bench scores with up to 65% fewer tokens, which lowers cost per task

- Self-improving AI agents that learn from experience

- Multi-file codebase navigation and autonomous debugging

- Long-context storytelling with strong organization

- Automatic context summarization for endless dialogue

- More resistant to prompt injection and jailbreaking

- New “effort parameter” API to balance speed/cost vs capability

- Available via Anthropic apps, API, and all major cloud platforms

- Excel, Chrome, and desktop integrations for everyday tasks

GPT-5.1 Family (November 12-19, 2025)

- Organization: OpenAI

- Model Type: Refined frontier models

- What Made It Notable: Major upgrade focusing on personalization and adaptive reasoning:

- GPT-5.1 Instant (Nov 12): High-speed responses, adaptive reasoning decides when to “think”

- GPT-5.1 Thinking (Nov 12): For complex multi-step tasks, enhanced logical consistency

- GPT-5.1-Codex-Max (Nov 19): Agentic coding, 24+ hour tasks, multi-step refactors

- GPT-5.1 Pro (Nov 19): Replaced GPT-5 Pro for ChatGPT Pro users

- Customization options: Tone selection (Friendly, Professional, Candid), personality settings

- Most customizable OpenAI model yet

Kimi K2 Thinking (November 6, 2025)

- Organization: Moonshot AI

- Model Type: Open-weight reasoning agent

- What Made It Notable: “Thinking agent” pushing open-source reasoning limits:

- 1 trillion parameters (32B active)

- 256K token context window

- Executes 200-300 sequential tool calls without human intervention

- State-of-the-art on HLE, BrowseComp, and SWE-bench Verified benchmarks

- Native INT4 quantization: 2x inference speedup without quality loss

- Transparent step-by-step reasoning visible to users

- Outperforms GPT-5 and Claude Sonnet 4.5 on specific tasks

- Available on Hugging Face, kimi.com, and via API
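A quick way to see why INT4 matters at this scale is to look at weight storage. The sketch below does the arithmetic for the 1 trillion parameters quoted above and includes a plain round-to-nearest INT4 quantizer. That quantizer is a generic textbook scheme chosen for illustration and is not a description of how Moonshot AI trained or quantized the model. It also says nothing about the quoted speedup, which depends on hardware and kernels.

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def quantize_int4(values: list[float]) -> tuple[list[int], float]:
    """Symmetric round-to-nearest quantization to the INT4 range [-8, 7]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs else 1.0
    codes = [max(-8, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    total_params = 1e12  # "1 trillion parameters" from the list above
    print("16-bit weights:", weight_memory_gb(total_params, 16), "GB")
    print("INT4 weights:  ", weight_memory_gb(total_params, 4), "GB")

    codes, scale = quantize_int4([0.9, -0.2, 0.33])
    print(codes, dequantize(codes, scale))
```

Each weight shrinks from 16 bits to 4, so storage drops by a factor of four, at the price of a rounding error of at most half a quantization step per weight in this simple scheme.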

Gemini 3 Pro (November 18, 2025)

- Organization: Google DeepMind

- Model Type: Next-generation frontier model

- What Made It Notable: Google’s most intelligent model:

- Excels in reasoning, multimodal understanding, and coding

- Deep Think mode: PhD-level reasoning for complex math/science/logic

- Advanced agentic workflows and autonomous coding

- Most secure Gemini model with reduced sycophancy and prompt injection resistance

- New API features: `thinking_level` control and `thought_signatures`

🔹 Product & Platform Updates

- EU AI Act Amendment Draft: European Commission proposed extended compliance timelines and reduced burdens for smaller companies.

- Grok 4.1 Update: xAI released upgraded version of Grok 4 with enhanced capabilities.

🔹 Technical & Research Breakthroughs

- Agent Reliability: Focus shifted from capability to reliability, with real-world deployment benchmarks becoming standard.

- Adaptive Reasoning: GPT-5.1’s dynamic “thinking” based on task complexity became a pattern across providers.

🔹 Why It Mattered

November showed how quickly the field was moving: models only a few months old were already being outclassed by new releases. Kimi K2 Thinking proved open-source could match proprietary frontier reasoning. Claude Opus 4.5’s efficiency gains showed the path to practical enterprise deployment.

🔹 Looking Ahead

November set up the year’s climactic December releases, with all providers preparing final 2025 announcements.

📅 December 2025

December brought the year’s final wave of releases, capping an extraordinary twelve months of AI advancement with major open-weight and proprietary releases.

🔹 Major Model Launches

DeepSeek-V3.2 & DeepSeek-V3.2-Speciale (December 1, 2025)

- Organization: DeepSeek

- Model Type: Open-weight reasoning-first agent models

- What Made It Notable: DeepSeek’s most advanced release, built for the agent era:

- First DeepSeek model with thinking directly integrated into tool-use

- 671B total parameters (37B active), MoE architecture

- DeepSeek Sparse Attention (DSA): Near-linear complexity for long contexts

- 131K token context for both thinking and non-thinking modes

- Training data: 1,800+ environments, 85,000+ complex instructions

- V3.2-Speciale: 96% AIME 2025, 99.2% HMMT 2025—rivaling Gemini 3 Pro

- Gold-level results in IMO, CMO, ICPC World Finals, IOI 2025

- Performance comparable to GPT-5 at ~1/10 the cost

- MIT license for broad commercial use
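The "near-linear" claim for sparse attention is easier to appreciate with a rough count of how many query-key pairs get scored. The sketch below compares dense causal attention with a scheme where each query attends to at most `top_k` earlier tokens. The value `top_k = 2048` is an illustrative assumption, and the count ignores the cost of choosing which tokens to keep, so treat it as a back-of-the-envelope model and not as a description of DeepSeek's implementation.

```python
def dense_pairs(seq_len: int) -> int:
    """Query-key pairs scored by causal dense attention: L * (L + 1) / 2."""
    return seq_len * (seq_len + 1) // 2


def sparse_pairs(seq_len: int, top_k: int) -> int:
    """Pairs scored when each query attends to at most top_k earlier tokens."""
    return sum(min(pos + 1, top_k) for pos in range(seq_len))


if __name__ == "__main__":
    top_k = 2048  # illustrative assumption, not a confirmed setting
    for seq_len in (8_192, 32_768, 131_072):
        dense = dense_pairs(seq_len)
        sparse = sparse_pairs(seq_len, top_k)
        print(f"L={seq_len:>7}: dense/sparse ratio = {dense / sparse:.1f}")
```

Dense cost grows with the square of the sequence length, while the capped version grows roughly in proportion to it, so the gap widens as contexts get longer.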

Mistral 3 Family (December 2, 2025)

- Organization: Mistral AI

- Model Type: Open-weight multimodal models

- What Made It Notable: Mistral’s next generation, trained on 3,000 NVIDIA H200 GPUs:

- Mistral Large 3: 675B total / 41B active parameters—best permissive open-weight multimodal model from Europe

- Excels in image understanding and multilingual conversations

- Ministral 3 family: Nine compact models across three sizes (3B, 8B, 14B) for edge deployment

- Optimized for NVIDIA Spark, RTX PCs, and Jetson devices

- Available on Mistral Studio, Amazon Bedrock, Azure Foundry, and Hugging Face

Mistral Devstral 2 (December 9, 2025)

- Organization: Mistral AI

- Model Type: Agentic coding model

- What Made It Notable: State-of-the-art open-source coding agent:

- 123B-parameter dense transformer

- 256K token context window

- Specializes in codebase exploration, multi-file changes, and bug fixing

Gemini 3 Flash (December 17, 2025)

- Organization: Google DeepMind

- Model Type: Fast, cost-efficient frontier model

- What Made It Notable: The speed tier of Gemini 3:

- Became default model in Gemini app globally

- Available in Google Search with “AI Mode”

- PhD-level reasoning with multimodal understanding

- Significantly lower cost than Pro variant

NVIDIA Nemotron 3 Nano (December 15, 2025)

- Organization: NVIDIA

- Model Type: Agentic AI-optimized model

- What Made It Notable: Hybrid Mamba-MoE architecture (30B total / 3.5B active):

- 4x higher token throughput than Nemotron 2 Nano

- Optimized for debugging, summarization, RAG, and AI assistants

Mistral OCR 3 (December 17, 2025)

- Organization: Mistral AI

- Model Type: Document processing API

- What Made It Notable: Transforms scanned PDFs into structured, AI-readable text with new accuracy levels.

Qwen-Image-2512 (December 31, 2025)

- Organization: Alibaba Cloud

- Model Type: Text-to-image model

- What Made It Notable: Top-ranked open-source image generation in blind tests, with enhanced human realism and text rendering.

Qwen3-TTS Models (December 22, 2025)

- Organization: Alibaba Cloud

- Model Type: Text-to-speech models

- What Made It Notable: Voice design and voice cloning capabilities, expanding Alibaba’s audio AI ecosystem.

🔹 Product & Platform Updates

- AWS re:Invent 2025: Amazon announced the Nova 2 family:

- Nova 2 Lite: Fast reasoning with 1M token context

- Nova 2 Pro: Complex multistep tasks (preview)

- Nova 2 Sonic: Speech-to-speech for real-time conversation

- Nova 2 Omni: Multimodal cross-modal reasoning (preview)

- Nova Forge: Custom model training service

- Nova Act: AI agent for UI automation, with a claimed 90% reliability

- Amazon Bedrock expanded to 100+ foundation models

- China Draft AI Rules: Draft regulations tightening controls on human-like AI, requiring user notification and security assessments.

🔹 Technical & Research Breakthroughs

- Agentic AI Reliability: December releases emphasized reliability metrics, with AWS claiming 90% success rates on browser tasks.

- Hybrid Architectures: NVIDIA’s Nemotron 3 demonstrated Mamba + MoE combination for superior agent efficiency.

- Sparse Attention Scaling: DeepSeek’s DSA showed path to efficient long-context processing without quadratic complexity.
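Reliability figures matter so much for agents because errors compound across steps. The sketch below works through that arithmetic under a simplifying assumption: each step succeeds independently with the same probability. The 0.9 used here is a hypothetical per-step rate for illustration. The 90% figure quoted above is a task-level claim, so the two numbers are not directly comparable.

```python
def chained_success(per_step: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps succeeds."""
    return per_step ** steps


def steps_until_below(per_step: float, target: float) -> int:
    """Smallest number of steps at which chained success drops below target."""
    if not 0.0 < per_step < 1.0:
        raise ValueError("per_step must be strictly between 0 and 1")
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    steps = 1
    while per_step ** steps >= target:
        steps += 1
    return steps


if __name__ == "__main__":
    for steps in (1, 5, 10, 20):
        print(steps, round(chained_success(0.9, steps), 3))
    print("steps until below 50%:", steps_until_below(0.9, 0.5))
```

Under these assumptions a workflow with many steps needs very high per-step reliability to finish at all, which is one reason providers started reporting end-to-end task success.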

🔹 Why It Mattered

December 2025 showed that the pace of AI advancement was accelerating, not slowing. Every major provider released new flagship models within weeks of each other. DeepSeek V3.2 at 1/10 the cost of GPT-5 with comparable performance demonstrated that open-source was now genuinely competitive at the frontier.

🔹 Looking Ahead

December set up 2026 as the year AI agents move from experimental to expected, with all major providers launching agent-focused services and models.

What 2025 Changed in AI

How Model Capabilities Evolved

At the start of 2025, “reasoning” was a premium capability limited to specialized models like OpenAI’s o1. By year-end, reasoning was table stakes—every major model family included thinking modes, chain-of-thought capabilities, and adjustable reasoning depth.

The definition of “frontier” shifted from raw benchmark performance to practical capability clusters (track these evolving scores in our LLM Benchmark Tracker):

- General Reasoning: Complex problem-solving and logical analysis

- Coding: Not just generation, but debugging, refactoring, and repository-scale understanding

- Multimodal Understanding: Seamless integration of text, image, audio, and video

- Agentic Capability: The ability to take actions, use tools, and complete multi-step tasks

The Rise of Agents and Autonomous Workflows

2025 was the year AI moved from answering questions to completing tasks. Key developments:

- Computer Use: Starting with Anthropic’s computer use capabilities and OpenAI’s Operator, AI systems learned to interact with graphical interfaces just as humans do. See our agentic browsers analysis.

- Tool Integration: The Model Context Protocol (MCP) became the standard for connecting AI to external tools and data sources, enabling reliable, standardized integrations.

- Agent Reliability: Early agents were impressive demos but unreliable in production. By year-end, providers were publishing reliability benchmarks and agents were handling real production workloads. Learn more about why AI agents fail.
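The shift from answering to acting comes down to a loop: the model proposes an action, the runtime executes a tool, and the result is fed back until the model produces a final answer. Here is a minimal sketch of that loop. The `policy` callable stands in for a model, the action dictionary format is a made-up convention for this example, and real systems (including MCP-based ones) define their own message schemas.

```python
from typing import Callable

Tool = Callable[[str], str]
Step = tuple[str, str, str]  # (tool name, tool input, tool result)


def run_agent(policy, tools: dict[str, Tool], goal: str, max_steps: int = 10) -> str:
    """Run a propose-act-observe loop until the policy returns a final answer."""
    history: list[Step] = []
    for _ in range(max_steps):
        action = policy(goal, history)
        if action["type"] == "final":
            return action["answer"]
        tool = tools.get(action["tool"])
        # Unknown tools become an observation so the policy can recover.
        result = tool(action["input"]) if tool else f"unknown tool: {action['tool']}"
        history.append((action["tool"], action["input"], result))
    raise RuntimeError("step budget exhausted before a final answer")


def add_tool(arg: str) -> str:
    """Hypothetical tool: add two whitespace-separated integers."""
    a, b = arg.split()
    return str(int(a) + int(b))


def scripted_policy(goal: str, history: list[Step]) -> dict:
    """Stand-in for a model: call the tool once, then answer."""
    if not history:
        return {"type": "tool", "tool": "add", "input": "2 3"}
    return {"type": "final", "answer": f"result={history[-1][2]}"}


if __name__ == "__main__":
    print(run_agent(scripted_policy, {"add": add_tool}, "add 2 and 3"))
```

The step budget is the piece that turns a demo into something deployable: without it, a confused policy can loop forever and run up costs.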

The Impact of Open-Weight Models

January’s DeepSeek-R1 release triggered a transformation in AI economics:

- Price Compression: API providers slashed prices throughout 2025, with some pricing dropping by 10x or more as open alternatives raised the competitive bar.

- Deployment Flexibility: Enterprises could now run frontier-class models locally, eliminating data governance concerns and API dependencies.

- Innovation Distribution: Open-weight models enabled thousands of fine-tuned variants for specific applications, accelerating the pace of applied AI development.

Shifts in Leadership

The US-only era of AI leadership definitively ended in 2025:

- China: DeepSeek, Qwen, Kimi, and MiniMax released models matching or exceeding Western alternatives, with China surpassing the US in open model downloads by mid-year.

- Europe: Mistral AI demonstrated that European labs could compete at the frontier, particularly for applications requiring European data sovereignty.

- Distributed Innovation: The combination of open-weight releases and cloud availability meant that AI innovation was no longer concentrated in a few cities but distributed globally.

The Changing Role of Hardware and Efficiency

Training costs continued to climb, but the industry’s focus shifted to inference:

- Mixture-of-Experts: MoE architectures became standard for large models, enabling trillion-parameter models that activate only a fraction of their parameters for each token.

- Inference Optimization: Techniques like speculative decoding, quantization, and batching optimization became critical competitive differentiators. Learn about tokens, context, and parameters.

- Edge Deployment: Efficient models like Mistral’s Ministral family and NVIDIA’s Nemotron Nano brought capable AI to edge devices and local deployments.
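The MoE point is easiest to see as a ratio of active to total parameters. The sketch below computes that ratio for four models using the parameter counts quoted earlier in this timeline. It is simple arithmetic on those published figures and says nothing about how each model routes tokens internally.

```python
# (total, active) parameter counts in billions, as quoted in this timeline.
MODELS = {
    "Kimi K2 Thinking": (1000, 32),
    "DeepSeek-V3.2": (671, 37),
    "Mistral Large 3": (675, 41),
    "Nemotron 3 Nano": (30, 3.5),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per token in a Mixture-of-Experts model."""
    return active_b / total_b


if __name__ == "__main__":
    for name, (total_b, active_b) in MODELS.items():
        share = active_fraction(total_b, active_b)
        print(f"{name:<18} {active_b:>5}B of {total_b:>5}B active ({share:.1%})")
```

In every case well under an eighth of the weights take part in any single token, which is why per-token compute tracks the active count while memory requirements track the total.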

Key Takeaways for Builders and Leaders

For Technical Teams

- Don’t default to GPT: The 2025 landscape offers multiple tier-1 options. Claude excels at coding and agents, Gemini at multimodal tasks, and open-weight models like Qwen3 and Kimi K2 offer deployment flexibility.

- Build for multiple backends: Use abstraction layers (like LangChain or LiteLLM) to avoid lock-in. Model leadership changes quarterly.

- Invest in MCP and tool integration: The Model Context Protocol is becoming the standard for connecting AI to external systems. Early adoption pays dividends.

- Don’t ignore open-weight options: For many use cases, Qwen, DeepSeek, or Llama offer equivalent quality to proprietary APIs at dramatically lower cost—especially at scale.
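For teams that want to see what "build for multiple backends" looks like in code, here is a minimal sketch of a provider-agnostic interface with ordered fallback. It uses only the standard library. `ChatBackend`, `EchoBackend`, and `complete_with_fallback` are hypothetical names invented for this example and are not the API of LangChain, LiteLLM, or any provider SDK.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Anything with a name and a complete() method can serve as a backend."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in backend; a real one would wrap a provider SDK call."""

    def __init__(self, name: str, fail: bool = False) -> None:
        self.name = name
        self.fail = fail

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise ConnectionError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(backends: list[ChatBackend], prompt: str) -> str:
    """Try each backend in order and return the first successful reply."""
    errors: list[str] = []
    for backend in backends:
        try:
            return backend.complete(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


if __name__ == "__main__":
    chain = [EchoBackend("primary", fail=True), EchoBackend("secondary")]
    print(complete_with_fallback(chain, "Summarize this ticket"))
```

Because application code depends only on the small interface, swapping the preferred model when leadership changes is a configuration change and not a rewrite.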

For Business Leaders

- AI is infrastructure now: Every competitor will have AI capabilities. Advantage comes from how you integrate AI into your specific workflows and data.

- Consider hybrid deployment: Some workloads benefit from proprietary API quality; others are better served by self-hosted open-weight models. Build for both.

- Plan for agents: AI that takes actions—not just answers questions—will define the next wave of competitive advantage. Start identifying workflows suitable for agent automation.

- Geographic diversification matters: Chinese models are not inferior alternatives; they’re tier-1 options with different strengths and trade-offs. Evaluate them on merit.

When to Use Frontier vs. Open-Weight Models

Use Frontier Proprietary APIs When:

- Absolute highest quality matters more than cost

- You need the latest capabilities immediately upon release

- Your use case benefits from provider-managed safety and compliance

- Scale is modest (thousands to low millions of queries/month)

Use Open-Weight Models When:

- Cost is a primary concern at scale

- Data sovereignty or privacy requirements prevent API usage

- You need fine-tuning or customization

- Deployment flexibility (edge, air-gapped, specific clouds) is required

- You’re building defensible differentiation through model customization
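Since cost at scale drives much of this decision, a small calculator helps turn per-token prices into a monthly figure. The sketch below uses the list prices quoted earlier in this timeline. The workload (one million requests per month, 2,000 input and 500 output tokens each) is a made-up assumption, and self-hosting costs such as GPUs and operations are deliberately left out.

```python
# (input, output) list prices in USD per million tokens, as quoted in this timeline.
PRICES = {
    "Claude Opus 4.1": (15.00, 75.00),
    "Claude Sonnet 4.5": (3.00, 15.00),
    "Claude Haiku 4.5": (1.00, 5.00),
    "Qwen3-Max (preview)": (0.90, 3.40),
}


def monthly_cost(model: str, requests: int, in_tokens: int, out_tokens: int) -> float:
    """Estimated monthly API spend in USD for a uniform workload."""
    in_price, out_price = PRICES[model]
    per_request = (in_tokens * in_price + out_tokens * out_price) / 1_000_000
    return requests * per_request


if __name__ == "__main__":
    # Hypothetical workload: 1M requests/month, 2,000 tokens in, 500 tokens out.
    for model in PRICES:
        cost = monthly_cost(model, 1_000_000, 2_000, 500)
        print(f"{model:<20} ${cost:,.0f}/month")
```

Running the same workload through several price points makes the trade-off concrete: the gap between tiers is a multiple, so the question becomes whether the quality difference is worth that multiple for a given task.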

Model Index Table

| Model | Organization | Release | Type | Key Capability |
|---|---|---|---|---|
| DeepSeek-R1 | DeepSeek | Jan 20, 2025 | Open-weight reasoning | MIT-licensed reasoning matching o1 |
| Kimi K1.5 | Moonshot AI | Jan 20, 2025 | Multimodal reasoning | Text, image, video reasoning |
| Gemini 2.0 Flash | Google | Jan 30, 2025 | Multimodal | New default model, speed focus |
| o3-mini | OpenAI | Jan 31, 2025 | Cost-efficient reasoning | STEM focus, free tier access |
| Gemini 2.0 Pro | Google | Feb 5, 2025 | Frontier multimodal | 2M token context window |
| Grok 3 | xAI | Feb 17, 2025 | Multimodal reasoning | DeepSearch, Big Brain, Think modes |
| Claude 3.7 Sonnet | Anthropic | Feb 24, 2025 | Hybrid reasoning | First hybrid reasoning model |
| GPT-4.5 | OpenAI | Feb 27, 2025 | Frontier chat | Codename “Orion”, reduced hallucinations |
| Gemma 3 | Google | Mar 2025 | Open-source | 128K context, 140 languages |
| Gemini 2.5 Pro Exp | Google | Mar 25, 2025 | Reasoning | Google’s thinking model entry |
| Llama 4 (Scout/Maverick) | Meta | Apr 5, 2025 | Open-weight multimodal | Native multimodal MoE architecture |
| Kimi-VL | Moonshot AI | Apr 11, 2025 | Open-weight VLM | 2.8B active, 128K context |
| o3 & o4-mini GA | OpenAI | Apr 16, 2025 | Reasoning (GA) | Tool calling, structured outputs |
| Qwen3 Family | Alibaba | Apr 29, 2025 | Open-weight LLM | Hybrid thinking, 119 languages |
| GPT-4.1 Family | OpenAI | Apr 14, 2025 | Developer-focused | 1M context, coding excellence |
| Claude Opus/Sonnet 4 | Anthropic | May 22, 2025 | Frontier reasoning | 200K context, 72%+ SWE-bench |
| o3-pro | OpenAI | Jun 10, 2025 | Premium reasoning | AIME/GPQA leader, tool use |
| Gemini 2.5 Pro GA | Google | Jun 17, 2025 | Production multimodal | GA with Flash-Lite tier |
| Mistral Devstral | Mistral | Jun 2025 | Open-source coding | Agentic software engineering |
| Kimi K2 | Moonshot AI | Jul 2025 | Open-weight MoE | 1T params, 15.5T training tokens |
| xAI Grok 4 | xAI | Jul 2025 | Frontier reasoning | Real-time data integration |
| Mistral Voxtral | Mistral | Jul 2025 | Open-weight audio | Speech understanding |
| GPT-5 | OpenAI | Aug 7, 2025 | Unified multimodal | 400K context, router-based system |
| GPT-OSS | OpenAI | Aug 5, 2025 | Open-weight reasoning | OpenAI’s first open models |
| Claude Opus 4.1 | Anthropic | Aug 5, 2025 | Frontier reasoning | 74.5% SWE-bench, 80.9% GPQA |
| DeepSeek-V3.1 | DeepSeek | Aug 21, 2025 | Hybrid reasoning | DeepThink, 128K context |
| Claude Sonnet 4.5 | Anthropic | Sep 29, 2025 | Frontier coding | 77.2% SWE-bench, 61.4% OSWorld |
| Qwen3-Max | Alibaba | Sep 5-23, 2025 | Closed frontier | 1T+ params, beats GPT-5-Chat on LMArena |
| Sora 2 | OpenAI | Sep 30, 2025 | Video generation | 60s clips, audio, cameo feature |
| Claude Haiku 4.5 | Anthropic | Oct 15, 2025 | Efficient reasoning | 73.3% SWE-bench, 4-5x faster |
| GPT-5 Pro API | OpenAI | Oct 2025 | Premium reasoning | 400K context, research grade |
| Gemini 2.5 Computer Use | Google | Oct 2025 | Agentic | UI interaction, navigation |
| MiniMax M2 | MiniMax | Oct 2025 | Open-weight agent | Record open model scores |
| Claude Opus 4.5 | Anthropic | Nov 24, 2025 | Flagship | 65% fewer tokens, self-improving |
| GPT-5.1 Family | OpenAI | Nov 12-19, 2025 | Refined frontier | Instant, Thinking, Codex-Max |
| Kimi K2 Thinking | Moonshot AI | Nov 6, 2025 | Open reasoning | 200-300 tool calls, HLE leader |
| Gemini 3 Pro | Google | Nov 18, 2025 | Next-gen frontier | Deep Think, PhD-level reasoning |
| DeepSeek-V3.2 | DeepSeek | Dec 1, 2025 | Open agent-first | GPT-5 level at 1/10 cost |
| Mistral Large 3 | Mistral | Dec 2, 2025 | Open multimodal | 675B, 3000 H200s trained |
| Devstral 2 | Mistral | Dec 9, 2025 | Agentic coding | 123B, 256K context |
| Gemini 3 Flash | Google | Dec 17, 2025 | Fast frontier | Default in Gemini app |
| Nemotron 3 Nano | NVIDIA | Dec 15, 2025 | Agentic-optimized | 4x throughput, Mamba+MoE |
| Nova 2 Family | Amazon | Dec 2025 | Cloud models | 1M context, 90% agent reliability |

FAQs

What was the most important AI model release of 2025?

It depends on your perspective. GPT-5 was the most anticipated and widely used release. DeepSeek-R1 was the most disruptive, demonstrating that open-weight models could match proprietary frontier capabilities. Claude 4 was arguably the most impactful for developers, setting new standards for AI-assisted coding and agents.

Did open-weight models catch up to proprietary models in 2025?

Yes, for most practical purposes. By year-end, models like Qwen3, Kimi K2, and DeepSeek-V3.2 matched or exceeded proprietary alternatives on benchmark performance. The remaining advantages of proprietary models were primarily in safety features, ease of use, and guaranteed availability—not raw capability. Learn how to run open-weight models locally.

Which model should I use for coding in 2026?

As of December 2025, Claude Sonnet 4.5/Opus 4.5 are the leaders for general coding tasks. For open-weight options, Kimi K2 and DeepSeek-V3.2 offer excellent performance. OpenAI’s GPT-5-Codex family is strong for specific coding workflows. The best choice depends on your deployment requirements and budget. See our AI-powered IDEs comparison for tool recommendations.

Are Chinese AI models safe to use?

Chinese AI models like Qwen, DeepSeek, and Kimi are technically capable and commercially licensed. However, they may have different content policies and training data than Western models. For most technical applications, they work excellently. For applications involving sensitive content, political topics, or regions with specific compliance requirements, evaluate carefully. Read more about AI safety considerations.

What should I expect from AI in 2026?

Based on 2025 trajectories, expect:

- Agent reliability to improve significantly, enabling autonomous workflows in production

- Reasoning depth to increase further, with multi-hour “thinking” modes for complex problems

- Multimodality to become truly seamless, with models that naturally mix text, image, audio, and video

- Open-weight parity to continue, with open models offering 90%+ of proprietary capability

- Efficiency gains to accelerate, making today’s flagship capabilities available on consumer hardware

Is “prompt engineering” still relevant?

Prompt engineering remains relevant but is being subsumed by context engineering—the broader discipline of managing all information fed to an AI system, including system prompts, retrieved documents, tool definitions, and conversation history. For agentic applications, context engineering is now more important than prompt optimization. See our prompt engineering fundamentals guide for best practices.
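To make context engineering less abstract, here is a small sketch of one of its core chores: fitting the system prompt, tool definitions, retrieved documents, and conversation history into a fixed token budget. The four-characters-per-token estimate, the priority order, and the function names are all assumptions chosen for illustration. A real system would use the model's tokenizer and its own ranking logic.

```python
def rough_tokens(text: str) -> int:
    """Crude token estimate: about four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def assemble_context(system: str, tools: list[str], documents: list[str],
                     history: list[str], budget: int) -> list[str]:
    """Always keep system prompt and tools, then fill the rest of the budget.

    Newest history turns are kept first; documents (assumed already ranked)
    are added only if they still fit.
    """
    fixed = [system, *tools]
    used = sum(rough_tokens(part) for part in fixed)
    if used > budget:
        raise ValueError("system prompt and tool definitions exceed the budget")

    kept_history: list[str] = []
    for turn in reversed(history):      # walk from newest to oldest
        cost = rough_tokens(turn)
        if used + cost > budget:
            break                       # older turns are dropped together
        kept_history.append(turn)
        used += cost
    kept_history.reverse()              # restore chronological order

    kept_docs: list[str] = []
    for doc in documents:
        cost = rough_tokens(doc)
        if used + cost <= budget:
            kept_docs.append(doc)
            used += cost

    return fixed + kept_docs + kept_history


if __name__ == "__main__":
    parts = assemble_context(
        system="You are a support agent.",
        tools=['{"name": "lookup_order"}'],
        documents=["Refund policy: 30 days.", "Shipping FAQ " * 50],
        history=["user: hi", "assistant: hello", "user: where is my order?"],
        budget=40,
    )
    print(parts)
```

The interesting decisions are all in the ordering: what is never dropped, what is dropped first, and who gets the leftover space. Those choices often matter more for an agent than the wording of any single prompt.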

References & Sources

This timeline draws from official announcements, press releases, and reputable industry reporting. Key sources by organization:

OpenAI

- OpenAI Blog — Official announcements for GPT-5, o3-mini, Operator, Sora, and API updates

- OpenAI API Documentation — Technical specifications and pricing

Anthropic

- Anthropic News — Claude model releases, Claude Code, and safety research

- Anthropic Research — Technical papers on reasoning and agents

Google DeepMind

- Google Blog - AI — Gemini releases, Google I/O announcements

- DeepMind Blog — Research breakthroughs like AlphaEvolve and Gemini Robotics

- Google for Developers — Gemini API and developer tools

DeepSeek

- DeepSeek GitHub — Open-weight model releases and documentation

- arXiv — Technical papers (DeepSeek-R1, V3 series)

Alibaba Cloud / Qwen

- Qwen Blog — Model releases and technical updates

- Hugging Face - Qwen — Model weights and documentation

Moonshot AI

- Kimi Platform — Kimi model access

- GitHub - MoonshotAI — Open-weight releases

Mistral AI

- Mistral Blog — Model announcements and research

- Hugging Face - Mistral AI — Open-weight models

xAI

- xAI Blog — Grok releases and research

Meta

- Meta AI Blog — Llama releases and research

NVIDIA

- NVIDIA Developer Blog — Nemotron models, NIM microservices

- NVIDIA GTC — Annual conference announcements

Industry Coverage

- Reuters Technology — Breaking news and industry analysis

- The Verge AI — Product launches and reviews

- Ars Technica AI — Technical analysis

- SWE-bench — Coding benchmark leaderboards

- LMArena — Model comparison leaderboard

Regulatory Sources

- EU AI Act — European regulation

- US Congress AI Legislation — US policy developments

This article was last updated December 31, 2025. The AI field moves rapidly; while all information was accurate at time of writing, newer developments may have occurred since publication.